These are the ramblings of Matthijs Kooijman, concerning the software he hacks on, hobbies he has and occasionally his personal life.

Most content on this site is licensed under the WTFPL, version 2 (details).

Questions? Praise? Blame? Feel free to contact me.

My old blog (pre-2006) is also still available.

See also my Mastodon page.


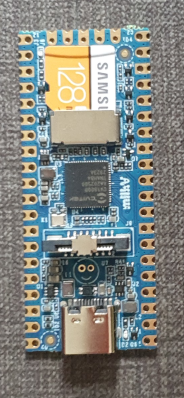

For a project (building a low-power LoRaWAN gateway to be solar powered) I am looking at simple and low-power linux boards. One board that I came across is the Milk-V Duo, which looks very promising. I have been playing around with it for just a few hours, and I like the board (and its SoC) very much already - for its size, price and open approach to documentation.

The board itself is a small (21x51mm) board in Raspberry Pi Pico form factor. It is very simple - essentially only a SoC and regulators. The SoC is the CV1800B by Sophgo (a vendor I had never heard of until now; it seems they were called CVITEK before). It is based on the RISC-V architecture, which is nice. It contains two RISC-V cores (one at 1GHz and one at 700MHz), as well as a small 8051 core for low-level tasks. The SoC has 64MB of integrated RAM.

The SoC supports the usual things - SPI, I²C, UART. There is also a CSI (camera) connector and some AI acceleration block; it seems the chip is targeted at the AI computer vision market (but I am ignoring that for my use case). The SoC also has an Ethernet controller and PHY integrated (but no Ethernet connector, so you still need an external magjack to use it). My board has an SD card slot for booting. The specs suggest that there might also be a version with on-board NAND flash instead of SD (you cannot combine both, since they use the same pins).

There are two other variants - the Duo 256M with more RAM (board is identical except for 1 extra power supply, just uses a different SoC with more RAM) and the Duo S (in what looks like Rock Pi S form factor) which adds an ethernet and USB host port. I have not tested either of these and they use a different SoC series (SG200x) of the same chip vendor, so things I write might or might not be applicable to them (but the chips might actually be very similar internally, the CVx to SGx change seems to be related to the company merger, not necessarily technical differences).

The biggest (or at least most distinguishing) selling point, to me, is that both the chip and board manufacturers seem to be going for a very open approach. In particular:

Full datasheets for the SoC are available (the datasheets could be a bit more detailed, but I am under the impression that this is still a bit of a work-in-progress, not that there is a more detailed datasheet under NDA).

The tables (e.g. pinout tables) are not in the datasheet PDF, but separately distributed as a spreadsheet, which is super convenient.

For the SG200x chips, the datasheet is created using reStructuredText (a text format a bit like markdown but more expressive), and the rst source files are available on github under a BSD license. This is really awesome: it makes contributions to the documentation very easy, and (if they structure things properly when more chips are added) could make it very easy to see which peripherals are the same or different between different chips.

Sophgo seems to be actively trying to get their chips supported by mainline linux (maybe by contributing code directly, or at least by supporting the community to do so), which should make it a lot easier to work with their chips in the future.

Other vendors often just drop a heavily customized and usually older kernel or BSP out there, sometimes updating it to newer versions but not always, and relying on other people to do the work of cleaning up the code and submitting it to linux.

The second core can be used as a microcontroller: Milk-V supports running FreeRTOS on it and provides an Arduino core for it (I have not yet looked at whether that is any good). It seems the first core then remains active running Linux, providing a way to flash the secondary core through the primary core.

All this is based on fairly quick observations, so maybe things are not as open and nice as they seem at first glance, but it looks like something cool is going on here at least.

Other things I like about the board:

- It is small and simple and fits in a breadboard.

- It is power-efficient: a first measurement with the default buildroot-based Linux shows it idling at 200mW-250mW (around 45mA at 5V), which is a lot less than other SBCs I have tried so far. This was just a quick measurement; I still have to do more detailed measurements with load and under other circumstances.

- It is cheap: only $5 for the 64M Duo.

- The packaging is very neat: a very tightly fitting cardboard box (and antistatic bag of course).

- The default image presents itself as a USB Ethernet device, allowing direct connectivity via USB.
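As a rough sanity check of the idle figure above, power is simply voltage times current. The daily energy figure additionally assumes (as a simplification, not a measurement) that the board idles around the clock, which gives a first idea of what a solar setup would need to supply:

$$P = V \cdot I = 5\,\mathrm{V} \times 45\,\mathrm{mA} = 225\,\mathrm{mW}$$

$$E_{\text{day}} = 0.225\,\mathrm{W} \times 24\,\mathrm{h} = 5.4\,\mathrm{Wh}$$

Under load the consumption will be higher, so this is a lower bound rather than a budget.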

There are also some (potential) downsides that might complicate matters:

Only 64MB of RAM is very limited. In practice, some RAM is also used for peripherals (I think): the default buildroot image has something like half of the RAM available to Linux. Other images configure this differently, so the full RAM is available to the kernel (leaving 55M for userspace). See this forum topic for more details.

The low memory limits your options: people have reported that apt needs around 50M to work, which means it ends up using swap and is super slow.

The official Linux distribution from Milk-V is a buildroot-built image, which means all software is built into the image directly, with no package manager to install extra software afterwards.

The buildroot files are available, so it should be easy to build your own image with extra software, though I think this does mean compiling everything from source.

There does seem to be a lively community of people working on making other distributions work on these boards. In most cases this means building a custom kernel for this board (with Milk-V/Sophgo patches, often using buildroot) and combining it with existing RISC-V packages or a rootfs from these distributions. Sometimes this comes with instructions or a script; sometimes someone just hand-edited an image to make it work.

Hopefully proper support can be added into the actual distributions as well, though a lot of distributions do not really have the machinery to create bootable images for specific boards (i.e. they only support building images for generic BIOS or EFI booting). One distribution that does have this is Armbian, but that is Debian/apt-based so probably needs more than 64MB RAM.

I have briefly tried the Alpine and Arch Linux images that are available. Alpine is really tiny but, like the official buildroot image, uses musl libc. This is nice and small, but not all software plays well with it (and I think software always needs to be compiled specifically against musl). The main application I needed (the basicstation LoRaWAN forwarder) did not like being compiled against musl (and I did not feel like fixing that, especially since I am doubtful such changes would be merged upstream).

So I am hoping I can use the Arch image, which does use glibc and seems to run basicstation (at least it starts; I have not had the time to really set it up yet). Or maybe a Debian/Ubuntu/Armbian image after all - I have also ordered the 256M version (which was not in stock initially).

For an overview of various images created by the community, see this excellent overview page.

It is not entirely clear to me what bootloader is used and how the devicetree is managed. On most single-board Linux devices I know, there is u-boot with a boot script, which can be configured to load different devicetrees and overlays to allow configuring the hardware (e.g. remapping pins as SPI or I²C pins). On the buildroot image for the Duo, I could find no evidence of any of this in /boot, but I did see u-boot being mentioned in some places, so maybe it is just configured differently.

Even though the documentation is very open, some of it is a bit hard to find and spread around. Here are some places I found:

- Spreadsheets and PDF datasheets for the CV1800B chip and schematics for the Duo board: https://github.com/milkv-duo/duo-files

- Datasheets (rst source and PDF) for the SG200x chips: https://github.com/sophgo/sophgo-doc/

- Documentation about the Milk-V boards: https://milkv.io/docs/duo/overview

- Links to Cvitek docs (which I could not find linked from anywhere else): https://milkv.io/docs/duo/resources/mmf

- The Milk-V forum about the Duo (mostly English, partly Chinese): https://community.milkv.io/c/duo/5

- The English part of the Sophgo forum about the Duo: https://forum.sophgo.com/c/duo-180-english-forum/12

- The Milk-V chip pages have a bunch of PDF documents for each chip: https://milkv.io/chips

The USB data pins are available externally, but only on two pads that need pogo pins or something like that to connect to them. It would have been more convenient if these had a proper pin header.

Some of the hardware setup is done with shell scripts that run on startup (for example the USB networking), some of which actually do raw register writes. This is probably something that will be fixed when kernel support improves, but can be fragile until then.

Sales are still quite limited - most of the suppliers linked from the manufacturer pages seem to be China-only, and I have not found the boards at any European webshop yet. I have now ordered from Arace Tech, a Chinese webshop that ships internationally and worked well for me (except for the to-be-expected long shipping time of a couple of weeks).

So, that was my first impression and thoughts. If I manage to get things running and use this board as part of my LoRaWAN gateway design, I'll probably post a followup with some more experiences. If you have used this board and have things to share, I'm happy to hear them!

On my server, I use LVM for managing partitions. I have one big "data" partition that is stored on an HDD, but for a bit more speed, I have an LVM cache volume linked to it, so commonly used data is cached on an SSD for faster read access.

Today, I wanted to resize the data volume:

```
# lvresize -L 300G tika/data
  Unable to resize logical volumes of cache type.
```

Bummer. Googling for the error message showed me some helpful posts here and here that told me you have to remove the cache from the data volume, resize the data volume and then set up the cache again.

For this, they used lvconvert --uncache, which detaches and deletes the cache volume or cache pool completely, so you then have to recreate the entire cache (and thus figure out how you created it in the first place).

Trying to understand my own work from long ago, I looked through the documentation and found lvconvert --splitcache in lvmcache(7), which detaches a cache volume or cache pool, but does not delete it. This means you can resize and then just reattach the cache again, which is a lot less work (and less error prone).

As an example, here is how the relevant volumes look:

```
# lvs -a
LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert
data tika Cwi-aoC--- 300.00g [data-cache_cvol] [data_corig] 2.77 13.11 0.00
[data-cache_cvol] tika Cwi-aoC--- 20.00g
[data_corig] tika owi-aoC--- 300.00g
```

Here, data is a "cache" type LV that ties together the big data_corig LV that contains the bulk data and the small data-cache_cvol that contains the cached data.

After detaching the cache with --splitcache, this changes to:

```
# lvs -a
LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert
data tika -wi-ao---- 300.00g
data-cache tika -wi------- 20.00g
```

I think the previous data cache LV was removed, data_corig was renamed to data and data-cache_cvol was renamed to data-cache again.

The actual resize

Armed with this knowledge, here's how the full resize works:

```
lvconvert --splitcache tika/data
lvresize -L 300G tika/data @hdd
lvconvert --type cache --cachevol tika/data-cache tika/data --cachemode writethrough
```

The last command might need some additional parameters depending on how you set up the cache in the first place. You can view current cache parameters with e.g. lvs -a -o +cache_mode,cache_settings,cache_policy.
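If you do this more than once, it can help to wrap the three steps so they always run in the right order. Below is a minimal sketch of such a wrapper; the function name resize_cached_lv is hypothetical, and the defaults simply mirror the commands above (VG tika, origin data, cache volume data-cache, the @hdd PV tag and writethrough mode), so adjust them to your own setup. It defaults to a dry run that only prints the commands.

```python
import shlex
import subprocess


def resize_cached_lv(vg, lv, cachevol, size, pv=None,
                     cachemode="writethrough", dry_run=True):
    """Detach a cache volume, resize the origin LV, then reattach the cache.

    Hypothetical helper: returns the commands as argv lists. With
    dry_run=False each command is executed in order, and the first failure
    raises subprocess.CalledProcessError so later steps are not run.
    """
    origin = f"{vg}/{lv}"
    resize = ["lvresize", "-L", size, origin]
    if pv is not None:
        # Optionally restrict the new extents to a PV or PV tag (e.g. "@hdd").
        resize.append(pv)
    commands = [
        # Detach the cache but keep the cache volume (unlike --uncache).
        ["lvconvert", "--splitcache", origin],
        resize,
        # Reattach the existing cache volume; lvconvert may ask you to
        # confirm that the old cache contents are wiped.
        ["lvconvert", "--type", "cache", "--cachevol", f"{vg}/{cachevol}",
         origin, "--cachemode", cachemode],
    ]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
    return commands
```

For example, resize_cached_lv("tika", "data", "data-cache", "300G", pv="@hdd") prints exactly the three commands listed above; pass dry_run=False (as root) to actually run them.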

Cache pools

Note that all of this assumes using a cache volume and not a cache pool. I was originally using a cache pool setup, but it seems that a cache pool (which splits cache data and cache metadata into different volumes) is mostly useful if you want to split data and metadata over different PVs, which is not at all useful for me. So I switched to the cache volume approach, which needs fewer commands and volumes to set up.

I killed my cache pool setup with --uncache before I found out about --splitcache, so I did not actually try --splitcache with a cache pool, but I think the procedure is pretty much identical to the one described above, except that you need to replace --cachevol with --cachepool in the last command.

For reference, here's what my volumes looked like when I was still using a cache pool:

```
# lvs -a
LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert
data tika Cwi-aoC--- 260.00g [data-cache] [data_corig] 99.99 19.39 0.00
[data-cache] tika Cwi---C--- 20.00g 99.99 19.39 0.00
[data-cache_cdata] tika Cwi-ao---- 20.00g
[data-cache_cmeta] tika ewi-ao---- 20.00m
[data_corig] tika owi-aoC--- 260.00g
```

This is a data volume of type cache that ties together the big data_corig LV that contains the bulk data and a data-cache LV of type cache-pool that ties together the data-cache_cdata LV with the actual cache data and data-cache_cmeta with the cache metadata.

Interesting, thanks for your post. --splitcache sounds very neat but as far as I can tell the main advantage is speed (vs --uncache). When you run lvconvert to restore the existing cache you are only allowed to proceed if you accept that the entire existing cache contents are wiped.

Yeah, I think the end result is the same, it's just easier to use --splitcache indeed.

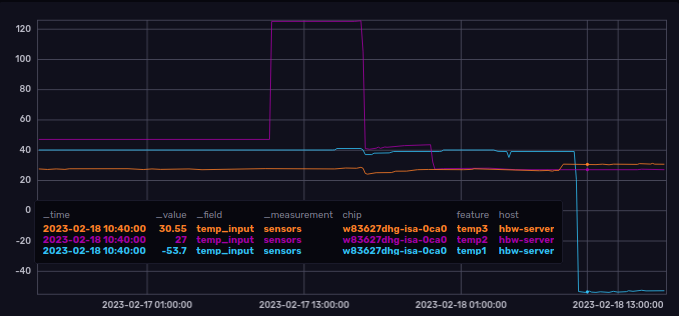

A few months ago, I put up an old Atom-powered Supermicro server (SYS-5015A-PHF) again, to serve at De War to collect and display various sensor and energy data about our building.

The server turned out to have an annoying habit: every now and then it would start beeping (one continuous annoying beep) and keep going until the machine was rebooted. It happened sporadically, but kept coming back. When I used this machine before, it was located in a datacenter where nobody would care about a beep more or less (so maybe it had been beeping for years on end before I replaced the server), but now it was in a server cabinet inside our local Fablab, where there are plenty of people to become annoyed by a beeping server...

I eventually traced this back to faulty sensor readings and fixed this by disabling the faulty sensors completely in the server's IPMI unit, which will hopefully prevent the annoying beep. In this post, I'll share my steps, in case anyone needs to do the same.

Shorting fan pins

At first, I noticed that there was an alarm displayed in the IPMI web interface for one of the fans. Of course it makes sense to be notified of a faulty fan, except that the system did not have any fans connected... It did show the fan speed as 0RPM (or -2560RPM depending on where you looked) as expected, so I suspected it would start up realizing there was no fan, but then sporadically see a bit of electrical noise on the fan speed pin, causing it to mark the fan as present and immediately as not running, triggering the alarm. I tried to fix this by shorting the fan speed detection pins to the GND pins to make it more noise-resilient.

Temperatures also wonky

However, a couple of weeks later, the server started beeping again. This time I looked a bit more closely, and found that the problem was caused by a temperature reading that was too high. The IPMI system event log (queried using ipmi-sel) showed:

```
43 | Feb-17-2023 | 09:18:58 | CPU Temp | Temperature | Upper Non-critical - going high ; Sensor Reading = 125.00 C ; Threshold = 85.00 C
44 | Feb-17-2023 | 09:18:58 | CPU Temp | Temperature | Upper Critical - going high ; Sensor Reading = 125.00 C ; Threshold = 90.00 C
45 | Feb-17-2023 | 09:18:58 | CPU Temp | Temperature | Upper Non-recoverable - going high ; Sensor Reading = 125.00 C ; Threshold = 95.00 C
46 | Feb-17-2023 | 16:26:16 | CPU Temp | Temperature | Upper Non-recoverable - going high ; Sensor Reading = 41.00 C ; Threshold = 95.00 C
47 | Feb-17-2023 | 16:26:16 | CPU Temp | Temperature | Upper Critical - going high ; Sensor Reading = 41.00 C ; Threshold = 90.00 C
48 | Feb-17-2023 | 16:26:16 | CPU Temp | Temperature | Upper Non-critical - going high ; Sensor Reading = 41.00 C ; Threshold = 85.00 C
```

This is a bit opaque, but the events at 9:18 show the temperature was read as 125°C, clearly indicating a faulty sensor. These are (I presume) the "asserted" events for each of the thresholds that this sensor has. Then at 16:26, the server was rebooted and the sensor read 41°C again (which I believe is still higher than realistic) and each of the thresholds emits a "deasserted" event.
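To scan a longer event log for this kind of bogus reading, the lines can be split on the pipe characters and the reading and threshold pulled out of the event text. This is a minimal sketch that assumes exactly the six-column layout shown above (other ipmi-sel versions or options may print different columns); parse_sel_line, implausible and the -20..110°C plausibility range are hypothetical choices for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Extracts e.g. "125.00" and "85.00" from
# "... ; Sensor Reading = 125.00 C ; Threshold = 85.00 C".
_VALUES = re.compile(r"Sensor Reading = (-?[\d.]+).*?Threshold = (-?[\d.]+)")


@dataclass
class SelEvent:
    record_id: int
    date: str
    time: str
    sensor: str
    sensor_type: str
    description: str
    reading: Optional[float]
    threshold: Optional[float]


def parse_sel_line(line: str) -> SelEvent:
    """Parse one pipe-separated ipmi-sel line (six columns, as shown above)."""
    record_id, date, time, sensor, sensor_type, event = (
        field.strip() for field in line.split("|", 5)
    )
    # The event text itself uses ";" to separate description and values.
    description = event.split(";")[0].strip()
    match = _VALUES.search(event)
    reading = float(match.group(1)) if match else None
    threshold = float(match.group(2)) if match else None
    return SelEvent(int(record_id), date, time, sensor, sensor_type,
                    description, reading, threshold)


def implausible(event: SelEvent, low: float = -20.0, high: float = 110.0) -> bool:
    """Flag readings outside a (hypothetical) physically plausible range."""
    return event.reading is not None and not (low <= event.reading <= high)
```

Applied to the log above, events 43-45 (125°C) are flagged as implausible, while events 46-48 (41°C) are not.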

Looking back, I noticed that the log showed events for both fans and both temperature sensors, so it seemed all of these sensors were really wonky. I could also see the incorrect temperatures clearly in the sensor data I had been collecting from the server (using telegraf, collected using lm-sensors from within the Linux system itself, but clearly reading from the same sensor as IPMI):

Note that the graph above shows three sensors, while IPMI only reads two, so I am not sure what the third one is. The alarm from the IPMI log is shown clearly as a sudden jump of the purple temp2 line (jumping back down when the server was rebooted). But also note an unexplained second jump down a few hours later, and note that the next day temp1 dives down to -53°C for some reason, which also matches what IPMI reads:

```
$ sudo ipmitool sensor
System Temp | -53.000 | degrees C | nr | -9.000 | -7.000 | -5.000 | 75.000 | 77.000 | 79.000
CPU Temp | 27.000 | degrees C | ok | -11.000 | -8.000 | -5.000 | 85.000 | 90.000 | 95.000
CPU FAN | -2560.000 | RPM | nr | 400.000 | 585.000 | 770.000 | 29260.000 | 29815.000 | 30370.000
SYS FAN | -2560.000 | RPM | nr | 400.000 | 585.000 | 770.000 | 29260.000 | 29815.000 | 30370.000
CPU Vcore | 1.160 | Volts | ok | 0.640 | 0.664 | 0.688 | 1.344 | 1.408 | 1.472
Vichcore | 1.040 | Volts | ok | 0.808 | 0.824 | 0.840 | 1.160 | 1.176 | 1.192
+3.3VCC | 3.280 | Volts | ok | 2.816 | 2.880 | 2.944 | 3.584 | 3.648 | 3.712
VDIMM | 1.824 | Volts | ok | 1.448 | 1.480 | 1.512 | 1.960 | 1.992 | 2.024
+5 V | 5.056 | Volts | ok | 4.096 | 4.320 | 4.576 | 5.344 | 5.600 | 5.632
+12 V | 11.904 | Volts | ok | 10.368 | 10.496 | 10.752 | 12.928 | 13.056 | 13.312
+3.3VSB | 3.296 | Volts | ok | 2.816 | 2.880 | 2.944 | 3.584 | 3.648 | 3.712
VBAT | 2.912 | Volts | ok | 2.560 | 2.624 | 2.688 | 3.328 | 3.392 | 3.456
Chassis Intru | 0x0 | discrete | 0x0000| na | na | na | na | na | na
PS Status | 0x1 | discrete | 0x01ff| na | na | na | na | na | na
```

Note that the voltage sensors show readings that do make sense, and looking at the history, they show no sudden jumps, so those are probably still reliable (even though they are read from the same sensor chip according to lm-sensors).
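The status column in this output follows from comparing the reading against the six threshold columns, which are (left to right) lower non-recoverable, lower critical, lower non-critical, upper non-critical, upper critical and upper non-recoverable. The CPU Temp upper thresholds (85, 90, 95) indeed match the ones in the event log above. The sketch below reproduces that classification; it assumes strict comparisons at the thresholds (the BMC's exact behaviour at equality may differ), and the function names are hypothetical.

```python
from typing import List, Optional, Tuple


def parse_sensor_line(line: str) -> Tuple[str, str, str, str, List[Optional[float]]]:
    """Split one `ipmitool sensor` line into name, value, unit, status and the
    six thresholds (LNR, LCR, LNC, UNC, UCR, UNR); "na" becomes None."""
    fields = [field.strip() for field in line.split("|")]
    name, value, unit, status = fields[:4]
    thresholds = [None if t == "na" else float(t) for t in fields[4:10]]
    return name, value, unit, status, thresholds


def classify(reading: float, thresholds: List[Optional[float]]) -> str:
    """Return ipmitool-style status: "nr", "cr", "nc" or "ok"."""
    lnr, lcr, lnc, unc, ucr, unr = thresholds

    def below(limit: Optional[float]) -> bool:
        return limit is not None and reading < limit

    def above(limit: Optional[float]) -> bool:
        return limit is not None and reading > limit

    if below(lnr) or above(unr):
        return "nr"  # non-recoverable
    if below(lcr) or above(ucr):
        return "cr"  # critical
    if below(lnc) or above(unc):
        return "nc"  # non-critical
    return "ok"
```

With the output above, System Temp (-53°C, below the -9°C lower non-recoverable limit) and the fans (-2560RPM, below 400RPM) come out as "nr", while the voltages stay "ok". Discrete sensors such as Chassis Intru have no thresholds and should be skipped rather than classified.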

Disabling the sensors

It seems you can disable the generation of events when a threshold is crossed, and even disable reading the sensor entirely. Hopefully this will also prevent the BMC from beeping on weird sensor values.

To disable things, I used ipmi-sensors-config (from the freeipmi-tools Debian package):

First, I queried the current sensor configuration:

```
sudo ipmi-sensors-config --checkout > ipmi-sensors-config.txt
```

Then I edited the generated file, setting Enable_All_Event_Messages and Enable_Scanning_On_This_Sensor to No. I also had to set the hysteresis values for the fans to None, since the -2375 value generated by --checkout was refused when writing back the values in the next step.

Finally, I committed the changes with:

```
sudo ipmi-sensors-config --commit --filename ipmi-sensors-config.txt
```

I suspect that modifying Enable_All_Event_Messages allows the sensor to be read, but prevents the threshold from being checked and generating events (especially since this setting seems to just clear the corresponding setting for each available threshold, so it seems you can also use this to disable some of the thresholds and keep some others). However, it is not entirely clear to me if this would just prevent these events from showing up in the event log, or if it would actually prevent the system from beeping (when does the system beep? On any event? Specific events? This is not clear to me).

For good measure, I decided to also modify Enable_Scanning_On_This_Sensor, which I believe prevents the sensor from being read at all by the BMC, so that should really prevent alarms. This also causes ipmitool sensor to display value and status as na for these sensors. The sensors command (from the lm-sensors package) can still read the sensor without issues (though the values are not very useful anyway...).

Note that apparently these settings are not always persistent across reboots and power cycles, so make sure you test that. For this particular server, the settings survive a reboot; I have not tested a hard power cycle yet.

I cannot yet tell for sure if this has fixed the problem (I only applied the changes today), but I'm pretty confident that this will indeed keep the people in our Fablab happy (and if not - I'll just solder off the beeper from the motherboard, but let's hope I will not have to resort to such extreme measures...).

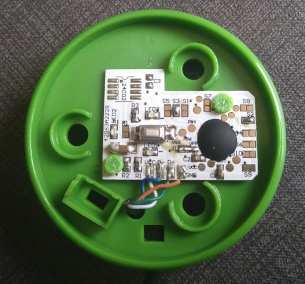

When sorting out some stuff today I came across an "Ecobutton". When you attach it through USB to your computer and press the button, your computer goes to sleep (at least that is the intention).

The idea is that it makes things more sustainable because you can more easily put your computer to sleep when you walk away from it. As this tweakers poster (Dutch) eloquently argues, having more plastic and electronics produced in China, shipped to Europe and sold here for €18 or so probably does not have a net positive effect on the environment or your wallet, but well, given this button found its way to me, I might as well see if I can make it do something useful.

I had previously started a project to make a "Next" button for Spotify that you could carry around and that would (wirelessly, with an ESP8266 inside) skip to the next song using the Spotify API whenever you pressed it. I had a basic prototype working, but then the project got stalled on figuring out an enclosure and finding sufficiently low-power addressable RGB LEDs. Documentation about this is lacking, so I resorted to testing two dozen different types of LEDs and creating a website to collect specs and test results for addressable LEDs, which then ended up in the big collection of other yak-shaving projects waiting for that magical moment when I suddenly have a lot of free time.

In any case, it seemed interesting to see if this Ecobutton could be used as a poor man's Spotify next button. Not super useful, but at least now I can keep the button around knowing I can actually use it for something in the future. I also produced some useful (and not readily available) documentation about remapping keys with hwdb in the process, so it was at least not a complete waste of time... Anyway, on to the technical details...

How does it work?

I expected this to be a USB device that advertises itself as a keyboard, and then, whenever you press the button, sends the "sleep" key to put the computer to sleep.

It turns out the first part was correct, but instead of sending a sleep keypress, it sends Meta-R, ecobutton (it types each of these letters one after the other), enter. It seems you need to install a tool on your PC, which is then started through the Windows-key+R shortcut. Pragmatic, but quite ugly, especially given that a sleep key exists... But maybe Windows does not implement that key (or maybe this tool also changes some settings for deeper sleep; at least that's what was suggested in the tweakers post linked above).

Replacing the firmware?

I considered I could maybe replace the firmware to make the device send whatever keystroke I wanted, but writing firmware from scratch for existing hardware is not the easiest project (even for a simple device like this). After opening the device I decided this was not a feasible route.

The microcontroller (I presume) in there is hidden under a blob, so there is no indication of its type, pin connections or programming ports (if it actually has flash and is not ROM-only).

I did notice some solder jumpers that I figured could influence behavior (maybe the same PCB is used for differently branded buttons), but shorting S1 or S5 did not seem to change behavior (maybe I should have unsoldered S3, but well...).

Remap keys, then?

The next alternative is to remap keys on the computer. Running Linux, this should certainly be possible in a couple of dozen ways. This does need to be device-specific remapping, so that my normal keyboard keeps working as normal, but if I can unmap all keys except for the meta key that it presses first, and map that to something like KEY_NEXTSONG (which is handled by Spotify and/or Gnome already), that might work.

I first saw some remapping solutions for X, but those probably will not work - I'm running wayland and I prefer something more low-level. I also found cool remapping daemons (like keyd) that grab events from a keyboard and then synthesise new events on a virtual keyboard, allowing cool things like multiple layers or tapping shift and then another key to get uppercase instead of having to hold shift and the key together, but that is way more complicated than what I need here.

Then I found that udev has a thing called "hwdb", which allows putting files in /etc/udev/hwdb.d that match specific input devices and can specify arbitrary scancode-to-keycode mappings for them. Exactly what I needed - works out of the box, just drop a file into /etc.

Understanding and taming udev and hwdb

The challenge turned out to be figuring out how to match against my specific keyboard identifier, which scancodes and keycodes to use, and in general how the ecosystem around this works. In short: when a device is plugged in, udev rules consult the hwdb for extra device properties, which a udev builtin keyboard command then uses to apply key remappings in the kernel using an ioctl on the /dev/input/eventxx device. In case you're wondering - this means you do not need to use hwdb; you can also apply this from udev rules directly, but then you need a bit more care.

I've written down everything I figured out about hwdb in a post on Unix Stack Exchange, so I will not repeat everything here.

Using what I had learnt, getting the button to play nice was a matter of creating /etc/udev/hwdb.d/99-ecobutton.hwdb containing:

```
evdev:input:b????v3412p7856e*
 KEYBOARD_KEY_700e3=nextsong # LEFTMETA
 KEYBOARD_KEY_70015=reserved # R
 KEYBOARD_KEY_70008=reserved # E
 KEYBOARD_KEY_70006=reserved # C
 KEYBOARD_KEY_70012=reserved # O
 KEYBOARD_KEY_70005=reserved # B
 KEYBOARD_KEY_70018=reserved # U
 KEYBOARD_KEY_70017=reserved # T
 KEYBOARD_KEY_70011=reserved # N
 KEYBOARD_KEY_70028=reserved # ENTER
```

This matches the keyboard based on its USB vendor and product ID (3412:7856) and then disables all keys that are used by the button, except for the first, and remaps that one to KEY_NEXTSONG.
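The scancodes in this file are USB HID usages: 0x7 is the keyboard usage page, letters a to z are usages 0x04 to 0x1d, Enter is 0x28 and Left GUI (the meta key) is 0xe3. So for a different key sequence you can compute the entries rather than look each one up. Below is a minimal sketch of that; hwdb_entry is a hypothetical helper that maps the first key to a given target, marks every other distinct key as reserved, and indents property lines with a space as the hwdb format requires. It only knows letters plus the two special keys listed, which is enough for this button.

```python
from typing import Sequence

HID_KEYBOARD_PAGE = 0x70000  # usage page 0x07, shifted into the upper bits
SPECIAL_USAGES = {"LEFTMETA": 0xE3, "ENTER": 0x28}


def hid_usage(key: str) -> int:
    """Return the HID scancode for a letter or one of SPECIAL_USAGES."""
    if len(key) == 1 and key.isalpha():
        return HID_KEYBOARD_PAGE | (0x04 + ord(key.lower()) - ord("a"))
    return HID_KEYBOARD_PAGE | SPECIAL_USAGES[key]


def hwdb_entry(vendor: int, product: int, keys: Sequence[str],
               target: str = "nextsong") -> str:
    """Build an hwdb block remapping keys[0] to target and disabling the rest."""
    lines = [f"evdev:input:b????v{vendor:04X}p{product:04X}e*"]
    seen = set()
    for i, key in enumerate(keys):
        code = hid_usage(key)
        if code in seen:  # letters typed twice only need one entry
            continue
        seen.add(code)
        value = target if i == 0 else "reserved"
        # Property lines must start with a space in hwdb files.
        lines.append(f" KEYBOARD_KEY_{code:x}={value} # {key.upper()}")
    return "\n".join(lines) + "\n"
```

Calling hwdb_entry(0x3412, 0x7856, ["LEFTMETA", "r", *"ecobutton", "ENTER"]) reproduces the file above, entry for entry.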

To apply this new file, run sudo systemd-hwdb update to recompile the database and then replug the button to apply it (you can also re-apply with udevadm trigger, but it seems Gnome then does not pick up the change, I suspect because the gnome-settings media-keys module checks only once whether a keyboard supports media keys at all and ignores it otherwise).

With that done, it now produces KEY_NEXTSONG events as expected:

```
$ sudo evtest --grab /dev/input/by-id/usb-3412_7856-event-if00
Input driver version is 1.0.1
Input device ID: bus 0x3 vendor 0x3412 product 0x7856 version 0x100
Input device name: "HID 3412:7856"
[ ... Snip more output ...]
Event: time 1675514344.256255, type 4 (EV_MSC), code 4 (MSC_SCAN), value 700e3
Event: time 1675514344.256255, type 1 (EV_KEY), code 163 (KEY_NEXTSONG), value 1
Event: time 1675514344.256255, -------------- SYN_REPORT ------------
Event: time 1675514344.264251, type 4 (EV_MSC), code 4 (MSC_SCAN), value 700e3
Event: time 1675514344.264251, type 1 (EV_KEY), code 163 (KEY_NEXTSONG), value 0
Event: time 1675514344.264251, -------------- SYN_REPORT ------------
```

More importantly, I can now skip annoying songs (or duplicate songs - Spotify really messes this up) with a quick button press!

Maybe you missed the fact that it is also possible to keep the button pressed in order to open a website. This could be used for another function by mapping a key that is only included in that link like /.

To map out the other url keys, my file now looks like this:

```
evdev:input:b????v3412p7856e*
 KEYBOARD_KEY_700e3=nextsong # LEFTMETA
 KEYBOARD_KEY_70015=reserved # R
 KEYBOARD_KEY_70008=reserved # E
 KEYBOARD_KEY_70006=reserved # C
 KEYBOARD_KEY_70012=reserved # O
 KEYBOARD_KEY_70005=reserved # B
 KEYBOARD_KEY_70018=reserved # U
 KEYBOARD_KEY_70017=reserved # T
 KEYBOARD_KEY_70011=reserved # N
 KEYBOARD_KEY_7000b=reserved # H
 KEYBOARD_KEY_70013=reserved # P
 KEYBOARD_KEY_700e1=reserved # LEFTSHIFT
 KEYBOARD_KEY_70054=reserved # /
 KEYBOARD_KEY_7001a=reserved # W
 KEYBOARD_KEY_70037=reserved # .
 KEYBOARD_KEY_7002d=reserved # -
 KEYBOARD_KEY_70010=reserved # M
 KEYBOARD_KEY_70016=reserved # S
 KEYBOARD_KEY_70033=reserved # ;
 KEYBOARD_KEY_70028=reserved # ENTER
```

When the button is pressed for 3 seconds, the same happens as with a short press.

> Maybe you missed the fact that it is also possible to keep the button pressed in order to open a website. This could be used for another function by mapping a key that is only included in that link like /.

Ah, I indeed missed that. Thanks for pointing that out and the updated file :-)

Recently, a customer asked me te have a look at an external hard disk he was using with his Macbook. It would show up a file listing just fine, but when trying to open actual files, it would start failing. Of course there was no backup, but the files were very precious...

This started out as a small question, but ended up in an adventure that spanned a few days and took me deep into the ddrescue recovery tool, through the HFS+ filesystem and past USB power port control. I learned a lot, discovered some interesting things and produced a pile of scripts that might be helpful to others. Since the journey seems interesting as well as the end result, I will describe the steps I took here, "ter leering ende vermaeck".

I started out confirming the original problem. Plugging the disk into my Linux laptop, it showed up as expected in dmesg. I could mount the disk without problems, see the directory listing and even open up an image file stored on the disk. Opening other files didn't seem to work.

SMART

As you do with bad disks, you try to get their SMART data. Since smartctl did not support this particular USB bridge (and I wasn't game to try random settings to see if it worked on a failing disk), I gave up on SMART initially. I later opened up the case to bypass the USB-to-SATA controller (in case the problem was there, and to make SMART work), but found that this particular hard drive had the converter built into the drive itself (so the USB part was directly attached to the drive). Even later, I found a page online (I have not saved the link) that showed the disk was indeed supported by smartctl and showed the option to pass to smartctl -d to make it work. SMART confirmed that the disk was indeed failing, based on the number of reallocated sectors (2805).

Fast-then-slow copying

Since opening up files didn't work so well, I prepared to make a sector-by-sector copy of the partition on the disk, using ddrescue. This tool has a good approach to salvaging data, where it tries to copy off as much data as possible quickly, skipping data when it comes to a bad area on disk. Since reading a bad sector on a disk often takes a lot of time (before returning failure), ddrescue tries to steer clear of these bad areas and focus on the good parts first. Later, it returns to these bad areas and, in a few passes, tries to get out as much data as possible.

At first, copying data seemed to work well, with a decent read speed of some 70MB/s as well. But very quickly the speed dropped terribly and I suspected the disk had run into some bad sector and kept struggling with that. I reset the disk (by unplugging it), did a few more attempts and quickly discovered something weird: the disk would work just fine after plugging it in, but after a while the speed would plummet to a whopping 64kB/s or less. This happened every time. Even more, it happened pretty much exactly 30 seconds after I started copying data, regardless of what part of the disk I copied data from.

So I quickly wrote a one-liner script that would start ddrescue, kill it after 45 seconds, wait for the USB device to disappear and reappear, and then start over again. That way, I could spend some time replugging the USB cable about once every minute, so I could at least back up some data while I was investigating other stuff.

With the original speed of 70MB/s, I could pull a few GB worth of data every time. Since it was a 2000GB disk, I "only" had to plug the USB connector around a thousand times. Not entirely infeasible, but not quite comfortable or efficient either.

So I investigated ways to further automate this process: using hdparm to spin down or shut down the disk, using USB power saving to let the disk reset itself, and disabling the USB subsystem completely. Nothing seemed to increase the speed again, other than completely powering down the disk by removing the USB plug.

While I was trying these things, the speed during those first 30 seconds dropped, even below 10MB/s at some point. At that point, I could salvage around 200MB with each power cycle and was looking at pulling the USB plug around 10,000 times: no way that would be happening manually.
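The arithmetic behind these estimates is easy to redo. Below is a back-of-the-envelope Python sketch (the function names are my own), assuming decimal units (1GB = 1000MB) and, as observed above, that only the first ~30 seconds after each power cycle run at full speed:

```python
import math


def mb_per_cycle(speed_mb_s: float, fast_window_s: float = 30) -> float:
    """Data salvaged per power cycle if only the first fast_window_s seconds run at speed_mb_s."""
    return speed_mb_s * fast_window_s


def cycles_needed(disk_gb: float, per_cycle_mb: float) -> int:
    """Power cycles needed to copy disk_gb, rounded up (a partial cycle still costs a cycle)."""
    return math.ceil(disk_gb * 1000 / per_cycle_mb)


# 70MB/s for 30 seconds is about 2.1GB per cycle: roughly a thousand cycles.
print(cycles_needed(2000, mb_per_cycle(70)))  # 953
# Around 200MB per cycle: ten thousand cycles.
print(cycles_needed(2000, 200))  # 10000
```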

Automatically pulling the plug

I resolved to further automate this unplugging and planned to use an Arduino (or perhaps the GPIO of a Raspberry Pi) and something like a relay or transistor to interrupt the power line to the hard disk to "unplug" the hard disk.

For that, I needed my Current measuring board to easily interrupt the USB power lines, which I had to bring from home. In the meantime, I found uhubctl, a small tool that uses low-level USB commands to individually control the port power on some hubs. Most hubs don't support this (or advertise support, but apparently simply don't have the electronics to actually switch power), but I noticed that the newer Raspberry Pis supported this (for port 2 only, but that would be enough).

Coming to the office the next day, I set up a Raspberry Pi and tried uhubctl. It did indeed toggle USB power, but the toggle would affect all USB ports at the same time, rather than just port 2. So I could switch power to the faulty drive, but that would also cut power to the good drive that I was storing the recovered data on, and I was not quite prepared to give the good drive 10,000 power cycles.

The next plan was to connect the recovery drive through the network, rather than directly to the Raspberry Pi. On Linux, setting up a network drive using SSHFS is easy, so that worked within a few minutes. However, ddrescue somehow insisted it could not write to the destination file and logfile, citing permission errors (but the permissions seemed just fine). I suspect it might be trying to mmap the files, or something else that does not work across SSHFS...

The next plan was to find a powered hub, so the recovery drive could stay powered while the failing drive was power cycled. I rummaged around the office looking for USB hubs, and eventually came up with some USB-based docking station that was externally powered. After connecting it, I tried the uhubctl tool on it, and found that one of its six ports actually supported power toggling. So I connected the failing drive to that port, and prepared to start the backup.

When trying to mount the recovery drive, I discovered that a Raspberry Pi only supports filesystems up to 2TB (probably because it uses a 32-bit architecture). My recovery drive was 3TB, so that would not work on the Pi.

Time for a new plan: do the recovery from a regular PC. I already had one ready that I used the previous day, but now I needed to boot a proper Linux on it (previously I used a minimal Linux image from UBCD, but that didn't have a compiler installed to allow using uhubctl). So I downloaded a Debian live image (over a mobile connection - we were still waiting for fiber to be connected) and 1.8GB and 40 minutes later, I finally had a working setup.

The run.sh script I used to run the backup basically does this:

- Run ddrescue to pull off data

- After 35 seconds, kill ddrescue

- Tell the disk to sleep, so it can spin down gracefully before cutting the power.

- Tell the disk to sleep again, since sometimes it doesn't work the first time.

- Cycle the USB power on the port

- Wait for the disk to reappear

- Repeat from the first step.
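The actual run.sh is among the scripts available for download; the Python sketch below only illustrates the same loop and is not that script. The device paths, mapfile name, hub location, port and delays are made-up placeholders, and it assumes ddrescue, hdparm and uhubctl are installed and that it runs as root. One deliberate choice: ddrescue is stopped with SIGINT rather than killed outright, which gives it a chance to write out its mapfile before exiting.

```python
import os
import signal
import subprocess
import time

# Hypothetical placeholders: adjust these to your own setup.
DEVICE = "/dev/sdd"       # the failing disk
PARTITION = "/dev/sdd2"   # the partition being rescued
IMAGE = "backup.img"
MAPFILE = "backup.logfile"
HUB_LOCATION = "1-1"      # hub location, as listed by running uhubctl without arguments
HUB_PORT = "2"
FAST_WINDOW_S = 35        # stop ddrescue before the disk slows down


def ddrescue_cmd() -> list[str]:
    return ["ddrescue", "-d", PARTITION, IMAGE, MAPFILE]


def sleep_cmd() -> list[str]:
    # hdparm -Y asks the drive to enter sleep mode (spinning down first).
    return ["hdparm", "-Y", DEVICE]


def power_cycle_cmd() -> list[str]:
    # "-a cycle" switches the port off, waits "-d" seconds and switches it back on.
    return ["uhubctl", "-l", HUB_LOCATION, "-p", HUB_PORT, "-a", "cycle", "-d", "5"]


def run_ddrescue_for(seconds: float) -> bool:
    """Run ddrescue for at most `seconds`; return True if it exited by itself (e.g. done)."""
    proc = subprocess.Popen(ddrescue_cmd())
    try:
        proc.wait(timeout=seconds)
        return True
    except subprocess.TimeoutExpired:
        # SIGINT (like Ctrl-C) makes ddrescue save its mapfile before exiting.
        proc.send_signal(signal.SIGINT)
        proc.wait()
        return False


def wait_for(path: str, timeout_s: float = 120) -> None:
    """Poll until `path` exists, e.g. until the partition node reappears."""
    deadline = time.monotonic() + timeout_s
    while not os.path.exists(path):
        if time.monotonic() > deadline:
            raise TimeoutError(f"{path} did not reappear")
        time.sleep(1)


def main() -> None:
    while not run_ddrescue_for(FAST_WINDOW_S):
        # Sleep twice, since the first request does not always take effect.
        for _ in range(2):
            subprocess.run(sleep_cmd())
            time.sleep(5)
        subprocess.run(power_cycle_cmd(), check=True)
        wait_for(PARTITION)
```

Calling main() starts the loop, which ends as soon as ddrescue exits within its time window, for example because the copy is complete.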

By now, the speed of recovery had been fluctuating a bit, but was between 10MB/s and 30MB/s. That meant I was looking at several thousand up to ten thousand power cycles and a few days up to a week to back up the complete disk (and more if the speed would drop further).

Selectively backing up

Realizing that there was a fair chance that the disk would indeed get slower, or even die completely due to all these power cycles, I had to assume I could not back up the complete disk.

Since I was making the backup sector by sector using ddrescue, this meant a risk of not getting any meaningful data at all. Files are typically fragmented, so they can be stored anywhere on the disk, possibly spread over multiple areas as well. If you just start copying at the start of the disk, but do not make it to the end, you will have backed up some data, but that data could belong to all kinds of different files. That means that you might have some files in a directory, but not others. Also, a lot of files might only be partially recovered, with the missing parts being read as zeroes. Finally, you will also end up backing up all unused space on the disk, which is rather pointless.

To prevent this, I had to figure out where all kinds of stuff was stored on the disk.

The catalog file

The first step was to make sure the backup file could be mounted (using a loopback device). On my first attempt, I got an error about an invalid catalog.

I looked around for some documentation about the HFS+ filesystem, and found a nice introduction by infosecaddicts.com and a more detailed description at dubeiko.com. The catalog is apparently where the directory structure, filenames and other metadata are all stored together.

This catalog is not in a fixed location (since its size can vary), but its location is noted in the so-called volume header, a fixed-size data structure located 1024 bytes from the start of the partition. More details (including easier-to-read offsets within the volume header) are provided in this example.

Looking at the volume header inside the backup gives me:

root@debian:/mnt/recover/WD backup# dd if=backup.img bs=1024 skip=1 count=1 2> /dev/null | hd
00000000  48 2b 00 04 80 00 20 00  48 46 53 4a 00 00 3a 37  |H+.... .HFSJ..:7|
00000010  d4 49 7e 38 d8 05 f9 64  00 00 00 00 d4 49 1b c8  |.I~8...d.....I..|
00000020  00 01 24 7c 00 00 4a 36  00 00 10 00 1d 1a a8 f6  |..$|..J6........|
                                   ^^^^^^^^^^^ Block size: 4096 bytes
00000030  0e c6 f7 99 14 cd 63 da  00 01 00 00 00 01 00 00  |......c.........|
00000040  00 02 ed 79 00 6e 11 d4  00 00 00 00 00 00 00 01  |...y.n..........|
00000050  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
00000060  00 00 00 00 00 00 00 00  a7 f6 0c 33 80 0e fa 67  |...........3...g|
00000070  00 00 00 00 03 a3 60 00  03 a3 60 00 00 00 3a 36  |......`...`...:6|
00000080  00 00 00 01 00 00 3a 36  00 00 00 00 00 00 00 00  |......:6........|
00000090  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
*
000000c0  00 00 00 00 00 e0 00 00  00 e0 00 00 00 00 0e 00  |................|
000000d0  00 00 d2 38 00 00 0e 00  00 00 00 00 00 00 00 00  |...8............|
000000e0  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
*
00000110  00 00 00 00 12 60 00 00  12 60 00 00 00 01 26 00  |.....`...`....&.|
00000120  00 0d 82 38 00 01 26 00  00 00 00 00 00 00 00 00  |...8..&.........|
00000130  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
*
00000160  00 00 00 00 12 60 00 00  12 60 00 00 00 01 26 00  |.....`...`....&.|
00000170  00 00 e0 38 00 01 26 00  00 00 00 00 00 00 00 00  |...8..&.........|
00000180  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|
*
00000400

00000110  00 00 00 00 12 60 00 00  12 60 00 00 00 01 26 00  |.....`...`....&.|
          ^^^^^^^^^^^^^^^^^^^^^^^ Catalog size, in bytes: 0x12600000
00000120  00 0d 82 38 00 01 26 00  00 00 00 00 00 00 00 00  |...8..&.........|
                      ^^^^^^^^^^^ First extent size, in 4k blocks: 0x12600
          ^^^^^^^^^^^ First extent offset, in 4k blocks: 0xd8238
00000130  00 00 00 00 00 00 00 00  00 00 00 00 00 00 00 00  |................|

I have annotated the parts that refer to the catalog. The contents of the catalog (just like those of all other files) are stored in "extents". An extent is a single, contiguous block of storage that contains (a part of) the content of a file. Each file can consist of multiple extents, to prevent having to move file content around each time things change (i.e. to allow fragmentation).

In this case, the catalog is stored in only a single extent (since the subsequent extent descriptors contain only zeroes). All extent offsets and sizes are in blocks of 4k bytes, so this extent lives at 0xd8238 * 4k = byte 3626205184 (~3.4GiB) and is 0x12600 * 4k = 294MiB long. So I backed up the catalog by adding -i 3626205184 to ddrescue, making it skip ahead to the location of the catalog (and then power cycled a few times until it had copied the needed 294MiB).
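Decoding these fields by hand is error-prone, so here is a small Python sketch that does it. The offsets follow Apple's HFS Plus volume format description (Technical Note TN1150): the 512-byte volume header sits 1024 bytes into the partition, and each special file (allocation, extents, catalog, attributes, startup) is described by an 80-byte fork record holding its size and its first eight extents. The function names are my own, and any extents beyond the first eight (which would be recorded in the extents file) are ignored; that was not an issue here, since each special file used a single extent.

```python
import struct
from typing import NamedTuple

# Offsets of the HFSPlusForkData records within the 512-byte volume header.
FORK_OFFSETS = {
    "allocation": 112,
    "extents": 192,
    "catalog": 272,
    "attributes": 352,
    "startup": 432,
}


class Fork(NamedTuple):
    logical_size: int               # in bytes
    total_blocks: int               # in allocation blocks
    extents: list[tuple[int, int]]  # (start_block, block_count); empty slots dropped


class VolumeHeader(NamedTuple):
    block_size: int
    total_blocks: int
    forks: dict[str, Fork]


def parse_fork(hdr: bytes, offset: int) -> Fork:
    # All fields are big-endian: u64 logicalSize, u32 clumpSize, u32 totalBlocks,
    # followed by eight (u32 startBlock, u32 blockCount) extent descriptors.
    logical_size, _clump_size, total_blocks = struct.unpack_from(">QII", hdr, offset)
    raw = struct.unpack_from(">16I", hdr, offset + 16)
    extents = [(raw[i], raw[i + 1]) for i in range(0, 16, 2) if raw[i + 1] != 0]
    return Fork(logical_size, total_blocks, extents)


def parse_volume_header(hdr: bytes) -> VolumeHeader:
    if hdr[0:2] not in (b"H+", b"HX"):
        raise ValueError(f"no HFS+/HFSX signature: {hdr[0:2]!r}")
    block_size, total_blocks = struct.unpack_from(">II", hdr, 40)
    forks = {name: parse_fork(hdr, off) for name, off in FORK_OFFSETS.items()}
    return VolumeHeader(block_size, total_blocks, forks)


def read_volume_header(image_path: str) -> VolumeHeader:
    with open(image_path, "rb") as f:
        f.seek(1024)  # the volume header starts 1024 bytes into the partition
        return parse_volume_header(f.read(512))


def byte_ranges(vh: VolumeHeader, name: str) -> list[tuple[int, int]]:
    """(offset, length) in bytes of each extent of a special file."""
    return [(start * vh.block_size, count * vh.block_size)
            for start, count in vh.forks[name].extents]
```

For the volume above, byte_ranges(read_volume_header("backup.img"), "catalog") would return [(3626205184, 308281344)]: the values to pass to ddrescue's -i (input position) and -s (size) options.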

After backing up the catalog file, I could mount the image file just fine and navigate the directory structure. Trying to open files would mostly fail, since most files would still read as zeroes.

I did the same for the allocation file (which tracks free blocks), the extents file (which tracks the content of files that are more fragmented and whose extent list does not fit in the catalog) and the attributes file (not sure what that is, but for good measure).

Afterwards, I wanted to continue copying from where I previously left off, so I tried passing -i 0 to ddrescue, but it seems this can only be used to skip ahead, not back. In the end, I just edited the logfile, which is just a text file, to set the current position to 0. ddrescue is smart enough to skip over blocks it already backed up (or marked as failed), so it then continued where it previously left off.
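Since the mapfile is plain text, this edit is easy to script. A minimal Python sketch, assuming the mapfile layout of recent GNU ddrescue versions: comment lines start with #, the first non-comment line holds current_pos, current_status and (in newer versions) current_pass, and the remaining lines list the position, size and status of each block. The function names are hypothetical; keep a copy of the mapfile before trying something like this.

```python
def reset_current_pos(mapfile_text: str, new_pos: int = 0) -> str:
    """Return mapfile_text with current_pos (on the first non-comment line) set to new_pos."""
    lines = mapfile_text.splitlines(keepends=True)
    for i, line in enumerate(lines):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        fields = stripped.split()
        fields[0] = f"0x{new_pos:08X}"  # keep current_status (and current_pass) as-is
        lines[i] = "  ".join(fields) + "\n"
        break
    return "".join(lines)


def reset_mapfile(path: str) -> None:
    """Rewrite the mapfile at `path` in place."""
    with open(path) as f:
        text = f.read()
    with open(path, "w") as f:
        f.write(reset_current_pos(text))
```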

Where are my files?

With the catalog backed up, I needed to read it to figure out which files were stored where, so I could make sure the most important files were backed up first, followed by all other files, skipping any unused space on the disk.

I considered and tried some tools for reading the catalog directly, but none of them seemed workable. I looked at hfssh from hfsutils (which crashed), hfsdebug (which is discontinued and no longer available for download) and hfsinspect (which calls itself "quite buggy").

Instead, I found the filefrag command-line utility that uses a Linux filesystem syscall to figure out where the contents of a particular file are stored on disk. To coax the output of that tool into a list of extents usable by ddrescue, I wrote a one-liner shell script called list-extents.sh:

sudo filefrag -e "$@" | grep '^ ' |sed 's/\.\./:/g' | awk -F: '{print $4, $6}'

Given any number of filenames, it produces a list of (start, size) pairs for each extent in the listed files (in 4k blocks, which is the Linux VFS native block size).
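A rough Python equivalent of list-extents.sh, for those who prefer something less fragile than a grep/sed/awk pipeline. It assumes the usual filefrag -e extent-line layout (extent number, logical start..end, physical start..end, length, ...), which might differ between e2fsprogs versions, and simply skips every line that does not match, such as the header and the summary. The function names are hypothetical, not part of any tool.

```python
import re
import subprocess

# One extent line of `filefrag -e` output looks roughly like:
#    0:        0..     200:     123456..    123656:    201:             last,eof
# i.e. extent number, logical start..end, physical start..end, length, ...
EXTENT_RE = re.compile(r"^\s*\d+:\s*\d+\.\.\s*\d+:\s*(\d+)\.\.\s*\d+:\s*(\d+):")


def parse_filefrag(output: str) -> list[tuple[int, int]]:
    """Extract (physical_start, length) pairs, in filesystem blocks, from filefrag -e output."""
    extents = []
    for line in output.splitlines():
        match = EXTENT_RE.match(line)
        if match:  # header, "File size of ..." and summary lines do not match
            extents.append((int(match.group(1)), int(match.group(2))))
    return extents


def list_extents(paths: list[str]) -> list[tuple[int, int]]:
    """Run filefrag -e on the given files (needs root, like the sudo in the shell version)."""
    result = subprocess.run(["filefrag", "-e", *paths],
                            capture_output=True, text=True, check=True)
    return parse_filefrag(result.stdout)
```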

With the backup image loopback-mounted at /mnt/backup, I could then generate an extent list for a given subdirectory using:

sudo find /mnt/backup/SomeDir -type f -print0 | xargs -0 -n 100 ./list-extents.sh > SomeDir.list

To turn this plain list of extents into a logfile usable by ddrescue, I wrote another small script called post-process.sh that adds the appropriate header, converts from 4k blocks to 512-byte sectors, converts to hexadecimal and sets the right device size (so if you want to use this script, edit it with the right size). It is called simply like this:

./post-process.sh SomeDir.list

This produces two new files: SomeDir.list.done, in which all of the selected files are marked as "finished" (and all other blocks as "non-tried"), and SomeDir.list.notdone, which is reversed (all selected files are marked as "non-tried" and all others are marked as "finished").
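post-process.sh itself is in the download; as an illustration of what such a conversion involves, here is a Python sketch (hypothetical names, not that script) that turns (start, length) pairs in 4k blocks into the two mapfiles. It assumes the mapfile layout of recent GNU ddrescue versions: comment lines starting with #, a current_pos/current_status/current_pass line, then one position, size and status line per block. Since ddrescue mapfiles store positions and sizes in bytes (written in hex), the sketch converts blocks straight to bytes. The device size could come from e.g. blockdev --getsize64 /dev/sdd2.

```python
BLOCK_SIZE = 4096  # filefrag blocks, as produced by list-extents.sh


def read_extent_list(path: str) -> list[tuple[int, int]]:
    """Read "start length" pairs, one per line; lines that are not two numbers are skipped."""
    extents = []
    with open(path) as f:
        for line in f:
            fields = line.split()
            if len(fields) == 2 and all(x.isdigit() for x in fields):
                extents.append((int(fields[0]), int(fields[1])))
    return extents


def merge(extents: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Sort (start, length) extents and merge overlapping or adjacent ones."""
    merged: list[tuple[int, int]] = []
    for start, length in sorted(extents):
        if merged and start <= merged[-1][0] + merged[-1][1]:
            prev_start, prev_length = merged[-1]
            end = max(prev_start + prev_length, start + length)
            merged[-1] = (prev_start, end - prev_start)
        else:
            merged.append((start, length))
    return merged


def make_mapfile(extents: list[tuple[int, int]], device_size: int,
                 inside: str, outside: str) -> str:
    """Mapfile text where bytes covered by `extents` get status `inside`, the rest `outside`."""
    rows = []
    pos = 0
    for start, length in merge(extents):
        start_b, length_b = start * BLOCK_SIZE, length * BLOCK_SIZE
        if start_b > pos:
            rows.append((pos, start_b - pos, outside))
        rows.append((start_b, length_b, inside))
        pos = start_b + length_b
    if pos < device_size:
        rows.append((pos, device_size - pos, outside))
    lines = [
        "# Mapfile generated from a list of extents",
        "# current_pos  current_status  current_pass",
        "0x00000000     ?               1",
        "#      pos        size  status",
    ]
    lines += [f"0x{p:08X}  0x{s:08X}  {status}" for p, s, status in rows]
    return "\n".join(lines) + "\n"
```

make_mapfile(extents, size, "+", "?") gives the equivalent of SomeDir.list.done, and swapping the last two arguments gives SomeDir.list.notdone.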

Backing up specific files

Edit: Elmo pointed out that all the mapfile manipulation with ddrescuelog would not have been needed had I known about ddrescue's --domain-mapfile option, which passes a second mapfile to ddrescue and makes it only process blocks that are marked as finished in that mapfile, while presumably reading and updating the regular mapfile as normal.

Armed with a couple of these logfiles for the most important files on the disk and one for all files on the disk, I used the ddrescuelog tool to tell ddrescue what stuff to work on first. The basic idea is to mark everything that is not important as "finished", so ddrescue will skip over it and only work on the important files.

ddrescuelog backup.logfile --or-mapfile SomeDir.list.notdone | tee todo.original > todo

This uses the ddrescuelog --or-mapfile option, which takes my existing logfile (backup.logfile) and marks all bytes as finished that are marked as finished in the second file (SomeDir.list.notdone). In other words, it marks all bytes that are not part of SomeDir as done. This generates two copies (todo and todo.original) of the result; I'll explain why in a minute.
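To make the --or-mapfile semantics concrete, here is a small Python model of it. It is not how ddrescuelog works internally and reduces every status to just finished ('+') or not, which is all this trick relies on; it assumes the usual GNU ddrescue mapfile layout (# comments, a current-position line, then position/size/status lines in hex), and the names are made up.

```python
def finished_ranges(mapfile_text: str) -> list[tuple[int, int]]:
    """Return sorted (start, end) byte ranges with status '+' (finished) in a mapfile."""
    rows = [line.split() for line in mapfile_text.splitlines()
            if line.strip() and not line.lstrip().startswith("#")]
    ranges = []
    for fields in rows[1:]:  # rows[0] is the current_pos/current_status line
        pos, size, status = int(fields[0], 16), int(fields[1], 16), fields[2]
        if status == "+":
            ranges.append((pos, pos + size))
    return sorted(ranges)


def union(a: list[tuple[int, int]], b: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Union of two range lists, merged into sorted, non-overlapping ranges."""
    merged: list[tuple[int, int]] = []
    for start, end in sorted(a + b):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged
```

Any byte not covered by union(finished_ranges(backup), finished_ranges(notdone)) is exactly what ddrescue still has to work on: the parts of SomeDir that were not finished before.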

With the generated todo file, we can let ddrescue run (though I used the run.sh script instead):

# Then run on the todo file
sudo ddrescue -d /dev/sdd2 backup.img todo -v -v

Since the generation of the todo file effectively threw away information (we can no longer see from the todo file what parts of the non-important sectors were already copied, or had errors, etc.), we need to keep the original backup.logfile around too. Using the todo.original file, we can figure out what the last run did, and update backup.logfile accordingly:

ddrescuelog backup.logfile --or-mapfile <(ddrescuelog --xor-mapfile todo todo.original) > newbackup.logfile

Note that you could also use SomeDir.list.done here, but actually comparing todo and todo.original helps in case there were any errors in the last run (so the error sectors will not be marked as done and can be retried later).
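Why this update is safe can be checked with a toy model in Python, where every mapfile is reduced to the set of block numbers it marks as finished (real mapfiles use byte ranges and several statuses, so this only illustrates the set logic; all names are made up):

```python
def update_backup(backup: set[int], todo_original: set[int], todo_after_run: set[int]) -> set[int]:
    """Toy model: each argument is the set of block numbers a mapfile marks as finished."""
    # ddrescuelog --xor-mapfile todo todo.original: blocks finished in exactly one
    # of the two, i.e. what the last run finished (ddrescue never "unfinishes" blocks).
    progress = todo_after_run ^ todo_original
    # ddrescuelog backup.logfile --or-mapfile <(...)
    return backup | progress


# A 10-block disk where SomeDir occupies blocks 2-5 and blocks 0-2 were already done.
ALL_BLOCKS = set(range(10))
some_dir = {2, 3, 4, 5}
backup = {0, 1, 2}
todo_original = backup | (ALL_BLOCKS - some_dir)  # backup.logfile OR SomeDir.list.notdone
todo_after_run = todo_original | {3, 5}             # block 4 turned out to be unreadable
new_backup = update_backup(backup, todo_original, todo_after_run)
print(sorted(new_backup))  # [0, 1, 2, 3, 5]
```

Blocks 6 to 9, which were only marked finished to keep ddrescue away from them, do not leak into the new backup.logfile, and the unreadable block 4 stays available for a later retry.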

With backup.logfile updated, I could move on to the next subdirectories, and once all of the important stuff was done, I did the same with a list of all file contents to make sure that all files were properly backed up.

But wait, there's more!

Now I had the contents of all files backed up, so the data was nearly safe. I did however find that the disk contained a number of hardlinks and/or symlinks, which did not work. I did not dive into the details, but it seems that some of the metadata and perhaps even file content is stored in a special "metadata directory", which is hidden by the Linux filesystem driver. So my filefrag-based "All files" method above did not back up sufficient data to actually read these link files from the backup.

I could have figured out where on disk these metadata files were stored and do a backup of that, but then I still might have missed some other special blocks that are not part of the regular structure. I could of course back up every block, but then I would be copying around 1000GB of mostly unused space, of which only a few MB or GB would actually be relevant.

Instead, I found that HFS+ keeps an "allocation file". This file contains a single bit for each block in the filesystem, to store whether the block is allocated (1) or free (0). Simply looking at this bitmap and backing up all blocks that are allocated should make sure I had all data, and only left unused blocks behind.

The position of this allocation file is stored in the volume header, just like the catalog file. In my case, it was stored in a single extent, making it fairly easy to parse.

The volume header says:

00000070  00 00 00 00 03 a3 60 00  03 a3 60 00 00 00 3a 36  |......`...`...:6|
          ^^^^^^^^^^^^^^^^^^^^^^^ Allocation file size, in bytes: 0x3a36000
00000080  00 00 00 01 00 00 3a 36  00 00 00 00 00 00 00 00  |......:6........|
                      ^^^^^^^^^^^ First extent size, in 4k blocks: 0x3a36
          ^^^^^^^^^^^ First extent offset, in 4k blocks: 0x1

This means the allocation file takes up 0x3a36 blocks (of 4096 bytes, of 8 bits each), so it can store the status of 0x3a36 * 4k * 8 = 0x1d1b0000 blocks, which is rounded up from the total size of 0x1d1aa8f6 blocks.

First, I got the allocation file off the disk image (this uses bash arithmetic expansion to convert hex to decimal; you can also do this manually):

dd if=/dev/backup of=allocation bs=4096 skip=1 count=$((0x3a36))

Then, I wrote a small Python script parse-allocation-file.py to parse the allocation file and output a ddrescue mapfile. I started out in bash, but that got tricky with bit manipulation, so I quickly converted to Python. The first attempt at this script would just output a single line for each block, letting ddrescuelog merge adjacent blocks, but that produced such a large file that I stopped it and improved the script to do the merging directly.
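The real parse-allocation-file.py is among the downloadable scripts; the following is my own Python sketch of the same idea, not that script. It assumes the bit order described in Apple's Technical Note TN1150 (the most significant bit of the first byte is allocation block 0), 4096-byte blocks, and the GNU ddrescue mapfile layout (# comments, a current-position line, then position/size/status lines in hex). Runs of equal bits are merged while scanning, and whole 0x00/0xFF bytes are skipped, since a pure-Python bit loop over roughly 488 million blocks would otherwise be slow.

```python
BLOCK_SIZE = 4096


def allocation_runs(bitmap: bytes, total_blocks: int):
    """Yield (first_block, block_count, allocated) for each run of equal bits."""
    run_start, run_state = 0, None
    block = 0
    while block < total_blocks:
        byte = bitmap[block // 8]
        # Fast path: skip a whole 0x00/0xFF byte that just continues the current run.
        if (block % 8 == 0 and block + 8 <= total_blocks
                and byte in (0x00, 0xFF) and (byte == 0xFF) == run_state):
            block += 8
            continue
        # Most significant bit first: bit 7 of byte 0 is block 0.
        allocated = bool(byte & (0x80 >> (block % 8)))
        if allocated != run_state:
            if run_state is not None:
                yield run_start, block - run_start, run_state
            run_start, run_state = block, allocated
        block += 1
    if run_state is not None:
        yield run_start, total_blocks - run_start, run_state


def allocated_notdone(bitmap: bytes, total_blocks: int) -> str:
    """Mapfile text with free blocks as finished ('+') and allocated ones as non-tried ('?')."""
    lines = [
        "# current_pos  current_status  current_pass",
        "0x00000000     ?               1",
        "#      pos        size  status",
    ]
    for first, count, allocated in allocation_runs(bitmap, total_blocks):
        status = "?" if allocated else "+"
        lines.append(f"0x{first * BLOCK_SIZE:08X}  0x{count * BLOCK_SIZE:08X}  {status}")
    return "\n".join(lines) + "\n"
```

For this volume, allocated_notdone(open("allocation", "rb").read(), 0x1d1aa8f6) would produce the equivalent of Allocated.notdone, using the total block count from the volume header.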

cat allocation | ./parse-allocation-file.py > Allocated.notdone

This produces an Allocated.notdone mapfile, in which all free blocks are marked as "finished", and all allocated blocks are marked as "non-tried".

As a sanity check, I verified that there was no overlap between the non-allocated areas and all files (i.e. the output of the following command showed no done/rescued blocks):

ddrescuelog AllFiles.list.done --and-mapfile Allocated.notdone | ddrescuelog --show-status -

Then, I looked at how much data was allocated, but not part of any file:

ddrescuelog AllFiles.list.done --or-mapfile Allocated.notdone | ddrescuelog --show-status -

This marked all non-allocated areas and all files as done, leaving a whopping 21GB of data that was somehow in use, but not part of any files. This size includes stuff like the volume header, catalog, the allocation file itself, but 21GB seemed a lot to me. It also includes the metadata file, so perhaps there's a bit of data in there for each file on disk, or perhaps the file content of hard linked data?
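To double-check a number like this, it is enough to add up the block sizes per status in a mapfile. A minimal Python sketch with made-up names, assuming the GNU ddrescue mapfile layout (# comments, a current-position line, then position/size/status lines in hex); anything not marked '+' counts as still to do:

```python
def bytes_by_status(mapfile_text: str) -> dict[str, int]:
    """Sum block sizes per status character ('+', '?', '*', '/', '-') in a mapfile."""
    rows = [line.split() for line in mapfile_text.splitlines()
            if line.strip() and not line.lstrip().startswith("#")]
    totals: dict[str, int] = {}
    for _pos, size, status in (row[:3] for row in rows[1:]):  # skip the current_pos line
        totals[status] = totals.get(status, 0) + int(size, 16)
    return totals


def unfinished_bytes(mapfile_text: str) -> int:
    """Total size of everything not marked as finished."""
    return sum(size for status, size in bytes_by_status(mapfile_text).items() if status != "+")
```

Applied to the output of ddrescuelog AllFiles.list.done --or-mapfile Allocated.notdone, unfinished_bytes() should roughly match the figure reported by ddrescuelog --show-status.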

Nearing the end

Armed with my Allocated.notdone file, I used the same commands as before to let ddrescue back up all allocated sectors and made sure all data was safe.

For good measure, I then let ddrescue continue backing up the remainder of the disk (i.e. all unallocated sectors), but it seemed the disk was nearing its end now. The backup speed (even during the "fast" first 30 seconds) had dropped to under 300kB/s, so I was looking at a couple more weeks (and thousands of power cycles) for the rest of the data, assuming the speed did not drop further. Since the rest of the backup should only be unused space, I shut down the backup and focused on the recovered data instead.

What was interesting was that during all this time, the number of reallocated sectors (as reported by SMART) had not increased at all. So it seems unlikely that the slowness was caused by bad sectors (unless the disk firmware somehow tried to recover data from these reallocated sectors in the background and locked itself up in the process). The slowness also did not seem related to what sectors I had been reading. I'm happy that the data was recovered, but I honestly cannot tell why the disk was failing in this particular way...

In case you're in a similar position, the scripts I wrote are available for download.

So, with a few days of work, around a week of crunch time for the hard disk and about 4,000 power cycles, all 1000GB of files were safe again. Time to get back to some real work :-)

You can use the ddrescue -m option to provide a 'domain' mapfile that tells ddrescue to only work on that part of the disk. This avoids all the mapfile manipulation you had to go through.

Thanks for the tip! Had I realized that option existed, it would have saved quite a bit of fiddling with mapfiles. I've added a remark to my post about this!

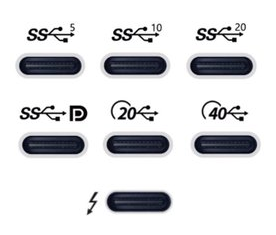

After I recently ordered a new laptop, I have been looking for a USB-C-connected dock to be used with my new laptop. This turned out to be quite complex, given there are really a lot of different bits of technology that can be involved, with various (continuously changing, yes I'm looking at you, USB!) marketing names to further confuse things.

As I'm prone to do, rather than just picking something and seeing if it works, I dug in to figure out how things really work and interact. I learned a ton of stuff in a short time, so I really needed to write this stuff down, both for my own sanity and future self, as well as for others to benefit.

I originally posted my notes on the Framework community forum, but it seemed more appropriate to publish them on my own blog eventually (also because there's no 32,000 character limit here :-p).

There are still quite a few assumptions or unknowns below, so if you have any confirmations, corrections or additions, please let me know in a reply (either here, or in the Framework community forum topic).

Parts of this post are based on info and suggestions provided by others on the Framework community forum, so many thanks to them!

Getting started

First off, I can recommend this article with a bit of overview and history of the involved USB and Thunderbolt technologies.

Then, if you're looking for a dock, like I was, the Framework community forum has a good list of docks (focused on Framework operability), and Dan S. Charlton published an overview of Thunderbolt 4 docks and an overview of USB-C DP-altmode docks (both posts with important specs summarized, and occasional updates too).

Then, into the details...

Lines, lanes, channels, duplex, encoding and bandwidth

- The transmission of high-speed signals almost exclusively uses "differential pairs" for encoding. This means two wires/pins are used to transmit a single signal in a single direction (at the same time). In this post, I will call one such pair a "line".

- A single line can either be simplex (unidirectional, transmitting in the same direction all the time) or half-duplex (alternating between directions).

- Two lines (one for each direction) can be combined to form a full-duplex channel, where data can be transmitted in both directions simultaneously. Sometimes (e.g. in USB, PCIe) such a pair of lines (so 4 wires in total) is referred to as a "(full duplex) lane", but since the term "lane" is also used to refer to a single unidirectional wire pair (e.g. in DisplayPort), I'll use "full duplex channel" for this instead.

- I'll still sometimes use "lane" when referring to specific protocols, but I'll try to qualify what I mean exactly then.

- A line will be operated at a specific bitrate, which is the same as the available bandwidth for that line. Multiple lines can be combined, leading to higher total bandwidth (but the same bitrate).

- To really compare different technologies, it is often useful to look at the bitrate used on the individual lines, for which I'll use Gbps-per-line below. I'll use "total bandwidth" to refer to the sum of all the lines used in either direction, and "full duplex bandwidth" to mean the bandwidth that can be used in both directions at the same time.

- Most of the numbers shown here are for bits on the wire. However, that does not represent the amount of actual data being sent. For example, SATA, PCIe 2.0, USB 3.0, HDMI, and DisplayPort 1.4 transmit 10 bits on the wire for every 8 bits of data, otherwise known as 8b/10b encoding. This means USB 3.0 is more like 4 Gbps instead of 5 Gbps. That data in turn still includes protocol overhead, such as framing, headers, error correction, etc., so the effective data transfer rate (i.e. data written to disk) is lower still.
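As a small worked example of the line-coding part of this overhead, in Python (the function name is made up; protocol overhead is not modelled, and the HBR3 figure uses the per-line rate from the DisplayPort list below):

```python
from fractions import Fraction


def payload_gbps(line_rate_gbps, data_bits: int, coded_bits: int) -> Fraction:
    """Bitrate left for data after line coding, e.g. 8b/10b: data_bits=8, coded_bits=10.

    The rate goes through str() so decimal values such as 32.4 are handled
    exactly rather than as binary floats.
    """
    return Fraction(str(line_rate_gbps)) * data_bits / coded_bits


# USB 3.0: 5Gbps on the wire per direction -> 4Gbps of data per direction.
print(float(payload_gbps(5, 8, 10)))       # 4.0
# DisplayPort 1.4 at HBR3: 4 x 8.1 = 32.4Gbps on the wire -> 25.92Gbps of data.
print(float(payload_gbps("32.4", 8, 10)))  # 25.92
```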

USB1/2/3

- USB1/2: single half-duplex pair, up to 480Mbps.

- USB1/2 link setup happens using pullups/pulldowns on the datalines, always starting up at USB1 speeds (low-speed/full-speed) and then using USB data messages to coordinate the upgrade to USB2 speeds (hi-speed).

- When a device using USB1 speeds ("low speed" or "full speed") is connected to a USB2 hub, the difference in speed is translated using a "Transaction Translator" (TT). Most hubs have a single TT for the entire hub, meaning that when multiple such devices are connected, only one of them can be transmitting at the same time, and they have to share the 12Mbps (full speed) bandwidth amongst them. In practice, this can sometimes lead to issues, especially with devices that need guaranteed bandwidth, like USB audio devices. Note that this applies even to modern devices that support USB2 but only implement the slower transmission speed (often audio devices, mice, keyboards, etc.). Some hubs have multiple TTs, meaning each downstream port has the full 12Mbps available, making multi-TT hubs a better choice (unfortunately, manufacturers usually do not specify this, but you can use lsusb -v to tell).

- USB3.0: Adds two extra lines at 5Gbps-per-line (== 1 full-duplex channel, 10Gbps total bandwidth) using a new "USB3" connector with 4 extra data wires.

- USB3 is a separate protocol from USB1/2 and works exclusively over the newly added lines. This means that a USB3 hub is essentially two fully separate devices in one (USB1/2 hub and USB3 hub) and USB3 traffic and USB1/2 traffic can happen at the same time over the same cable independently.

- USB3.0 link setup uses "LFPS link training" pulses, where both sides just start to transmit on their TX line and listen on their RX line. [source]

- USB3.0 over a USB-C connector only uses 2 of the 4 high-speed lines available in the connector and cable.

- USB3.1 increases single line speed to 10Gbps (with appropriate cabling), for 20Gbps total bandwidth.

- USB3.2 allows use of all 4 high-speed lines in a USB-C connection at the same 10Gbps-per-line speed of 3.1, for 40Gbps total bandwidth.

DisplayPort

[source]

- Displayport is essentially a high-speed 4 line unidirectional connection (main link) for display and audio data, plus one lower speed half-duplex auxiliary channel.

- The auxiliary channel is used for things like EDID (detecting supported resolutions) and CEC (controlling playback and power state of devices). Deciding the link speed to use on the high-speed lines is done using "link training" on those lines themselves, not using the aux channel. [source]

- Unlike VGA/DVI/HDMI, Displayport is based on data packets (similar to ethernet), rather than a continuous (clocked) stream of data on specific pins.

- Displayport speed evolved over time: 4x1.62=6.48Gbps for DP1/RBR, 4x2.7=10.8Gbps for DP1/HBR, 4x5.4=21.6Gbps for DP1.2/HBR2, 4x8.1=32.4Gbps for DP1.3/HBR3, 4x10=40Gbps for DP2.0/UHBR10, 4x13.5=54Gbps for DP2.0/UHBR13.5, 4x20=80Gbps for DP2.0/UHBR20. All versions use the same 4-line connection, just driving more data over the same wires (needing better quality cables). [source]

- Displayport 1.2 introduced optional Display Stream Compression (DSC), which is a "visually lossless" compression that can reduce bandwidth usage by a factor of 3. Starting with DisplayPort 2.0, supporting DSC is mandatory.

- Displayport 1.2 introduced Multi-Stream Transport (MST), which allows sending data for multiple displays (up to 63 in theory) over a single DP link (multiplexed inside the datastream, so without having to dedicate lines to displays). Such an MST signal can then be split by an MST hub (often a 2-port hub inside a display to allow daisy-chaining displays). MST is also possible for DP inside a USB-C or Thunderbolt connection. An MST hub can also use DSC (compression) on the input and uncompressed output, to drive displays that do not support compression. [source]

- Displayport dual-mode (aka DP++) allows sending out HDMI/DVI signals from a Displayport connector. This essentially just repurposes pins on the DP connector to transfer HDMI/DVI signals, so it can be used with a passive adapter or cable (though the adapter does need to do voltage level shifting). This only works to connect a DP source to an HDMI/DVI sink (display), not the other way around. This also repurposes two additional CONFIG1/2 pins from being grounded to carry HDMI/DVI signals, so dual-mode is not available on a USB-C DP alt mode connection. [source]

- Some Displayport devices support Adaptive Sync, where the display refresh rate is synchronized with the GPU framerate. This seems to still work when DisplayPort is tunneled through Thunderbolt or USB4, but often breaks when going through an MST hub (though some explicitly document support for it and probably do work). [source]

Thunderbolt 1/2/3

[source]

- Thunderbolt 1/2 is an Apple/Intel specific protocol that reuses the mini-displayport connector to transfer both PCIe (for e.g. fast storage or external GPUs) and DP signals using four 10Gbps high-speed lines (40Gbps total bandwidth) configured as two 10Gbps full-duplex channels (TB1) or a single 20Gbps full-duplex channel (TB2). [source].

- It is a bit unclear to me how these signals are multiplexed. Apparently TB2 combines all four lines into a single full-duplex channel, suggesting that on TB1 there are two separate channels, but does that mean that one channel carries PCIe and one carries DP on TB1? Or each device connected is assigned to (a part of) either channel?

- TB1/2 are backwards compatible with DP, so you can plug a non-TB DP display into a TB1/2 port. This uses a multiplexer to connect the port to either the TB controller or the GPU. This mux is controlled by snooping the signal lines and detecting if the signals look like DP or TB. In early Light Ridge controllers, this was external circuitry; from Cactus Ridge onwards, all this (including the mux) was integrated in the TB controller. [source: comments below]

- TB1/2/3 ports can be daisy-chained with up to 6 devices in the chain. Hubs (i.e. a split in the connection) are not supported until TB4. The last device in the chain can also be a non-TB DP display. [source]

- USB (1/2/3) over Thunderbolt is not supported directly, but is implemented by putting a full USB host controller in a Thunderbolt hub/dock, which the host then controls through PCIe-over-Thunderbolt.

- Thunderbolt 3 is an upgrade of TB2 that uses a USB-C connector instead of mini-displayport. It uses 4 high-speed lines at 20Gbps-per-line (double that of TB2) to get 40Gbps full-duplex, or 80Gbps total bandwidth (and maybe also the SBU sidechannel line for link management or similar, but I could not confirm this). [source]

- Thunderbolt 4 seems to be essentially just USB4 with all optional items implemented (but USB4 is more like TB3 than USB3...).

- TB1/2/3 are only implemented by Intel chips, and TB1/2 are used almost exclusively by Apple. TB4/USB4 can be implemented by other chip makers too, but I believe this has not happened yet.
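The daisy-chain rules above can be sketched as a small validity check. This is a minimal illustration that is not taken from any Thunderbolt spec. The `Device` class and the `validate_tb_chain` function are hypothetical names. The sketch also assumes that a non-TB display at the end of the chain counts towards the 6-device limit.

```python
from dataclasses import dataclass
from typing import List

MAX_CHAIN_DEVICES = 6  # TB1/2/3 daisy chains allow up to 6 devices


@dataclass
class Device:
    name: str
    is_thunderbolt: bool  # False for e.g. a plain (non-TB) DP display


def validate_tb_chain(chain: List[Device]) -> List[str]:
    """Return the problems found in a TB1/2/3 daisy chain (empty list if OK).

    Without hubs (those only arrive with TB4), a TB1/2/3 topology is just an
    ordered list: each device is plugged into the one before it.
    """
    problems = []
    # Assumption: a non-TB display at the end also counts towards the limit.
    if len(chain) > MAX_CHAIN_DEVICES:
        problems.append(
            f"{len(chain)} devices, but at most {MAX_CHAIN_DEVICES} are supported"
        )
    # A non-TB device has no TB port to continue the chain, so it must be last.
    for position, device in enumerate(chain[:-1], start=1):
        if not device.is_thunderbolt:
            problems.append(
                f"{device.name} (position {position}) is not Thunderbolt, so it must be last"
            )
    return problems
```

For example, `validate_tb_chain([Device("dock", True), Device("monitor", False)])` reports no problems. Swapping the two devices is flagged, because the monitor would then sit in the middle of the chain.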

USB-C

[source]

- USB-C is a connector specification, intended to be used for USB by default, but also for other protocols (usually in addition to USB), and not tied to any particular USB version.

- USB-C has a huge number of pins (24 pins), consisting of:

    - 4 VBUS and 4 GND pins to allow delivering more current,

    - 4 high-speed lines (8 pins), typically used as two full-duplex channels,

    - USB2 D+/D- pins (duplicated, to allow reverse insertion without additional circuitry),

    - Two CC/VCONN pins for low-speed connection setup, USB-PD negotiation, altmode setup, querying the cable, etc.

    - Two SBU sidechannel pins as an additional lower-speed line.

- USB-C cables come in different flavors: [source]

    - The simplest cables have just power and USB2 pins, and do not support anything other than USB1/2. Cables that do connect all pins are apparently called "full-featured", though these might still not support the higher (10/20Gbps-per-line) speeds.

    - Cables can be passive (direct copper connections), or active (with electronics in the cable to repeat/amplify a signal to allow higher speeds and longer cables).

        - Active cables typically seem to be geared towards (and/or limited to) a particular signalling scheme and/or direction. This means that they are usually not usable for protocols they are not explicitly designed for. This comes down partly to the bitrates used, but also to the exact low-level signalling protocol (e.g. USB3 Gen2, USB4 Gen2 and DP UHBR 10 all use 10Gbps-per-line, but use different encodings, error correction and/or direction, so an active cable might support one, but not the others).

        - Passive cables can generally be used more flexibly, mainly limited to a certain bitrate based on cable quality and length.

    - Cables can also contain an "e-marker" chip, holding info on the cable's capabilities, that can be queried using USB-PD messages on the CC pin. Such a chip is required for 5A operation (all other cables are expected to allow up to 3A) and for all USB4 operation (even the lower-speed 10Gbps-per-line USB4).

    - Cables with an "e-marker" chip but no signal conditioning are still considered passive cables.

- USB4/TB4 requires an e-marker chip in the cable (falling back to USB3 or TB3 without it). Passive cables (including passive TB3 cables) can always be used for USB4 (with the exact speed depending on the cable rating), while active cables can only be used when they are rated for USB4 (so active USB3 cables cannot be used for USB4, but active USB4 cables can be used for USB3). [source]

- USB-C uses the CC pins for detecting a connection and deciding how to set it up. A host (or downstream facing port or sourcing port) has pullups on both CC pins (value depends on available current); a device (or upstream facing port or sinking port) has pulldowns on both pins (value is fixed). Together these form a voltage divider that allows detecting a connection and the maximum current. A cable connects only one of the CC pins (leaving the other floating, or pulling it down with a different resistor value), which allows detecting the orientation of the cable. [source]

- After initial connection, the CC pin is also used for other management communication, such as USB-PD (including setting up alt modes and USB4), or vendor-specific extensions.

- The other CC pin (pulled down inside the cable) is repurposed as VCONN and used to power any active circuitry (including the e-marker) inside the cable.

- USB-C can provide up to 15W (5V×3A) on the VBUS pins with passive negotiation using resistors on the CC pins. USB-PD negotiates actively using data messages on the CC pin, allows up to 100W (20V×5A) on the VBUS pins, and also allows reversing the power direction (e.g. letting a laptop USB-C port receive power instead of supplying it).
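The cable rules listed above can be condensed into a small decision function. This is a sketch under my own simplifications. The `Cable` fields and the function names are hypothetical. The sketch also ignores the per-line speed rating, which decides how fast a passive cable can actually run USB4.

```python
from dataclasses import dataclass


@dataclass
class Cable:
    full_featured: bool  # all high-speed lines connected, not just power + USB2
    e_marker: bool  # has an e-marker chip that can be queried over USB-PD
    active: bool  # has signal conditioning (an e-marker alone does not count)
    rated_usb4: bool = False  # only relevant for active cables
    e_marker_5a: bool = False  # e-marker reports 5A capability


def max_current_a(cable: Cable) -> float:
    """Maximum VBUS current: 5A needs an e-marker saying so, otherwise 3A."""
    return 5.0 if cable.e_marker and cable.e_marker_5a else 3.0


def best_data_mode(cable: Cable) -> str:
    """Highest USB mode usable over this cable (ignoring per-line speed)."""
    if not cable.full_featured:
        return "USB1/2"  # only the USB2 D+/D- pair is wired
    if not cable.e_marker:
        return "USB3"  # USB4 requires an e-marker; fall back to USB3 (or TB3)
    if cable.active and not cable.rated_usb4:
        return "USB3"  # e.g. an active USB3 cable cannot carry USB4
    return "USB4"  # passive e-marked cables, or active cables rated for USB4
```

Three cases illustrate the rules:

- A passive e-marked cable (`Cable(True, True, False)`) comes out as USB4.
- The same cable marked active but not USB4-rated drops to USB3.
- A 5A claim is only honoured when an e-marker is present.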
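The CC detection described above is just a voltage divider, so it is easy to sketch numerically from the sink's side. The resistor values below are the Type-C values as I understand them: Rd = 5.1kΩ, and Rp = 56k/22k/10kΩ to 5V. The decision thresholds are assumptions that you should check against the Type-C specification. The function names are hypothetical.

```python
from typing import Dict, Optional, Tuple

RD_OHMS = 5_100  # sink pulldown (Rd) on each CC pin
# Source pullups (Rp) to a 5V rail, one value per advertised current level.
RP_TO_5V_OHMS: Dict[str, int] = {"default": 56_000, "1.5A": 22_000, "3.0A": 10_000}

# Sink-side decision thresholds in volts (assumed values, check the Type-C spec).
V_CONNECT = 0.2  # below this, nothing is attached on that CC pin
V_1A5 = 0.66  # at or above this, at least 1.5A is advertised
V_3A0 = 1.23  # at or above this, 3.0A is advertised


def cc_voltage(rp_ohms: float, v_pullup: float = 5.0, rd_ohms: float = RD_OHMS) -> float:
    """Voltage on the connected CC pin, formed by the Rp/Rd voltage divider."""
    return v_pullup * rd_ohms / (rp_ohms + rd_ohms)


def sink_detect(v_cc1: float, v_cc2: float) -> Optional[Tuple[int, str]]:
    """Return (CC pin used by the cable, advertised current), or None if detached."""
    # The cable wires up only one CC pin; the other just sees the sink's own Rd
    # (about 0V), so the higher voltage tells us the plug orientation.
    pin, v = (1, v_cc1) if v_cc1 >= v_cc2 else (2, v_cc2)
    if v < V_CONNECT:
        return None
    if v >= V_3A0:
        return pin, "3.0A"
    if v >= V_1A5:
        return pin, "1.5A"
    return pin, "default"
```

With the 10kΩ pullup, the sink sees about 1.69V on the connected pin. It concludes that it may draw 3A, which gives the 15W (5V×3A) mentioned above.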

USB-C alt modes

- USB-C alt modes allow using the pins (in particular the 4 high-speed lines and the lower speed SBU line) for other purposes.

- Alt modes are negotiated actively through the CC pin using USB-PD VDMs (Vendor Defined Messages). Once negotiated, these pins can use different physical signalling, including different directions. This means that e.g. a USB-C-to-DP adapter using DP alt mode can be a mostly passive device, though it does need some logic for the USB-PD negotiation and might need some switches to disconnect the wires until the alt mode is enabled.

- Alt modes are single-hop only (hubs might connect upstream and downstream alt mode channels, but there is no protocol to configure this AFAICT).

- Alt modes are technically separate from the USB protocol (version) supported (i.e. they can be configured without having to do USB communication), but the bandwidth capabilities do introduce some correlation (e.g. DP alt mode 2.0 supports DP 2.0 and might need up to 20Gbps-per-line, so effectively needs a USB4-capable controller, cable and hub/dock).

- DP alt mode (and probably others) can use 1, 2, or all 4 of the high-speed lines (plus the SBU line for the aux channel). When using only 1 or 2 lines, 2 of the remaining lines can still be used for USB3 traffic (using just 1 line for USB is not possible: USB3/4 always needs a full-duplex channel, so 2 or 4 lines). In all cases, the USB2 pins can still be used for USB1/2.

Because in DP alt mode all four lines can be used unidirectionally (unlike USB, which is always full-duplex), the effective bandwidth for driving a display can be twice as much in alt mode as when tunneling DP over USB4 or using DisplayLink over USB3.

In practice though, DP-altmode on devices usually supports only DP1.4 (HBR3 = 8.1Gbps-per-line) for 4x8.1 = 32.4Gbps of total (unidirectional) bandwidth. This is less than TB3/4, with its 20Gbps-per-line for 2x20 = 40Gbps of full-duplex bandwidth. This will change once devices start supporting DP-altmode 2.0 (UHBR20 = 20Gbps-per-line) for 4x20 = 80Gbps of unidirectional bandwidth.
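The bandwidth comparison above is simple arithmetic (lines × Gbps-per-line), and the sketch below reproduces it. I have added two things that are not in the text:

- The DP line-coding overhead: 8b/10b for HBR3 and 128b/132b for UHBR20.
- An estimate of what an uncompressed 4K@60 stream needs, ignoring blanking.

The tunnel entry shows only the raw line rate, because I do not model USB4 encoding or tunneling overhead. All names are hypothetical.

```python
from typing import Dict, Tuple

# Lines carrying display data in one direction, and Gbps per line.
LINKS: Dict[str, Tuple[int, float]] = {
    "DP1.4 alt mode (HBR3)": (4, 8.1),
    "DP2.0 alt mode (UHBR20)": (4, 20.0),
    "TB3/USB4 tunnel (one direction)": (2, 20.0),
}
# Assumption (not from the text above): DP line-code efficiency,
# 8b/10b for HBR3 and 128b/132b for UHBR20.
DP_LINE_CODE: Dict[str, float] = {
    "DP1.4 alt mode (HBR3)": 8 / 10,
    "DP2.0 alt mode (UHBR20)": 128 / 132,
}


def raw_gbps(link: str) -> float:
    """Raw bandwidth in the display direction: lines times per-line rate."""
    lines, per_line = LINKS[link]
    return lines * per_line


def dp_payload_gbps(link: str) -> float:
    """Raw DP bandwidth minus line-coding overhead (DP links only)."""
    return raw_gbps(link) * DP_LINE_CODE[link]


def pixel_gbps(width: int, height: int, refresh_hz: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed active-pixel data rate, ignoring blanking intervals."""
    return width * height * refresh_hz * bits_per_pixel / 1e9
```

This gives 32.4, 80 and 40Gbps raw for the three links. After line coding, HBR3 leaves 25.92Gbps and UHBR20 about 77.6Gbps. A 4K@60 stream at 24 bits per pixel needs roughly 11.9Gbps of active pixel data.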

- It seems that DP alt mode can only be combined with USB3, not TB3 or USB4 (USB4 and DP alt mode both need the SBU pins). Since DP can be tunneled over TB/USB4, there is not much need for DP alt mode when using TB/USB4, except that it could potentially have allowed more bandwidth (2x20Gbps unidirectional DP2.0 plus 1x20Gbps full-duplex TB/USB4).

- HDMI alt mode exists, but is apparently not actually implemented by anyone. USB-C-to-HDMI adapters typically seem to use DP alt mode combined with an active DP-to-HDMI converter. I'm assuming this also holds for the Framework HDMI expansion cards. [source]

- Thunderbolt 3 is also implemented as an alt mode.

- Audio Adapter Accessory Mode allows sending analog audio over a USB-C connector (using the USB2 and sideband pins). This looks like another alt mode, but instead of active negotiation over the CC pin, this mode is detected by just grounding both CC pins, so adapters for this mode can be completely passive. [source]

- Ethernet or PCIe are sometimes mentioned as alt modes, but apparently do not actually exist. [source]
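The alt mode negotiation described in this section can be sketched as the sequence of structured VDM commands a host sends. This is a simplified sketch. It ignores the partner's responses (other than which SVIDs it lists), cable (SOP') discovery and error handling. The command numbers and SVIDs are the values I believe USB-PD and the Linux typec code use: 0xFF01 for DisplayPort and 0x8087 for Intel's Thunderbolt 3 alt mode. The function name is hypothetical.

```python
from typing import List, Tuple

# Structured VDM command numbers used during discovery and mode entry.
DISCOVER_IDENTITY = 1
DISCOVER_SVIDS = 2
DISCOVER_MODES = 3
ENTER_MODE = 4
# DisplayPort-specific commands, sent after entering DP alt mode.
DP_STATUS_UPDATE = 0x10
DP_CONFIGURE = 0x11  # selects e.g. the pin assignment (2 or 4 DP lines)

PD_SID = 0xFF00  # standard ID used for the generic discovery commands
DP_SVID = 0xFF01  # VESA DisplayPort alt mode
TBT3_SVID = 0x8087  # Intel Thunderbolt 3 alt mode


def alt_mode_sequence(partner_svids: List[int], wanted_svid: int) -> List[Tuple[int, int]]:
    """Return the (SVID, command) pairs a host would send, in order (simplified)."""
    messages = [(PD_SID, DISCOVER_IDENTITY), (PD_SID, DISCOVER_SVIDS)]
    if wanted_svid not in partner_svids:
        # Partner did not list this SVID: the pins stay in plain USB use.
        return messages
    messages += [(wanted_svid, DISCOVER_MODES), (wanted_svid, ENTER_MODE)]
    if wanted_svid == DP_SVID:
        messages += [(DP_SVID, DP_STATUS_UPDATE), (DP_SVID, DP_CONFIGURE)]
    return messages
```

Suppose the partner only lists `TBT3_SVID` and the host asks for DP alt mode. Negotiation then stops after the two discovery commands, matching the idea that alt modes are opt-in per port partner.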

USB4 / Thunderbolt 4

[source] and [source] and [source]

- USB4 is again really different from USB3 and looks more like Thunderbolt 3 than USB3.

- Unlike USB1/2/3, USB4 does not itself define any device types; it is a generic bulk data transport that allows tunneling of other protocols, like PCIe, DisplayPort and USB3.

- USB4 uses the 4 USB-C high-speed lines at up to 20Gbps-per-line for 40Gbps full-duplex and 80Gbps total bandwidth.

- USB4 link setup happens using USB-PD messages over the CC pins, falling back to USB3 setup if no USB4 link is detected within a second or so (so in that sense, too, USB4 is more like the Thunderbolt 3 alt mode than USB3). [source]

- USB4 also uses two additional pins (sideband channel) of the USB-C connector for further link initialization and management, so it needs USB-C and cannot be used over USB3-capable USB-A/USB-B/USB-micro connectors and cables. [source]

- Like with TB3, this tunneling happens on a data level: The physical layer signalling is defined by USB4 and different tunneled protocols are mixed in the same bitstream (unlike USB-C alt mode, where individual lines are completely allocated to some alt mode, which also determines the physical signalling used). This also means that DP alt mode and USB4-tunneled-displayport are two different ways to transfer DP over a USB4-capable connection.

- Unlike with TB3, USB2 is not tunneled but still runs in parallel over the USB2 pins.

- Unlike with TB3, USB3 is tunneled directly over USB4 and uses the host USB3 controller with only USB3 hubs in docks (though I guess docks could still choose to include PCIe connected USB controllers). In some cases, this might result in lower total USB bandwidth (10 or 20Gbps shared by all devices) compared to the TB3 approach of (sometimes multiple) USB3 controllers in the dock that are accessed through tunneled PCIe.

- USB4 end devices have most features optional. USB4 hosts require 10Gbps-per-line, tunneled 10Gbps USB3, tunneled DP, and DP altmode (unsure if this requires DP altmode 2.0), leaving 20Gbps-per-line, tunneled 20Gbps USB3, tunneled PCIe, TB3 and other altmodes optional. USB4 hubs require everything, except 20Gbps USB3 and other altmodes. [source]

- Thunderbolt 4 is (unlike Thunderbolt 3) not really a protocol by itself, but is essentially just USB4 with all or most optional features enabled, plus additional certification of compatibility and performance. In particular, TB4 ports must support:

    - 20Gbps-per-line,
    - computer-to-computer networking,
    - PCIe,
    - charging through at least one port,
    - wake-up from sleep,
    - 2x4K@60 or 1x8K@60 display,
    - passive cables up to 2m.

  I have not been able to find the actual specification to see what these things really mean technically (e.g. whether these display resolutions are required over USB4 or over altmode (2.0), whether they must be uncompressed, from which states the wake-up must work, etc.). [source] and [source]

- TB1/2/3 were proprietary protocols, implemented only on Intel chips. USB4 (and also TB4) are (somewhat) more open and can be implemented by multiple vendors. Although I could easily find the USB4 spec, I could not find the TB4 spec, the TB3 spec that is required for compatibility, or the DP alt mode spec that is required for USB4 ports.

- USB4 hubs must also support TB3 for compatibility; controllers and devices may support TB3. [source]

- Different USB3/4 transfer modes have been renamed repeatedly (e.g. USB3.0 became USB3.1 gen1 and then USB3.2 gen1x1). Roughly:

    - gen1 (aka SuperSpeed aka SuperSpeed USB) means 5Gbps-per-line,
    - gen2 (aka SuperSpeed+ aka SuperSpeed USB 10Gbps, or SuperSpeed USB 20Gbps aka USB4 20Gbps for gen2x2) means 10Gbps-per-line,
    - gen3 (aka USB4 40Gbps) means 20Gbps-per-line.

  No suffix or x1 means using only one pair of lines, and x2 means two pairs of lines (so e.g. gen2x2 uses all four lines at 10Gbps-per-line, for 20Gbps full-duplex and 40Gbps of total bandwidth). Also, you can mostly ignore the USB versions (e.g. USB3.1 gen1 and USB3.2 gen1x1 are the same transfer mode), except for USB4 gen2, which is also 10Gbps-per-line, but uses a different encoding. [source]
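Once the marketing names are stripped, the naming scheme above is regular enough to decode mechanically. A minimal sketch follows. `decode_mode` is a hypothetical helper that deliberately ignores the USB version prefix, as suggested above. As a result, it cannot tell USB4 gen2 apart from USB3 gen2, even though their encodings differ.

```python
import re
from typing import Tuple

GBPS_PER_LINE = {1: 5, 2: 10, 3: 20}  # gen1, gen2, gen3


def decode_mode(name: str) -> Tuple[int, int, int]:
    """Decode e.g. "USB3.2 gen2x2" into (Gbps per line, full-duplex Gbps, total Gbps)."""
    m = re.search(r"gen\s*([123])(?:\s*x\s*([12]))?", name, re.IGNORECASE)
    if m is None:
        raise ValueError(f"no genN[xM] transfer mode in {name!r}")
    per_line = GBPS_PER_LINE[int(m.group(1))]
    pairs = int(m.group(2) or 1)  # no suffix (or x1) means one pair of lines
    full_duplex = per_line * pairs  # each pair has one line per direction
    return per_line, full_duplex, 2 * full_duplex
```

For example, `decode_mode("USB3.2 gen2x2")` gives 10Gbps-per-line, 20Gbps full-duplex and 40Gbps total. `"USB3.1 gen1"` and `"USB3.2 Gen 1x1"` decode to the same mode.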
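This section describes two different ways of sharing one USB-C connection. USB4 tunneling mixes several protocols into one bitstream, while an alt mode dedicates whole lines to one protocol. The toy model below makes that contrast concrete. It is only an illustration. The protocol tags, round-robin scheduling, line numbering and function names are my own assumptions, and real USB4 packet formats and scheduling are considerably more involved.

```python
from collections import deque
from typing import Dict, List, Tuple


def tunnel_mux(streams: Dict[str, List[bytes]]) -> List[Tuple[str, bytes]]:
    """USB4/TB3 style: interleave packets of several protocols on shared lines.

    Each packet is tagged with its protocol so the far end can demultiplex;
    the tag stands in for the real packet headers a USB4 link would use.
    """
    queues = {proto: deque(packets) for proto, packets in streams.items()}
    stream = []
    while any(queues.values()):
        for proto, queue in queues.items():  # simple round-robin scheduling
            if queue:
                stream.append((proto, queue.popleft()))
    return stream


def tunnel_demux(stream: List[Tuple[str, bytes]]) -> Dict[str, List[bytes]]:
    """Split a tagged packet stream back into per-protocol packet lists."""
    result: Dict[str, List[bytes]] = {}
    for proto, packet in stream:
        result.setdefault(proto, []).append(packet)
    return result


def alt_mode_lines(dp_lines: int) -> Dict[int, str]:
    """Alt mode style: each of the 4 high-speed lines is dedicated to one protocol."""
    if dp_lines not in (2, 4):
        raise ValueError("this sketch only models 2 or 4 DP lines")
    # Lines not used for DP form one full-duplex USB3 channel.
    return {line: "DP" if line <= dp_lines else "USB3" for line in range(1, 5)}
```

In the tunneled case, DP and PCIe packets share every line and are told apart only by their tags. In `alt_mode_lines(2)`, lines 1 and 2 carry only DP, and lines 3 and 4 carry only USB3.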