These are the ramblings of Matthijs Kooijman, concerning the software he hacks on, hobbies he has and occasionally his personal life.

Most content on this site is licensed under the WTFPL, version 2 (details).


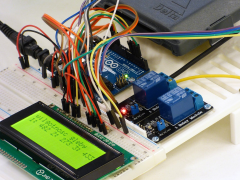

For a customer, I've been looking at RS-485 and MODBUS, two related protocols for transmitting data over longer distances, and the various Arduino libraries that exist to work with them.

They have been working on a project consisting of multiple Arduino boards that have to talk to each other to synchronize their state. Until now, they have been using I²C, but found that this protocol is quite susceptible to noise when used over longer distances (1-2m here). This, combined with some limitations in the AVR hardware and a lack of error handling in the Arduino library that can cause the software to lock up in the face of noise (see also this issue report), makes I²C a bad choice in such environments.

So, I needed something more reliable. This should be a solved problem, right?

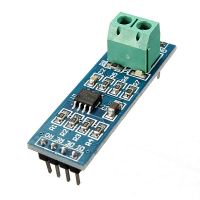

RS-485

A commonly used alternative, also in many industrial settings, is RS-485. This is essentially an asynchronous serial connection (like a UART or RS-232 serial port), except that it uses differential signalling and is a multipoint bus. Differential signalling means two inverted copies of the same signal are sent over two impedance-balanced wires, which allows the receiver to cleverly subtract both signals to cancel out noise (this is also what Ethernet and professional audio signals do). Multipoint means that there can be more than two devices on the same pair of wires, provided that they do not transmit at the same time. When combined with shielded twisted-pair wire, this should produce a very reliable connection over long lengths (up to 1000m should be possible).
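To make the noise-cancellation argument concrete, here is a toy model in C. It is not how a real transceiver works internally: voltages are integer millivolts, and it assumes the noise couples identically into both wires (twisting the pair is what keeps that assumption roughly true in practice). The driver puts the signal on wire A and its inverse on wire B, and the receiver only looks at the difference:

```c
/* Toy model of differential signalling; all values in millivolts.
 * Assumption: noise couples equally into both wires of the pair. */
typedef struct {
    int a; /* voltage on wire A */
    int b; /* voltage on wire B */
} diff_pair;

/* Driver: send the signal on A and its inverted copy on B, then add the
 * same (common-mode) noise to both wires, as a nearby interferer would. */
diff_pair diff_drive(int signal_mv, int noise_mv) {
    diff_pair p;
    p.a = signal_mv + noise_mv;
    p.b = -signal_mv + noise_mv;
    return p;
}

/* Receiver: subtracting the wires cancels the common-mode noise and
 * leaves twice the original signal. */
int diff_receive(diff_pair p) {
    return p.a - p.b;
}
```

With 1500 mV of signal and 4200 mV of noise, wire A alone reads 5700 mV, but diff_receive() still returns 3000 mV, exactly as without noise.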

However, RS-485 by itself is not the whole story: It just specifies the physical layer (the electrical connections, or how to send data), but does not specify any format for the data, nor any way to prevent multiple devices from talking at the same time. For this, you need a data link or arbitration protocol running on top of RS-485.

MODBUS

A quick look around shows that MODBUS is a very commonly used protocol on top of RS-485 (but also on top of TCP/IP or other links) that handles the data link layer (how to send data and when to send). This part is simple: There is a single master that initiates all communication, and multiple slaves that only reply when asked something. Each slave has an address (that must be configured manually beforehand), the master needs no address.
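As an illustration of what such a library has to produce on the wire, here is a minimal C sketch of a MODBUS RTU (the binary serial variant) request: slave address, function code, big-endian data fields and a CRC-16 that is sent low byte first. Function code 0x03 (Read Holding Registers) serves as the example; the function names are my own and not taken from any of the libraries discussed below.

```c
#include <stddef.h>
#include <stdint.h>

/* CRC-16/MODBUS: reflected polynomial 0xA001, initial value 0xFFFF. */
uint16_t modbus_crc16(const uint8_t *buf, size_t len) {
    uint16_t crc = 0xFFFF;
    for (size_t i = 0; i < len; i++) {
        crc ^= buf[i];
        for (int bit = 0; bit < 8; bit++) {
            if (crc & 1)
                crc = (crc >> 1) ^ 0xA001;
            else
                crc >>= 1;
        }
    }
    return crc;
}

/* Build a "Read Holding Registers" (0x03) request for the given slave.
 * out must have room for 8 bytes. Returns the frame length. */
size_t modbus_build_read_holding(uint8_t *out, uint8_t slave,
                                 uint16_t start, uint16_t count) {
    out[0] = slave;
    out[1] = 0x03;
    out[2] = (uint8_t)(start >> 8);    /* data fields are big-endian... */
    out[3] = (uint8_t)(start & 0xFF);
    out[4] = (uint8_t)(count >> 8);
    out[5] = (uint8_t)(count & 0xFF);
    uint16_t crc = modbus_crc16(out, 6);
    out[6] = (uint8_t)(crc & 0xFF);    /* ...but the CRC goes low byte first */
    out[7] = (uint8_t)(crc >> 8);
    return 8;
}
```

For slave 1, start address 0 and 10 registers this yields the bytes 01 03 00 00 00 0A C5 CD. A receiver can validate a frame by running the same CRC over all bytes including the trailing CRC: for an intact frame the result is 0.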

MODBUS also specifies a simple protocol that can be used to read and write addressed bits ("Coils" and "Inputs") and addressed registers, which would be pretty perfect for the use case I'm looking at now.
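Registers are 16-bit values sent big-endian, while coils and inputs are packed eight to a byte. A small C sketch of that packing as used in a Read Coils (0x01) reply, where the first requested coil ends up in the least significant bit of the first data byte (the helper name is hypothetical):

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Pack coil states (one per array element, nonzero = on) into bytes as in
 * a MODBUS "Read Coils" reply: coil i goes to bit (i % 8) of byte (i / 8),
 * and unused high bits of the last byte are zero.
 * Returns the number of bytes written to out. */
size_t modbus_pack_bits(const uint8_t *bits, size_t count, uint8_t *out) {
    size_t nbytes = (count + 7) / 8;
    memset(out, 0, nbytes);
    for (size_t i = 0; i < count; i++) {
        if (bits[i])
            out[i / 8] |= (uint8_t)(1u << (i % 8));
    }
    return nbytes;
}
```

Packing the 19 coil states 1,0,1,1,0,0,1,1, 1,1,0,1,0,1,1,0, 1,0,1 gives the three bytes CD 6B 05.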

Finding an Arduino library

So, I have some RS-485 transceivers (which translate regular UART to RS-485) and just need some Arduino library to handle the MODBUS protocol for me. A quick Google search shows there are quite a few of them (never a good sign). A closer look shows that none of them are really good...

There are some more detailed notes per library below, but overall I see the following problems:

- Most libraries are very limited in what serial ports they can use. Some are hardcoded to a single serial port, some support running on arbitrary HardwareSerial instances (and sometimes also SoftwareSerial instances), but only two libraries actually support running on arbitrary Stream instances (while this is pretty much the use case that Stream was introduced for).

- All libraries handle writes and reads to coils and registers automatically by updating the relevant memory locations, which is nice. However, none of them actually support notifying the sketch of such reads and writes (one has a return value that indicates that something was read or written, but no details), which means that the sketch has to continuously check all register values and update them as needed. It also means that the number of registers/coils is limited by the available RAM: you cannot have virtual registers (i.e. writes and reads that are handled by a function rather than a bit of RAM).

- A lot of them are either always blocking in the master, or require manually parsing replies (or both).

Writing an Arduino library?

Ideally, I would like to see a library:

- That can be configured using a Stream instance and an (optional) TX enable pin.

- That has a separation between the MODBUS application protocol and the RS-485-specific data link protocol, so it can be extended to other transports (e.g. TCP/IP) as well.

- Where the master has both synchronous (blocking) and asynchronous request methods. The xbee-arduino library, which also does serial request-response handling, would probably serve as a good example of how to combine these in a powerful API.

- Where the slave can have multiple areas defined (e.g. a block of 16 registers starting at address 0x10). Each area can have some memory allocated that will be read or written directly, or a callback function to do the reading or writing. In both cases, a callback that is called after something was read or written (passing the area pointer and address or something) can be configured too. Areas should probably be allowed to overlap, which also allows having a "fallback" (virtual) area that covers all other addresses. These areas should be modeled as objects that are directly accessible to the sketch, so the sketch can read and write the data without having to do linked-list lookups and without needing to know the area-to-address mapping.

- That supports sending and receiving raw messages as well (to support custom function codes).

- That does not do any heap allocation (or at least allows running with static allocations only). This can typically be done using static (global) variables allocated by the sketch that are connected as a linked list in the library.
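To make the area idea a bit more concrete, here is a rough C sketch of how such areas could be declared without any heap allocation. Everything in it (type and function names, the rule that later-registered areas take precedence) is hypothetical and not taken from an existing library; an actual Arduino library would probably wrap this in C++ classes.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical design sketch: register areas allocated statically by the
 * sketch and chained into an intrusive linked list (no heap needed). */
typedef struct modbus_area modbus_area;

struct modbus_area {
    uint16_t start;    /* first register address covered by this area */
    uint16_t count;    /* number of registers in the area */
    uint16_t *data;    /* backing RAM, or NULL for a virtual area */
    /* Handlers for virtual areas (only used when data == NULL). */
    int (*read_cb)(modbus_area *area, uint16_t offset, uint16_t *value);
    int (*write_cb)(modbus_area *area, uint16_t offset, uint16_t value);
    /* Optional notification after the master wrote a register. */
    void (*written_cb)(modbus_area *area, uint16_t offset);
    modbus_area *next; /* list link, managed by the library */
};

static modbus_area *area_list = NULL;

/* Register an area. Later areas are searched first, so a catch-all
 * "fallback" area should be registered before more specific ones. */
void modbus_add_area(modbus_area *area) {
    area->next = area_list;
    area_list = area;
}

/* Find the first area covering addr, or NULL (a real slave would then
 * reply with an "illegal data address" exception). */
static modbus_area *modbus_find_area(uint16_t addr) {
    for (modbus_area *a = area_list; a != NULL; a = a->next)
        if (addr >= a->start && addr - a->start < a->count)
            return a;
    return NULL;
}

/* Handle a master write to one register. Returns 0 on success, -1 on error. */
int modbus_handle_write(uint16_t addr, uint16_t value) {
    modbus_area *a = modbus_find_area(addr);
    if (a == NULL)
        return -1;
    uint16_t offset = (uint16_t)(addr - a->start);
    if (a->data != NULL)
        a->data[offset] = value;
    else if (a->write_cb == NULL || a->write_cb(a, offset, value) != 0)
        return -1;
    if (a->written_cb != NULL)
        a->written_cb(a, offset); /* tell the sketch something changed */
    return 0;
}

/* Handle a master read of one register. Returns 0 on success, -1 on error. */
int modbus_handle_read(uint16_t addr, uint16_t *value) {
    modbus_area *a = modbus_find_area(addr);
    if (a == NULL)
        return -1;
    uint16_t offset = (uint16_t)(addr - a->start);
    if (a->data != NULL) {
        *value = a->data[offset];
        return 0;
    }
    if (a->read_cb == NULL)
        return -1;
    return a->read_cb(a, offset, value);
}
```

The sketch keeps direct access to its own arrays and area objects, so only the library walks the list when a request comes in, and a written_cb replaces polling all registers for changes.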

I suspect that given my requirements, this would mean starting a new library from scratch (using an existing library as a starting point would always mean significant redesigning, which is probably more work than it's worth). Maybe some parts (e.g. specific things like packet formatting and parsing) can be reused, though.

Of course, I do not really have time for such an endeavor and the customer for which I started looking at this certainly has no budget in this project for such an investment. This means I will probably end up improvising with the MCCI library, or use some completely different or custom protocol instead of MODBUS (though the Arduino library offerings in this area also seem limited...). Maybe CANBus?

However, if you also find yourself in the same situation, maybe my above suggestions can serve as inspiration (and if you need this library and have some budget to get it written, feel free to contact me).

Existing libraries

So, here's the list of libraries I found.

https://github.com/arduino-libraries/ArduinoModbus

- Official library from Arduino.

- Master and slave.

- Uses the RS485 library to communicate, but does not offer any way to pass a custom RS485 instance, so it is effectively hardcoded to a specific serial port.

- Offers only single value reads and writes.

- Slave stores values internally and reads/writes directly from those, without any callback or way to detect that communication has happened.

https://github.com/4-20ma/ModbusMaster

- Master-only library.

- Latest commit in 2016.

- Supports any serial port through Stream objects.

- Supports idle/pre/post-transmission callbacks (no parameters), used to enable/disable the transceiver.

- Supports single and multiple read/writes.

- Replies are returned in a (somewhat preprocessed) buffer, to be further processed by the caller.

https://github.com/andresarmento/modbus-arduino

- Slave-only library.

- Last commit in 2015.

- Supports single and multiple read/writes.

- Split into generic ModBus library along with extra transport-specific libraries (TCP, serial, etc.).

- Supports passing HardwareSerial pointers and (with a macro modification to the library) SoftwareSerial pointers (but uses a Stream pointer internally already).

- Slave stores values in a linked list (heap-allocated), values are written through write methods (linked list elements are not exposed directly, which is a pity).

- Slave reads/writes directly from internal linked list, without any callback or way to detect that communication has happened.

- https://github.com/vermut/arduino-ModbusSerial is a fork that has some Due-specific fixes.

https://github.com/lucasso/ModbusRTUSlaveArduino

- Fork of https://github.com/Geabong/ModbusRTUSlaveArduino (6 additional commits).

- Slave-only library.

- Last commit in 2018.

- Supports passing HardwareSerial pointers.

- Slave stores values external to the library in user-allocated arrays. These arrays are passed to the library as "areas" with arbitrary starting addresses, which are kept in the library in a linked list (heap-allocated).

- Slave reads/writes directly from internal linked list, without any callback or way to detect that communication has happened.

https://github.com/mcci-catena/Modbus-for-Arduino

- Master and slave.

- Last commit in 2019.

- Fork of old (2016) version of https://github.com/smarmengol/Modbus-Master-Slave-for-Arduino with significant additional development.

- Supports passing arbitrary serial (or similar) objects using a templated class.

- Slave stores values external to the library in a single array (so all requests index the same data, either word or bit-indexed), which is passed to the poll() function.

- On Master, sketch must create requests somewhat manually (into a struct, which is encoded to a byte buffer automatically), and replies are returned as a raw data buffer. Requests and replies are non-blocking, so polling for replies is somewhat manual.

https://github.com/angeloc/simplemodbusng

- Master and slave.

- Last commit in 2019.

- Hardcodes Serial object, supports SoftwareSerial in slave through duplicated library.

- Supports single and multiple read/writes of holding registers only (no coils or input registers).

- Slave stores values external to the library in a single array, which is passed to the update function.

https://github.com/smarmengol/Modbus-Master-Slave-for-Arduino

- Master and slave.

- Last commit in 2020.

- Supports any serial port through Stream objects.

- Slave stores values external to the library in a single array (so all requests index the same data, either word or bit-indexed), which is passed to the poll() function.

- On Master, sketch must create requests somewhat manually (into a struct, which is encoded to a byte buffer automatically), and replies are returned as a raw data buffer. Requests and replies are non-blocking, so polling for replies is somewhat manual.

https://github.com/asukiaaa/arduino-rs485

- Unlike what the name suggests, this actually implements ModBus.

- Master and slave.

- Started very recently (October 2020), so by the time you read this, maybe things have already improved.

- Very simple library, just handles modbus framing, the contents of the modbus packets must be generated and parsed manually.

- Slave only works if you know the type and length of queries that will be received.

- Supports working on HardwareSerial objects.

https://gitlab.com/creator-makerspace/rs485-nodeproto

- This is not a MODBUS library, but a very thin layer on top of RS485 that does collision avoidance and detection that can be used to implement a multi-master system.

- Last commit in 2016, repository archived.

- This one is notable because it gets the Stream-based configuration right and seems well-written. It does not implement MODBUS or a similarly high-level protocol, though.

https://github.com/MichaelJonker/HardwareSerialRS485

- Also not MODBUS, but also a collision avoidance/detection scheme on top of RS485 for multi-master bus.

- Last commit in 2015.

- Replaces HardwareSerial rather than working on top of it, requiring a customized boards.txt.

https://www.airspayce.com/mikem/arduino/RadioHead/

- This is not a MODBUS library, but a communication library for data communication over radio. It also supports serial connections (and is thus an easy way to get framing, checksumming, retransmissions and routing over serial).

- Seems to only support point-to-point connections, lacking an internal way to disable the RS485 driver when not transmitting (but maybe it can be hacked internally).

Update 2020-06-26: Added smarmengol/Modbus-Master-Slave-for-Arduino to the list

Update 2020-10-07: Added asukiaaa/arduino-rs485 to the list

Or: Forcing Linux to use the USB HID driver for a non-standards-compliant USB keyboard.

For an interactive art installation by the Spullenmannen, a friend asked me to have a look at an old paint mixing terminal that he wanted to use. The terminal is essentially a small computer, in a nice industrial-looking sealed casing, with a (touch?) screen, keyboard and touchpad. It was by "Lacour" and I think has been used to control paint mixing machines.

They had already gotten Linux running on the system, but could not get the keyboard to work and asked me if I could have a look.

The keyboard did work in the BIOS and grub (which also uses the BIOS), so we know it worked. Also, the BIOS seemed pretty standard, so it was unlikely that it used some very non-standard protocol or driver and I guessed that this was a matter of telling Linux which driver to use and/or where to find the device.

Inside the machine, it seemed the keyboard and touchpad were separate devices, controlled by some off-the-shelf microcontroller chip (probably with some custom software inside). These devices were connected to the main motherboard using a standard 10-pin expansion header intended for external USB ports, so it seemed likely that these devices were USB devices.

Closer look at the USB devices

And indeed, looking through the lsusb output, I noticed two unknown devices in the list:

```
# lsusb
Bus 002 Device 003: ID ffff:0001
Bus 002 Device 002: ID 0000:0003
(...)
```

These have USB vendor ids of 0x0000 and 0xffff, which I'm pretty sure are not official USB-consortium-assigned identifiers (probably invalid or reserved even), so perhaps that's why Linux is not using these properly?

Running lsusb with the --tree option allows seeing the physical port structure, but also shows which drivers are bound to which interfaces:

```
# lsusb --tree
/:  Bus 02.Port 1: Dev 1, Class=root_hub, Driver=uhci_hcd/2p, 12M
    |__ Port 1: Dev 2, If 0, Class=Human Interface Device, Driver=usbhid, 12M
    |__ Port 2: Dev 3, If 0, Class=Human Interface Device, Driver=, 12M
(...)
```

This shows that the keyboard (Dev 3) indeed has no driver, but the touchpad (Dev 2) is already bound to usbhid. And indeed, running cat /dev/input/mice and then moving over the touchpad shows that some output is being generated, so the touchpad was already working.

Looking at the detailed USB descriptors for these devices shows that they are both advertised as supporting the HID interface (Human Interface Device), which is the default protocol for keyboards and mice nowadays:

```
# lsusb -d ffff:0001 -v
Bus 002 Device 003: ID ffff:0001
Device Descriptor:
  bLength                18
  bDescriptorType         1
  bcdUSB               2.00
  bDeviceClass          255 Vendor Specific Class
  bDeviceSubClass         0
  bDeviceProtocol         0
  bMaxPacketSize0         8
  idVendor           0xffff
  idProduct          0x0001
  bcdDevice            0.01
  iManufacturer           1 Lacour Electronique
  iProduct                2 ColorKeyboard
  iSerial                 3 SE.010.H
(...)
    Interface Descriptor:
      bLength                 9
      bDescriptorType         4
      bInterfaceNumber        0
      bAlternateSetting       0
      bNumEndpoints           1
      bInterfaceClass         3 Human Interface Device
      bInterfaceSubClass      1 Boot Interface Subclass
      bInterfaceProtocol      1 Keyboard
(...)

# lsusb -d 0000:0003 -v
Bus 002 Device 002: ID 0000:0003
Device Descriptor:
  bLength                18
  bDescriptorType         1
  bcdUSB               2.00
  bDeviceClass            0
  bDeviceSubClass         0
  bDeviceProtocol         0
  bMaxPacketSize0         8
  idVendor           0x0000
  idProduct          0x0003
  bcdDevice            0.00
  iManufacturer           1 Lacour Electronique
  iProduct                2 Touchpad
  iSerial                 3 V2.0
(...)
    Interface Descriptor:
      bLength                 9
      bDescriptorType         4
      bInterfaceNumber        0
      bAlternateSetting       0
      bNumEndpoints           1
      bInterfaceClass         3 Human Interface Device
      bInterfaceSubClass      1 Boot Interface Subclass
      bInterfaceProtocol      2 Mouse
(...)
```

So, that should make it easy to get the keyboard working: Just make sure the usbhid driver is bound to it and that driver will be able to figure out what to do based on these descriptors. However, apparently something is preventing this binding from happening by default.

Looking back at the USB descriptors above, one interesting difference is that the keyboard has bDeviceClass set to "Vendor Specific", whereas the touchpad has it set to 0, which means "Look at interface descriptors". So that seems the most likely reason why the keyboard is not working, since "Vendor Specific" essentially means that the device might not adhere to any of the standard USB protocols and the kernel will probably not start using this device unless it knows what kind of device it is based on the USB vendor and product id (but since those are invalid, these are unlikely to be listed in the kernel).

Binding to usbhid

So, we need to bind the keyboard to the usbhid driver. I know of two ways to do so, both through sysfs.

You can assign extra USB vid/pid pairs to a driver through the new_id sysfs file. In this case, this did not work somehow:

```
# echo ffff:0001 > /sys/bus/usb/drivers/usbhid/new_id
bash: echo: write error: Invalid argument
```

At this point, I should have stopped and looked up the right syntax used for new_id, since this was actually the right approach, but I was using the wrong syntax (see below). Instead, I tried some other stuff first.

The second way to bind a driver is to specify a specific device, identified by its sysfs identifier:

```
# echo 2-2:1.0 > /sys/bus/usb/drivers/usbhid/bind
bash: echo: write error: No such device
```

The device identifier used here (2-2:1.0) is a directory name below /sys/bus/usb/devices and is built like <bus>-<port>:<config>.<interface> (so the 1 is the configuration number). You can find this info in the lsusb --tree output:

```
/:  Bus 02.Port 1: Dev 1, Class=root_hub, Driver=uhci_hcd/2p, 12M
    |__ Port 2: Dev 3, If 0, Class=Human Interface Device, Driver=, 12M
```
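Since these names are so regular, they can be taken apart with a single sscanf() call. A small C sketch, assuming names of the form <bus>-<port>[.<port>...]:<config>.<interface> (the port part becomes a dotted path when hubs are involved, e.g. 1-1.4:1.0); the function name is hypothetical:

```c
#include <stdio.h>

/* Split a USB interface name as found in /sys/bus/usb/devices (e.g. "2-2:1.0")
 * into bus number, port path, configuration and interface number.
 * ports must point to at least 32 bytes. Returns 1 on success, 0 otherwise. */
int parse_usb_intf_name(const char *name, int *bus, char *ports,
                        int *config, int *intf) {
    /* %31[...] reads the port path (digits and dots) up to the ':' */
    return sscanf(name, "%d-%31[0123456789.]:%d.%d",
                  bus, ports, config, intf) == 4;
}
```

Device directories without an interface part (such as plain 2-2) do not parse, which matches the fact that bind expects an interface name here.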

I knew that the syntax I used for the device id was correct, since I could use it to unbind and rebind the usbhid driver from the touchpad. I suspect that there is some probe mechanism in the usbhid driver that runs after you bind the driver, which tests the device to see if it is compatible, and that mechanism rejects it.

How does the kernel handle this?

As I usually do when I cannot get something to work, I dive into the source code. I knew that Linux device/driver association usually works with a driver-specific matching table (that tells the underlying subsystem, such as the usb subsystem in this case, which devices can be handled by a driver) or probe function (which is a bit of driver-specific code that can be called by the kernel to probe whether a device is compatible with a driver). There is also configuration based on Device Tree, but AFAIK this is only used in embedded platforms, not on x86.

Looking at the usbhid_probe() and usb_kbd_probe() functions, I did not see any conditions that would not be fulfilled by this particular USB device. The match table for usbhid also only matches the interface class and not the device class. The same goes for the modules.alias file, which I read might also be involved (though I am not sure how):

```
# cat /lib/modules/*/modules.alias|grep usbhid
alias usb:v*p*d*dc*dsc*dp*ic03isc*ip*in* usbhid
```

So, the failing check must be at a lower level, probably in the usb subsystem.
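Such alias lines are shell-style wildcard patterns that are matched against a device's modalias string. Using POSIX fnmatch(), a quick C check confirms that the usbhid alias does match the keyboard. The keyboard's modalias below is my reconstruction from the lsusb output above (on a real system it can be read from /sys/bus/usb/devices/2-2:1.0/modalias), so treat it as an assumption:

```c
#include <fnmatch.h>

/* usbhid alias from modules.alias: anything goes, except that the
 * interface class ("ic") must be 03, i.e. HID. */
static const char usbhid_alias[] = "usb:v*p*d*dc*dsc*dp*ic03isc*ip*in*";

/* Returns 1 if the given USB interface modalias matches the usbhid alias. */
int matches_usbhid_alias(const char *modalias) {
    return fnmatch(usbhid_alias, modalias, 0) == 0;
}
```

For "usb:vFFFFp0001d0001dcFFdsc00dp00ic03isc01ip01in00" this returns 1: the Vendor Specific device class (dcFF) is simply covered by a wildcard, consistent with the conclusion that the alias is not what blocks the keyboard.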

Digging a bit further, I found the usb_match_one_id_intf() function, which is the core of matching USB drivers to USB device interfaces. And indeed, it says:

```c
/* The interface class, subclass, protocol and number should never be
 * checked for a match if the device class is Vendor Specific,
 * unless the match record specifies the Vendor ID. */
```

So, the entry in the usbhid table is being ignored since it matches only the interface, while the device class is "Vendor Specific". But how to fix this?
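The rule from that comment can be modeled in a few lines of C. This is a heavily simplified, hypothetical model, not the kernel's code: it only tracks vendor ID, device class and interface class (the kernel also checks product ID, subclass, protocol and more), but it shows why the static usbhid entry fails for the keyboard while an entry that names the vendor ID would succeed:

```c
#include <stdbool.h>
#include <stdint.h>

#define USB_CLASS_VENDOR_SPEC 0xff

/* Hypothetical, simplified match-table entry. */
struct match_entry {
    bool match_vendor;     /* entry specifies a vendor ID */
    uint16_t vendor;
    bool match_intf_class; /* entry specifies an interface class */
    uint8_t intf_class;
};

/* Simplified device/interface description (values from lsusb -v). */
struct usb_intf_info {
    uint16_t vendor;
    uint8_t device_class;  /* bDeviceClass */
    uint8_t intf_class;    /* bInterfaceClass */
};

bool entry_matches(const struct match_entry *e, const struct usb_intf_info *d) {
    if (e->match_vendor && e->vendor != d->vendor)
        return false;
    if (e->match_intf_class) {
        /* Interface fields never match on a Vendor Specific device,
         * unless the entry also specifies the vendor ID. */
        if (d->device_class == USB_CLASS_VENDOR_SPEC && !e->match_vendor)
            return false;
        if (e->intf_class != d->intf_class)
            return false;
    }
    return true;
}
```

Fed with the keyboard (vendor 0xffff, device class 255, interface class 3), an interface-class-only entry like usbhid's fails, while the touchpad (device class 0) matches it. An entry naming vendor 0xffff matches the keyboard.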

A little further up the call stack is a bit of code that matches a driver to a USB device or interface. This has two sources: The static table from the driver source code, and a dynamic table that can be filled with (hey, we know this part!) the new_id file in sysfs. So that suggests that if we can get an entry into this dynamic table that matches the vendor id, it should work even with a "Vendor Specific" device class.

Back to new_id

Looking further at how this dynamic table is filled, I found the code that handles writes to new_id, and it parses its input like this:

```c
fields = sscanf(buf, "%x %x %x %x %x", &idVendor, &idProduct, &bInterfaceClass, &refVendor, &refProduct);
```

In other words, it expects space-separated values, rather than just a colon-separated vid:pid pair. Reading on in the code shows that only the first two (vid/pid) are required, the rest is optional. Trying that actually works right away:

```
# echo ffff 0001 > /sys/bus/usb/drivers/usbhid/new_id
# dmesg
(...)
[ 5011.088134] input: Lacour Electronique ColorKeyboard as /devices/pci0000:00/0000:00:1d.1/usb2/2-2/2-2:1.0/0003:FFFF:0001.0006/input/input16
[ 5011.150265] hid-generic 0003:FFFF:0001.0006: input,hidraw3: USB HID v1.11 Keyboard [Lacour Electronique ColorKeyboard] on usb-0000:00:1d.1-2/input0
```
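Why the first attempt failed can be reproduced in userspace with the same format string. In this C sketch (the wrapper function is mine, not kernel code), "ffff:0001" yields only one field, because %x stops at the colon and the next %x cannot parse it. The kernel rejects input that yields fewer than two fields, which bash reported as "Invalid argument":

```c
#include <stdio.h>

/* Parse a new_id string using the kernel's format string. Returns the
 * number of fields converted; fewer than two means the write is refused. */
int parse_new_id(const char *buf, unsigned int *vendor, unsigned int *product) {
    unsigned int intf_class = 0, ref_vendor = 0, ref_product = 0;
    return sscanf(buf, "%x %x %x %x %x", vendor, product,
                  &intf_class, &ref_vendor, &ref_product);
}
```

"ffff 0001\n" (what echo writes) yields two fields, vendor 0xffff and product 0x0001, which is enough.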

After this, I found I can now use the unbind file to unbind the usbhid driver again, and bind to rebind it. So it seems that using bind indeed still goes through the probe/match code, which previously failed but, with the entry in the dynamic table, now works.

Making this persistent

So nice that it works, but this dynamic table will be lost on a reboot. How to make it persistent? I can just drop this particular line into the /etc/rc.local startup script, but that does not feel so elegant (it will probably work, since it only needs the usbhid module to be loaded and should work even when the USB device is not known/enumerated yet).

However, as suggested by this post, you can also use udev to run this command at the moment the USB device is "added" (i.e. enumerated by the kernel). To do so, simply drop a file in /etc/udev/rules.d:

```
$ cat /etc/udev/rules.d/99-keyboard.rules
# Integrated USB keyboard has invalid USB VIDPID and also has bDeviceClass=255,
# causing the hid driver to ignore it. This writes to sysfs to let the usbhid
# driver match the device on USB VIDPID, which overrides the bDeviceClass ignore.
# See also:
# https://unix.stackexchange.com/a/165845
# https://github.com/torvalds/linux/blob/bf3bd966dfd7d9582f50e9bd08b15922197cd277/drivers/usb/core/driver.c#L647-L656
# https://github.com/torvalds/linux/blob/3039fadf2bfdc104dc963820c305778c7c1a6229/drivers/hid/usbhid/hid-core.c#L1619-L1623
ACTION=="add", ATTRS{idVendor}=="ffff", ATTRS{idProduct}=="0001", RUN+="/bin/sh -c 'echo ffff 0001 > /sys/bus/usb/drivers/usbhid/new_id'"
```

And with that, the keyboard works automatically at startup. Nice :-)

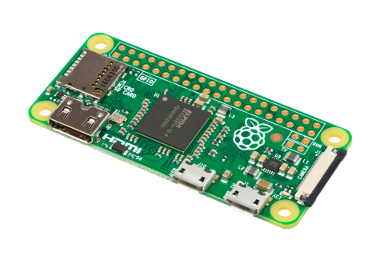

TL;DR: This post describes an easy way to add a power button to a Raspberry Pi that:

- Only needs a button and wires, no other hardware components.

- Allows graceful shutdown and powerup.

- Only needs modification of config files and does not need a dedicated daemon to read GPIO pins.

There are two caveats:

- This shuts down in the same way as shutdown -h now or halt does. It does not completely cut the power (like some hardware add-ons do).

- To allow powerup, the I²C SCL pin (aka GPIO3) must be used, conflicting with externally added I²C devices.

If you use Raspbian stretch 2017.08.16 or newer, all that is required is to add a line to /boot/config.txt:

```
dtoverlay=gpio-shutdown,gpio_pin=3
```

Make sure to reboot after adding this line. If you need to use a different GPIO, or different settings, look up gpio-shutdown in the docs.

Then, if you connect a pushbutton between GPIO3 and GND (pins 5 and 6 on the 40-pin header), you can let your Raspberry Pi shut down and start up using this button.

If you use an original Pi 1 B (non-plus) revision 1.0 (without mounting holes), pin 5 will be GPIO1 instead of GPIO3 and you will need to specify gpio_pin=1 instead. The newer revision 2.0 (with 2 mounting holes in the board) and all other Rpi models do have GPIO3 and work as above.

All this was tested on a Rpi Zero W, Rpi B (rev 1.0 and 2.0) and a Rpi B+.

If you have an older Raspbian version, or want to know how this works, read on below.

Powering down your pi

To gracefully power down a Raspberry Pi (or any Linux system), it has to be shut down before cutting power. On the rpi, which has no power button, this means using SSH to log in and running a shutdown command. Or, as everybody usually does, cutting the power and accepting the risk of data corruption.

Googling around shows a ton of solutions to this problem, where a button is connected to a GPIO pin. The GPIO pin is monitored and when it changes, a shutdown command is given. However, all of these seem to involve a custom daemon, usually written in Python, to monitor the GPIO pin. A separate daemon just for handling the powerbutton does not sound great, and if it is not apt-get installable, it seems too fragile for my tastes. Ideally, this should be handled by standard system components only.

Another thing is that a lot of these tutorials recommend wiring a pullup or pulldown resistor along with the button, while the raspberry pi has builtin pullups and pulldowns on all of its I/O pins which can just as easily be used.

It turns out that systemd's power key handling, combined with the kernel gpio-keys driver and a devicetree overlay, can be used to handle graceful shutdown completely with existing system components.

Shutdown using systemd-logind

Having a shutdown button connected to a GPIO pin is probably not so uncommon, so I Googled some more. I came across the 70-power-switch.rules udev file from systemd-logind, which adds the "power-switch" tag to some kernel event sources representing dedicated power buttons. Since v225, it does this for selected GPIO sources as well (commit). A bit of digging shows that:

- The udev rules add the "power-switch" tag to selected devices. Udev does not use these tags itself, but other programs can.

- The "power-switch" udev tag causes systemd-logind to open the event source as a possible source of power events.

- When a KEY_POWER keypress is received, systemd-logind initiates a shutdown. It also handles some suspend-related keys.

- Since last week, 70-power-switch.rules tags all event sources as "power-switch" (commit) and lets systemd-logind figure out which could potentially fire KEY_POWER events (commit) and monitor only those (though this change does not really change things for this use case).
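For illustration, what logind ultimately reacts to is a KEY_POWER press in the evdev event stream of a tagged device. The C sketch below is not logind's code, just a minimal reader built on the standard struct input_event from <linux/input.h>; the function names are hypothetical. KEY_POWER has code 116, the same number that appears in the ATTRS{keys}=="116" udev rule for older images.

```c
#include <linux/input.h>
#include <stdbool.h>
#include <unistd.h>

/* True for a key-down of KEY_POWER. In evdev, value 1 is a press,
 * 0 a release and 2 an autorepeat. */
bool is_power_key_press(const struct input_event *ev) {
    return ev->type == EV_KEY && ev->code == KEY_POWER && ev->value == 1;
}

/* Read events from an evdev file descriptor (e.g. an opened
 * /dev/input/eventN) until a power key press arrives.
 * Returns 0 on a press, -1 on a read error or end of file. */
int wait_for_power_key(int fd) {
    struct input_event ev;
    for (;;) {
        ssize_t n = read(fd, &ev, sizeof ev);
        if (n != (ssize_t)sizeof ev)
            return -1;
        if (is_power_key_press(&ev))
            return 0;
    }
}
```

Running something like this as its own daemon is exactly what this approach avoids; the sketch only shows what the gpio-keys driver hands to logind.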

Linux gpio-keys driver

So, how to let a GPIO pin generate a KEY_POWER event? The first logind commit linked above has an example device tree snippet that sets up the kernel gpio-keys driver, which does exactly that: Generate key events based on GPIO changes.

Normally, the devicetree is fixed when compiling the kernel, but using a devicetree overlay (see the Rpi device tree docs) we can insert extra configuration at boot time. I found an example that ties multiple buttons to GPIO pins and configures them as a tiny keyboard. Then I found an example in the gpio-button package that does the same with a single button and also shows how to configure a pulldown on the pin.

I've created a devicetree overlay based on that example, which sets up the gpio-keys driver with a single GPIO to trigger a KEY_POWER event. The overlay is just a text file and I added elaborate comments, so look inside if you are curious.

This overlay was merged into the official kernel repository and is shipped in Raspbian images from 2017.08.16 onwards. See below for instructions on manually compiling and installing this overlay on older images.

Waking up

With the above, you get a shutdown button: Press it to initiate a clean shutdown procedure, after which the raspberry pi will turn off (note that it does not completely cut power, that would require additional hardware. Instead, the system goes into a very deep sleep configuration while still drawing some current).

After shutdown, starting the system back up means removing and reinserting the USB cable to momentarily cut power. This raises the question: Can you not start the system again through one of the GPIO pins?

I found one post that pointed out the RUN pin, present on Rpi 2 and up, which can be shorted to GND to reset the CPU (without clean shutdown!), but can also power the system up. However, having two buttons, along with the risk of accidentally pressing reset instead of shutdown did not seem appealing to me.

After more digging I found a forum post saying that shorting GPIO3 or GPIO1 to GND wakes up the Rpi after shutdown. Official docs on this feature seem to be lacking; I found one note on the elinux.org wiki saying bootcode.bin (version 12/04/2012 and later) puts the system in sleep mode, and starts the system when GPIO1 or GPIO3 goes low.

Conveniently, all Rpi versions either expose GPIO3 or GPIO1 at pin 5 of their 26-pin or 40-pin header, so that pin can always be used for waking up.

Since GPIO3/GPIO1 is also a normal IO pin, this is perfect: By configuring the shutdown handling on GPIO3 or GPIO1, the same button can be used to shut down and wake up. Note that GPIO3 and GPIO1 are also I²C pins, so if you need to also connect I²C devices, another pin must be used for shutdown and you cannot use the wakeup feature. Also note that GPIO3 (and GPIO2) have external pullups, at least on the Rpi Zero.

Setting this up on older Raspbian versions

On Raspbian versions older than 2017.08.16, two additional steps need to be taken.

First, older images do not include the overlay yet, so you will need to get and build the DT overlay yourself. Download the devicetree overlay file. The easiest is to run wget on the Raspberry Pi itself:

```
$ wget http://www.stderr.nl/static/files/Hardware/RaspberryPi/gpio-shutdown-overlay.dts
```

Then compile the devicetree file:

```
$ dtc -@ -I dts -O dtb -o gpio-shutdown.dtbo gpio-shutdown-overlay.dts
```

Ignore any "Warning (unit_address_vs_reg): Node /fragment@0 has a unit name, but no reg property" messages you might get. Copy the compiled file to /boot/overlays where the loader can find it:

```
$ sudo cp gpio-shutdown.dtbo /boot/overlays/
```

Second, older images run a systemd version older than v225 (check with systemd --version), which only handles KEY_POWER events from specific devices. To make it work with gpio-keys as well, create a file called /etc/udev/rules.d/99-gpio-power.rules containing these lines:

```
ACTION!="remove", SUBSYSTEM=="input", KERNEL=="event*", SUBSYSTEMS=="platform", \
        DRIVERS=="gpio-keys", ATTRS{keys}=="116", TAG+="power-switch"
```

Update 2018-04: I restructured this post to reflect that Raspbian has merged the needed changes, and to make the instructions focus on making things work with a recent Raspbian image (while keeping the extended instructions for older images at the bottom).

Update 2019-01: Turns out the original Pi1 B rev1.0 has a different pinout, so I included instructions for that. Thanks to P Lindgren for suggesting and testing this.

Update 2019-07: It seems that the Pi4 bootloader does not currently support wakeup using GPIO3. This is expected to change in the near future (bootloader version RC3.3 has the fix, so newly manufactured Pi4 boards should have it), but if you have a Pi4 with an older bootloader, you might need to update it (using the recovery process). See this thread for more details. Thanks to Tyler for noticing this.

Sadly this did not work for me. However, the solution at 8bitjunkie did if you want to compare notes.

Hm, pity. I wonder what part is failing exactly (the link you gave has a completely different software approach, so hard to compare). If you want to help out debugging this, drop me an e-mail at matthijs@stdin.nl and I'll be happy to suggest some tests to see what is failing exactly.

Matthijs, I've tried many solutions with no luck. It works for me now that I followed your directions. You were right on all accounts, the RPi device-tree page you linked above is very helpful and systemd 215 did require 99-gpio-power.rules. Initially skipped over this step because I assumed it was optional. I should learn to read and follow directions :) Thank you for sharing this. - Jess

I've been looking for a simple solution for a while. I'm quite a noob, but it looks simple enough to achieve, thanks a lot! I have only one question, to be sure: I would only need a jumper cable and a power button (one in this kit for example www.amazon.fr/Elegoo-Composants-Electronique-R%C3%A9sistances-Potentiometre/dp/B01N0D3KTP/ref=sr12?s=computers&ie=UTF8&qid=1503046063&sr=1-2&th=1)?

That's correct, you basically only need something that can momentarily connect two pins together. A screwdriver would suffice, but two jumperwires (or one cut in half soldered to the button) and a pushbutton would be more obvious :-)

Thanks for the fast reply, I'll try this asap!

Does the DT overlay collide with I2C? I want both. On this project I'll probably resort to an on/off IC, but not having I2C if you want this functionality without an IC is kind of a deal breaker. The Pi doesn't have a lot of IO to begin with.

Yeah, this overlay collides with I²C. I haven't tried myself, but got a report from someone that ran into problems. Note that you can easily switch to another pin for the shutdown feature, you'll just lose the button-based power-on (but if you really need it, you could wire a separate reset button to the RUN pin, which should also power on after power off).

What would happen if your switch was latching?

I have a nice LED-lit one around that would be perfect for this, but I feel it might not work in this case?

I believe that when the pi is powered on, it behaves like a keyboard key, so it sends a keydown when the switch is closed and a keyup when the switch is opened. I'm not sure what systemd responds to, probably both. When the pi is powered down, I believe it only responds to a falling edge, so the switch closing.

This means that if you use a latching switch, things might just work - close the switch to power up, open the switch to generate a keyup and power down. It could be that a keyup is not sufficient, or that on startup a keydown is generated. In that case, it might be needed to set the active_low flag to 0, so you get a keydown on a rising edge instead. One risk of this approach is that the pi state and the button state might become out of sync, but that should be easily fixable by just toggling the button once or twice.
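To make the keydown/keyup reasoning above concrete, here is a small model of how a button pin turns into key events. This is a hypothetical Python sketch, not the kernel's gpio-keys code: it assumes an event is reported only when the logical "pressed" state changes, with active_low deciding which pin level counts as pressed.

```python
def key_events(pin_levels, active_low=True):
    """Hypothetical model of gpio-keys style edge handling.

    pin_levels: sequence of sampled pin levels (0 = low, 1 = high).
    Returns a list of key values: 1 = keydown, 0 = keyup.
    With active_low=True, a low pin (switch shorting the pin to GND) is "pressed".
    """
    events = []
    pressed = None  # state is unknown until the first sample, so no event for it
    for level in pin_levels:
        now_pressed = (level == 0) if active_low else (level == 1)
        if pressed is not None and now_pressed != pressed:
            events.append(1 if now_pressed else 0)
        pressed = now_pressed
    return events
```

For a latching switch that is closed and later opened (pin levels 1, 0, 0, 1), the default active-low setting yields a keydown on closing and a keyup on opening, while active_low=False swaps the two. In this model, a switch that is already closed when sampling starts produces no event at all.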

I bit the bullet and bought a push-to-make button anyway. All working great until I tried to add a separate GPIO pin for a power LED.

I added; dtoverlay=gpio-poweroff,gpiopin=6,active_low

But this seemed to cause some kind of clash? My button (using GPIO3 as suggested) no longer allowed me to power back on (although shutdown continued to work fine).

Any thoughts?

@Dom, I'm not entirely sure what you're saying, but if you change the pin number in the overlay, that only influences the shutdown, the power-on is hardcoded in the bootloader (or something like that). For you it seems to be reversed, which I can't quite explain?

Also, note that active_low should normally get a value of 0 or 1, and it defaults to 1 (active low). To make it active high, use active_low=0.

Thank you mate!

Working in my RPi without the udev rule.

Raspberry Pi 3 B

Raspbian Stretch with kernel 4.9.41-v7+ SystemD 232

I need to make the directory overlays inside /boot/

Pi ZeroW with Stretch. This seems to shutdown kind-of (ssh and other servers become unreachable, even usb otg serial console dies) but it still responds to ping, then won't start up again. Any ideas?

@Simon, interesting, seems like the shutdown fails somehow. Did you install extra packages that might interfere? Does it work with a clean stretch system? Does a normal shutdown from the commandline (shutdown -h now and systemctl poweroff) work? Anything interesting in the syslogs? Perhaps redirect the primary console to serial (with the console= kernel commandline option; I'm not sure how to set that, or if that works with a USB OTG serial port) and see if anything shows there?

Is there a way to insert a delayed reaction to the button pressed? I do not want it to shut down if I accidentally hit the button, it should be pressed for e.g. 1 sec?

Looking at the gpio-keys documentation, it does not support a "delayed press": www.kernel.org/doc/Documentation/devicetree/bindings/input/gpio-keys.txt

However, IIRC the gpio-keys driver does generate proper keydown and keyup events, so systemd could, in theory, detect the length of a press and require a minimum length before shutting down the system. I do not think systemd supports this now, but perhaps the maintainers would be willing to add it. You could see if there's a feature request for this already at github.com/systemd/systemd/issues and, if not, add one? If you find or add one, please post the link here!
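As an illustration of how a minimum press length could be derived from those keydown and keyup events, here is a hypothetical Python sketch; it is not something systemd does today. It assumes you already have (timestamp in seconds, value) pairs for the power key, using the Linux input values 1 for keydown, 0 for keyup and 2 for autorepeat.

```python
def long_presses(events, min_duration=1.0):
    """Return the start times of presses held for at least min_duration seconds.

    events: iterable of (timestamp, value) pairs for a single key, where value
    is 1 for keydown, 0 for keyup and 2 for autorepeat (ignored here).
    """
    result = []
    down_at = None  # timestamp of the pending keydown, if any
    for timestamp, value in events:
        if value == 1:
            down_at = timestamp
        elif value == 0 and down_at is not None:
            if timestamp - down_at >= min_duration:
                result.append(down_at)
            down_at = None
    return result
```

This version only decides when the button is released; an actual implementation would more likely start a timer on keydown, so the shutdown triggers while the button is still held.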

Worked perfectly! Thank you so much for the solution, the explanation, and for taking the time to link this page in other forums so that it could be found.

I have three questions please:

Pin 5 is quite sensitive. Just attaching or removing a small wire which is not connected to anything else causes the pi to shut down or start up. Can something be done to make this pin less sensitive?

I am powering the pi with a battery and charging it with a circuit which is capable of completely removing power from the pi after your shutdown process has completed its work. A URL to the charging device is as follows: learn.adafruit.com/adafruit-powerboost-1000c-load-share-usb-charge-boost?view=all. In order to tell the charger to kill power I need a pin on the pi which goes from 3.5 volt to 0 volts after your shutdown process has run. Is there a way to make a pin behave this way? If so, please tell me how.

I am running the GDM3 display manager because it is the only login screen I have found which incorporates a virtual onscreen keyboard. If I have started the pi but have not yet logged in and I short pins 5 and 6 together then your process will blank the screen but will not shut down the pi. Shorting the wires again immediately returns the login screen. My question is: Is it safe to completely remove power when the pi is in this condition or must I first find a way to execute the shut down command.

Any further guidance you can give would be greatly appreciated. Thanks again for all your help. John

@John, I'll try answering your questions.

The pin should have a pullup resistor (internal to the CPU, and external because of I²C) that prevents it from being super-sensitive. Perhaps you're using a raspberry pi without an external pullup? In any case, using a smaller pullup (which needs more current to drop 3.3V) could help there. Typical values are between 1k and 100k. You could try 1k (or even smaller, but the smaller the pullup, the more current flows when you press the button).
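To put numbers on that trade-off: while the button is pressed, the full 3.3V supply sits across the pullup, so by Ohm's law the current through it is as follows (the two resistor values are just the ends of the typical range mentioned above):

```latex
I = \frac{V}{R}, \qquad
\frac{3.3\,\mathrm{V}}{1\,\mathrm{k\Omega}} = 3.3\,\mathrm{mA}, \qquad
\frac{3.3\,\mathrm{V}}{100\,\mathrm{k\Omega}} = 33\,\mu\mathrm{A}
```

A smaller pullup therefore wastes more current during a press, but it also holds the pin high much more firmly against noise picked up by a loose wire.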

I believe there is a "gpio-poweroff" kernel module and overlay for this usecase, but I haven't looked at it at all.

The handling of the power button normally happens in systemd. I suspect that GDM3 "inhibits" the power key handling in systemd and then handles it in a different way itself. Perhaps you could configure GDM3 to not handle the power keys at all, or to handle the power key by doing a shutdown just like systemd normally does?

Hi Matthijs, sorry for the late response. It has taken me some time to absorb all the information you have given us. Truly everything we need to work with overlays is referenced in your post.

Thanks for explaining how to use a pull up resistor.

The gpio-poweroff overlay worked perfectly. It was already on the pi in the overlays directory. I called it in config.txt right after calling your overlay.

dtoverlay=gpio-poweroff,gpiopin=26,active_low

Directions for using all the overlays found in the overlays directory are in the README which is found in the same overlays directory.

For some reason the gpio-poweroff overlay interferes with restart functionality of pin 5 but that doesn't matter for what I am doing. It still halts the pi just fine.

I am now researching the problem where the overlays don't seem to load until after GDM3 login or as you suggest, the overlay behavior is overridden by GDM at the systemd level. I will report back if a solution is found.

Thanks again for all your help.

This mechanism works well on a CM3+CMIO3. One question: you mentioned in an earlier comment "the power-on is hardcoded in the bootloader". Do you have any more information regarding this? Is there no way to configure the GPIO pin for powerup? I cannot use GPIO1 or GPIO3 in my application because both are irrevocably tied to other functions. I am able to powerup by grounding the RUN pin, but would be nice to have shutdown as well as powerup on the same GPIO.

> Do you have any more information regarding this? Is there no way to configure the GPIO pin for powerup?

All info I have is linked in the post above. AFAIK, the wakeup pin is hardcoded in bootcode.bin, for which no sources are available. However, re-reading the forum post linked above, it says "I've now added a way to wake the board through GPIO.", which suggests that whoever wrote that does have access to the bootloader source. Perhaps you could try asking him or her (or other official rpi engineers) about this? If you find out anything, please report back here!

I did ask on RPi forums, and this is the reply from the engineer (dom) who originally added the wakeup support:

"The low power mode following "halt" is achieved by not powering on a number of power domains/clocks. That means no access to sdcard and hence no support for configuration files to choose wakeup pin."

So I guess you are restricted to using GPIO1/3 for wakeup.

Thank you for making this available, it works much better than the python solutions I've tried.

Enjoyed this mod, had it working for some time. Is there a way to gracefully close firefox when I press the sleep button to powerdown the pi? At the moment, it crashes & requires manual intervention on startup to restore the previous session.

George, I would actually expect this to gracefully shutdown processes already. The kernel sends out a shutdown signal, similarly to when you press the powerbutton on a regular PC. Systemd picks this up by initiating a system shutdown, I believe in the same way as when you would run shutdown -h now.

I would expect this to gracefully terminate all processes, only resorting to forcibly killing if things do not terminate quickly enough. Here are some ideas:

Maybe your Firefox somehow does not respond to the terminate signal, or not fast enough? Maybe systemd can be configured to wait a bit longer?

Maybe this kills the X server which cause Firefox to crash abnormally before it can gracefully terminate?

You could try shutdown -h now and/or systemctl poweroff and see if the same problem happens there? Is there anything in the system logs after the shutdown? Maybe some error messages from Firefox?

Let me know if you find anything!

I have successfully followed these instructions to shut down my Raspberry Pi 400 when I short pins 5 and 6, but am unable to get my Pi to wake when I short pins 5 and 6.

I tried installing the latest version of the bootloader using raspi-config and checked my eeprom file to see that WAKE_ON_GPIO is set to 1....I also tried setting it to 2 as the docs say that "Pi 400 has a dedicated power button which operates even if the processor is switched off. This behaviour is enabled by default, however, WAKE_ON_GPIO=2 may be set to use an external GPIO power button instead of the dedicated power button." Still no luck.

Any ideas why I cannot start my pi by shorting pins 5 and 6 or ideas for debugging this?

Hm, I have not much idea about the Pi 400. I mostly investigated the shutdown part of things, the power-on part is handled by the closed-source bootloader, so not much to debug on.

However, searching for the WAKE_ON_GPIO you mention, I find "... will run in a lower power mode until either GPIO3 or GLOBAL_EN are shorted to ground", so that suggests the bootloader on pi4/pi400 uses GPIO3 like most other pi boards.

However, looking at the pi400 pinout, it looks like the pinout is different, and GPIO3 is not at pin 5, but at pin 15! Maybe shorting pin 15 to GND (which is not at pin 16, btw) would work to power on again?

Let me know if this helps, then I can update my post with some extra info :-)

Thanks for getting back to me so quickly. I tried connecting pin 15 to pin 6 (ground) and was unable to shutdown or start up.

When I look for the pin layout for pi400 there's conflicting info out there which is making life difficult!

I installed the Python library gpiozero and ran "pinout" to get a look at the GPIO pin layout. It shows GPIO3 on pin 5.

I ran "gpio readall" and it shows GPIO 3 on pin 15.

All that being said, I'm fairly certain that pin 5 is GPIO3 on my board since:

I can shutdown my pi by shorting pins 5 and 6 once I set dtoverlay=gpio-shutdown,gpio_pin=3 in /boot/config.txt.

I looked at /proc/cpuinfo and it lists my Hardware as BCM2711. "pinout" shows GPIO3 on pin 5 and shows the hardware as BCM2711.

So, I'm back where I started: I can shutdown by shorting pins 5 and 6 but cannot start back up.

Bummer, I had hoped this would solve things.

I agree with your assessment that you probably need pin 5, but only based on your first argument (that the gpio-shutdown overlay works with gpio_pin=3).

Your second argument probably does not hold: The BCM2711 just refers to the chip, but which chip pin is routed to which expansion header is determined by the PCB layout, so is not necessarily dictated by the chip used.

Having said that: I have no further suggestions, unfortunately. Maybe you could ask on the raspberry pi forum, I think there are some people there with access to the bootloader source code, which might be able to verify how things are intended to work...

So glad to see this thread is still active :) I'd like to confirm something if I could: As I understand your reply of 2017-08-22 19:21, the dtoverlay for gpio-shutdown must be on gpio_pin=3 (a.k.a. I2C SCL) to achieve both power up and power down using a single switch - is that still the case today?

> the dtoverlay for gpio-shutdown must be on gpio_pin=3 (a.k.a. I2C SCL) to achieve both power up and power down using a single switch - is that still the case today?

AFAIK that is indeed still the case. The power-up behavior is hardcoded in the bootloader, and the dtoverlay does not configure this in any way (AFAIK the power-up pin is not configurable; if it were, that would need to happen in config.txt).

Re GPIO 3 as the sole means of startup: That seems to agree with the docs, and other things I've read. However, I've seen a recent claim that it's been done using GPIO 21 (no URL as my last post was rejected as spam?!). The only thing that occurs to me is that with the firmware and hardware being proprietary, we cannot know for certain. :(

> However, I've seen a recent claim that it's been done using GPIO 21

Oh, that would be interesting, as it would free up GPIO3 to be used for I²C.

> (no URL as my last post was rejected as spam?!)

Yeah, the spamfilter rejects all posts that contain links, since that turned out to reject almost all spam. If you want to post a link, just post it without the protocol prefix (i.e. just www.example.org), which works well enough to be used by humans (and I often edit the comment manually to make the link clickable again).

@Seamus, thanks for the link. Reading that thread, it seems someone has made startup using GPIO21 working on a rpi 4B, but the report is a bit vague. If anyone has a 4B and can verify that GPIO21 does indeed work (and maybe also check if GPIO3 also works), I'll update my post to reflect that.

I had hoped to get some feedback from my answer to the gentleman that made that claim - a confirmation of some sort. I'd be willing to try it on my 4B, but up to my ears with my own projects right now. Speaking of, I've prototyped & now tested a "single button on-off" circuit that works with the low power mode on the 4B (WAKE_ON_GPIO=0 and POWER_OFF_ON_HALT=1). Let me know if you're interested in seeing this - working on a "publish-able" schematic now.

> Speaking of, I've prototyped & now tested a "single button on-off" circuit that works with the low power mode on the 4B (WAKE_ON_GPIO=0 and POWER_OFF_ON_HALT=1). Let me know if you're interested in seeing this - working on a "publish-able" schematic now.

Cool, sounds good, I would be interested. Not so much for myself (I do not actually have a Rpi4), but would be a good addition to this post. If you publish your work somewhere, I'd be happy to link to it from here.

Here you are: I've linked my Name to the page on GitHub. Feel free to use any or all of it.

Good morning,

I am running a Pi 4B with Volumio installed as a music streamer. I have been trying to install a power button for several days to no avail. I have tried several different ways with no success. I have tried using physical pins 5 and 6 as well as physical pins 39 and 40. I did manage to get the power LED working off of physical pins 9 and 10 but cannot seem to get the button to work on power down. I can wake the Pi but shutdown is a no go. ANY help would be greatly appreciated! Thanks!

Hey Bob, bummer that it's not working for you. One thing I would try to confirm first is whether the kernel gpio-keys driver is successfully loaded and generating KEY_POWER presses. The kernel startup messages (dmesg) might offer some info on this, and you can try to log keypresses (using a command like libinput debug-events, which I think should show these button presses). Depending on the results, you could also try manually reading the gpio pin state to rule out hardware issues, or look into the systemd-logind logs and udev rules to see if they correctly see the keypresses.
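If libinput is not installed, a rough alternative is to read the raw events the kernel writes to the input device. The Python sketch below decodes struct input_event records and prints KEY_POWER presses. It assumes the native layout of a struct timeval followed by a 16-bit type, 16-bit code and 32-bit value, which holds on typical 32-bit and 64-bit Linux builds but may differ on 32-bit systems built with 64-bit time_t. Which /dev/input/event* node belongs to gpio-keys is up to you to find (for example in /proc/bus/input/devices); reading it usually needs root.

```python
import struct
import sys

# struct input_event: struct timeval (two C longs), __u16 type, __u16 code, __s32 value
EVENT_FORMAT = "llHHi"
EVENT_SIZE = struct.calcsize(EVENT_FORMAT)

EV_KEY = 0x01    # event type for keys and buttons
KEY_POWER = 116  # same key code as in the udev rule's ATTRS{keys}=="116"


def decode_events(data):
    """Yield (seconds, microseconds, type, code, value) for each complete event in data."""
    usable = len(data) - len(data) % EVENT_SIZE
    for offset in range(0, usable, EVENT_SIZE):
        yield struct.unpack_from(EVENT_FORMAT, data, offset)


def power_key_values(data):
    """Return the values (1=down, 0=up, 2=repeat) of all KEY_POWER events in data."""
    return [value for _sec, _usec, etype, code, value in decode_events(data)
            if etype == EV_KEY and code == KEY_POWER]


def main(path):
    names = {0: "up", 1: "down", 2: "repeat"}
    # Unbuffered, so each read returns whole events as soon as the kernel has them.
    with open(path, "rb", buffering=0) as dev:
        while True:
            chunk = dev.read(EVENT_SIZE)
            if not chunk or len(chunk) < EVENT_SIZE:
                break
            for value in power_key_values(chunk):
                print("KEY_POWER", names.get(value, value))


if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1])
```

Run it as something like sudo python3 keywatch.py /dev/input/event0 (script name and device number are placeholders). If pressing the button prints nothing, the problem is below systemd; if it prints down/up pairs, look at logind and the udev tagging instead.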

I hope this helps figure this out, feel free to comment more if you need more help further on :-)

@Bob McCourt, I use physical pins 39 and 40 to shutdown using the overlay, what I forgot was that the gpio number != physical number, so the line should read dtoverlay=gpio-shutdown,gpio=21 this works with a momentary pushbutton attached to a two pin header. I use a custom case, 3D printed with a dedicated hole for the button.

Is there a way to do this so that you can set it up to not be a momentary button press, more like using a switch so if you pulled 2 gpio pins to ground it would always shutdown immediately after starting as long as those 2 pins were bridged?

If you mean it should shut down ASAP if the pins are bridged already on startup, then I'm doubtful that will work: the gpio-keys driver probably needs to see a transition to generate a keypress. One thing I could imagine is that autorepeat could be helpful (i.e. keep generating the same keypress as long as the key is kept "pressed"), but I suspect this will only start from an initial transition, not if the key is already "pressed" on startup.

You could try though - this is triggered by setting the autorepeat in the devicetree (see www.kernel.org/doc/Documentation/devicetree/bindings/input/gpio-keys.txt).

This requires modifying and compiling the devicetree overlay - the instructions in the "" section in the blogpost above should be helpful. The linked .dts file should be slightly modified: below the line compatible = "gpio-keys"; you should add a line autorepeat;

If that does not work, there might be other solutions (different kernel drivers or something in userspace), but I have no ready suggestions.

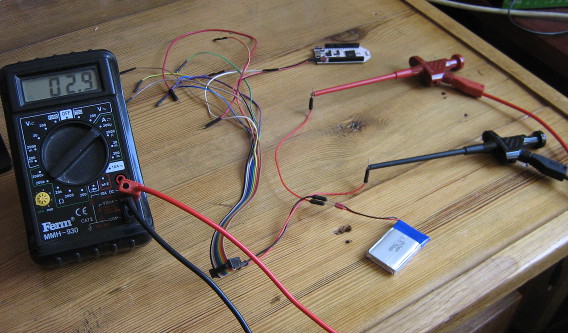

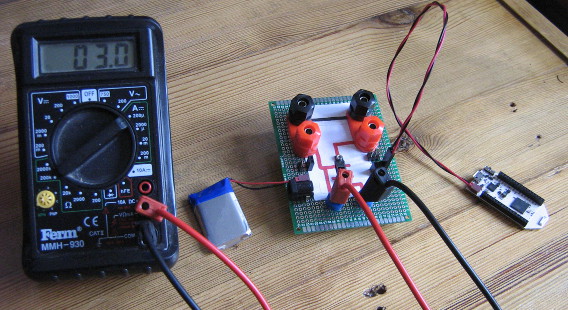

Recently, I needed to do battery current draw measurements on my Pinoccio boards. Since the battery is connected using this tinywiny JST connector, I couldn't just use some jumper wires to redirect the current flow through my multimeter. I ended up using jumper wires, combined with my Bus Pirate fanout cable, which has female connectors just small enough, to wire everything up. The result was a bit of a mess:

Admittedly, once I cleaned up all the other stuff around it from my desk for this picture, it was less messy than I thought, but still, jamming in jumper wires into battery connectors like this is bound to wear them out.

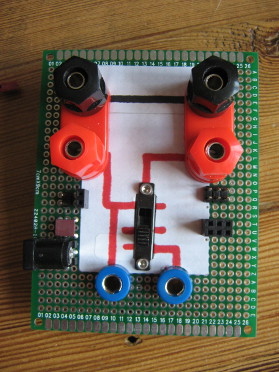

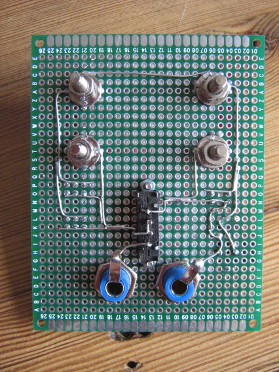

So, I ordered up some JST FSH connectors (as used by the battery) and some banana sockets and built a simple board that allows connecting a power source and a load, keeping the ground pins permanently connected, but feeding the positive pins through a pair of banana sockets where a current meter can plug in. For extra flexibility, I added a few other connections, like 2.54mm header pins and sockets, a barrel jack plug and more banana sockets for the power source and load. I just realized I should also add USB connectors, so I can easily measure current used by a USB device.

The board also features a switch (after digging in my stash, I found one old three-way switch), which is probably the first component to die in this setup. The switch allows switching between "on", "off" and "redirect through measurement pins" modes. I tried visualizing the behaviour of the pins on the top of the PCB, but I'm not too happy with the result. Oh well, as long as I know what it does :-)

All I need is a pretty case to put under the PCB and a μCurrent to measure small currents accurately and I'm all set!

Update: The board was expanded by adding a USB-A and a USB-B plug to interrupt USB power, with some twisted wire to keep the data lines connected, which seems to work (not shown in the image).

I was previously running an ancient Windows XP install under VirtualBox for the occasional time I needed Windows for something. However, since VirtualBox is no longer supplied in Debian Stretch due to security policy problems, I've been experimenting with QEMU, KVM and virt-manager. Migrating my existing VirtualBox XP installation to virt-manager didn't work (it simply wouldn't boot), and I do not have any spare Windows keys lying around, but I do have a Windows 7 installed alongside my Linux on a different partition, so I decided to see if I could get that to boot inside QEMU/KVM.

An obvious problem is the huge change in hardware between the real and virtual environment. Apparently recent Windows versions don't really mind this in terms of drivers, but the activation process could be a problem, especially when booting both virtually and natively. So far I have not seen any complications with either drivers or activation, not even after switching to virtio drivers (see below). I am using an OEM (preactivated?) version of Windows, so that might help in this area.

Update: When booting Windows in the VM a few weeks later, it started bugging me that my Windows was not genuine, and it seems no longer activated. Clicking the "resolve now" link gives a broken webpage, and going through system properties suggests contacting Lenovo (my laptop provider) to resolve this (or buying a new license). I'm not yet sure if this is really problematic, though. This happened shortly after replacing my hard disk, but I'm not sure if that's actually related.

Rebooting into Windows natively shows it is activated (again or still), but booting it virtually directly after that still shows as not activated...

Creating the VM

Booting the installation was actually quite painless: I just used the wizard inside virt-manager, entered /dev/sda (my primary hard disk) as the storage device, pressed start, selected to boot Windows in my bootloader and it booted Windows just fine.

Booting is not really fast, and once it runs, things are a bit sluggish but acceptable.

One caveat is that this adds the entire disk, not just the Windows partition. This also means the normal bootloader (grub in my case) will be used inside the VM, which will happily boot the normal default operating system. Protip: Don't boot your Linux installation inside a VM inside that same Linux installation; both instances will end up fighting over your filesystem. Thanks to fsck, which seems to have fixed the resulting garbage so far...

To prevent this, make sure to actually select your Windows installation in the bootloader. See below for a more permanent solution.

Installing guest drivers

To improve performance, and allow better integration, some special Windows drivers can be installed. Some of them work right away, for some of them, you need to change the hardware configuration in virt-manager to "virtio".

I initially installed some win-virtio drivers from Fedora (I used the 0.1.141-1 version, which is both stable and latest right now). However, the QXL graphics driver broke the boot, Windows would freeze halfway through the initial boot animation (four coloured dots swirling to form the Windows logo). I recovered by booting into safe mode and reverting the graphics driver to the default VGA driver.

Then, I installed the "spice-guest-tools" from spice-space.org, which again installed the QXL driver, as well as the spice guest agent (which allows better mouse integration, desktop resizing, clipboard sharing, etc.). Using this version, I could boot again, now with proper QXL drivers. I'm not sure if this is because the QXL driver was actually different (the version number in the device manager / .inf file was 6.1.0.10024 in both cases, I believe), or because additional drivers or the spice agent were now installed.

Switching to virtio

For additional performance, I changed the networking and storage configuration in virt-manager to "virtio", which, instead of emulating actual hardware, provides optimized interaction between Windows and QEMU, but it does require specific drivers on the guest side.

For the network driver, this was a matter of switching the device type in virt-manager and then installing the driver through the device manager. For the storage devices, I first added a secondary disk set to "virtio", installed drivers for that, then switched the main disk to "virtio" and finally removed the secondary disk. Some people suggest this since Windows can only install drivers when booted, and of course cannot boot without drivers for its boot disk.

I did all this before installing the "spice-guest-tools" mentioned above. I suspect that using that installer will already put all drivers in a place where Windows can automatically install them from, so perhaps all that's needed is to switch the config to "virtio".

Note that system boot didn't get noticeably faster, but perhaps a lot of the boot happens before the virtio driver is loaded. I haven't really compared SATA vs virtio in normal operation, but it feels acceptable (but not fast). I recall that my processor does not have I/O virtualization support, so that might be the cause.

Replacing the bootloader

As mentioned, virtualizing the entire disk is a bit problematic, since it also reuses the normal bootloader. Ideally, you would only expose the needed Windows partition (which would also provide some additional protection of the other partitions), but since Windows expects a partitioned disk, you would need to somehow create a virtual disk composed of virtual partition table / boot sector merged with the actual data from the partition. I haven't found any way to allow this.

Another approach is to add a second disk with just grub on it, configured to boot Windows from the first disk, and use the second disk as the system boot disk.

I tried this approach using the Super Grub2 Disk, which is a ready-made bootable ISO-hybrid (suitable for CDROM, USB-stick and hard disk). I downloaded the latest .iso file, created a new disk drive in virt-manager and selected the iso (I suppose a CDROM drive would also work). Booting from it, I get quite an elaborate grub menu that detects all kinds of operating systems, and I can select Windows through Boot Manually.... -> Operating Systems.

Since that is still quite some work (and easy to forget when I haven't booted Windows in a while), I decided to create a dedicated tiny hard disk, just containing grub, configured to boot my Windows disk. I found some inspiration on this page about creating a multiboot USB stick and turned it into this:

matthijs@grubby:~$ sudo dd if=/dev/zero of=/var/lib/libvirt/images/grub-boot-windows.img bs=1024 count=20480

20480+0 records in

20480+0 records out

20971520 bytes (21 MB, 20 MiB) copied, 0.0415679 s, 505 MB/s

matthijs@grubby:~$ sudo parted /var/lib/libvirt/images/grub-boot-windows.img mklabel msdos

matthijs@grubby:~$ sudo parted /var/lib/libvirt/images/grub-boot-windows.img mkpart primary 2 20

matthijs@grubby:~$ sudo losetup -P /dev/loop0 /var/lib/libvirt/images/grub-boot-windows.img

matthijs@grubby:~$ sudo mkfs.ext2 /dev/loop0p1

(output removed)

matthijs@grubby:~$ sudo mount /dev/loop0p1 /mnt/tmp

matthijs@grubby:~$ sudo mkdir /mnt/tmp/boot

matthijs@grubby:~$ sudo grub-install --target=i386-pc --recheck --boot-directory=/mnt/tmp/boot /dev/loop0

matthijs@grubby:~$ sudo sh -c "cat > /mnt/tmp/boot/grub/grub.cfg" <<EOF

insmod chain

insmod ntfs

search --no-floppy --set root --fs-uuid F486E9B586E9790E

chainloader +1

boot

EOF

matthijs@grubby:~$ sudo umount /dev/loop0p1

matthijs@grubby:~$ sudo losetup -d /dev/loop0

The single partition starts at 2MB, for alignment and to leave some room for grub (this is also common with regular hard disks nowadays). Grub is configured to find my Windows partition based on its UUID, which I figured out by looking at /dev/disk/by-uuid.
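The entries in /dev/disk/by-uuid are symlinks named after the filesystem UUID, pointing at the device node, so finding the right UUID is a matter of seeing which entry resolves to the Windows partition. A small Python sketch of that lookup (running blkid gives the same information):

```python
import os
import sys


def uuid_map(by_uuid_dir="/dev/disk/by-uuid"):
    """Map each filesystem UUID to the device node its symlink points at."""
    mapping = {}
    for name in sorted(os.listdir(by_uuid_dir)):
        link = os.path.join(by_uuid_dir, name)
        # Entries are relative symlinks such as ../../sda2; realpath resolves them.
        mapping[name] = os.path.realpath(link)
    return mapping


if __name__ == "__main__" and os.path.isdir("/dev/disk/by-uuid"):
    for uuid, device in uuid_map().items():
        print(uuid, "->", device, file=sys.stdout)
```

The value printed for the NTFS partition is what goes after --fs-uuid in the search line of grub.cfg.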

I added the resulting grub-boot-windows.img as a disk drive in virt-manager (I used SATA, since I was not sure if virtio would boot, and the performance of this disk is irrelevant anyway) and configured it as the first and only boot disk. Booting the VM now boots Windows directly.

For a theatre performance, I needed to make the tail lights of an old car controllable through the DMX protocol, which the most used protocol used to control stage lighting. Since these are just small incandescent lightbulbs running on 12V, I essentially needed a DMX-controllable 12V dimmer. I knew that there existed ready-made modules for this to control LED-strips, which also run at 12V, so I went ahead and tried using one of those for my tail lights instead.

I looked around on eBay for a module to use, and found this one. The same design seems to be available from dozens of different vendors on eBay, so these are probably clones, or a single manufacturer supplying all of them.

DMX module details

This module has a DMX input and output using XLR or a modular connector, and screw terminals for 12V power input, 4 output channels and one common connection. The common connection is 12V, so the output channels sink current (i.e. "common anode"), which is relevant for LEDs. For incandescent bulbs, current can flow either way, so this does not really matter.

Opening up the module, it seems fairly simple. Inside, there's a microcontroller (or a dedicated DMX decoder chip? I couldn't find a datasheet), along with two RS-422 transceivers for DMX, four AP60T03GH MOSFETs for driving the channels, and one linear regulator to generate a logic supply voltage.

On the DMX side, this means that the module has separate input and output signals (instead of just connecting them together). It also means that the DMX signal is not isolated, which AFAIU violates the recommendations of the DMX specification (and might be problematic if there is more than a few volts of ground difference). On the output side, there seem to be just MOSFETs to switch the outputs, without any additional protection.

Just try it?

I tried connecting my tail lights to the module, which worked right away, nicely dimming the lights. However:

When changing the level, a high-pitched whine would be audible, which would fall silent when the output level was steady. I was not sure whether this came from the module or the (external) power supply, but in either case this suggests some oscillations that might be harmful for the equipment (and the whine was slightly annoying as well).

Dimming LEDs usually works using PWM, which switches the LED on and off very quickly, faster than the eye can see. However, when switching an inductive load (such as a coil), a very high voltage spike can occur when the coil current wants to continue flowing but is blocked by the PWM transistor.
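To give a feel for the magnitudes involved, here is a back-of-the-envelope sketch of the relation behind such spikes, V = L·dI/dt. The function name is hypothetical, and the inductance, current and switch-off time used below are made-up illustrative values, not measurements from this module or these bulbs:

```cpp
// Back-of-the-envelope estimate of the extra voltage across an inductance
// when the current through it is switched off: V = L * dI/dt.
// All inputs are illustrative assumptions, not measured values.
double inductive_spike_volts(double inductance_henry, double current_amp,
                             double switch_off_seconds) {
    return inductance_henry * current_amp / switch_off_seconds;
}
```

For example, a hypothetical 1µH of stray inductance carrying 1A that is interrupted in 50ns gives inductive_spike_volts(1e-6, 1.0, 50e-9), roughly 20V on top of the supply voltage. Fast switching makes dI/dt large, so even a small inductance could plausibly produce a significant spike.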

I'm not sure how much inductance a normal light bulb has, but there will be at least a bit of it, also from the wiring. Hence, I wanted to check the voltages involved using a scope, to prevent damage to the components.

Measuring

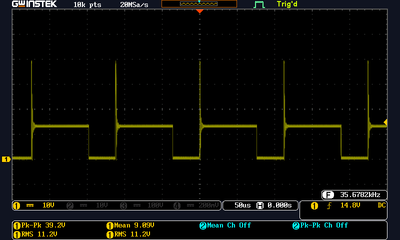

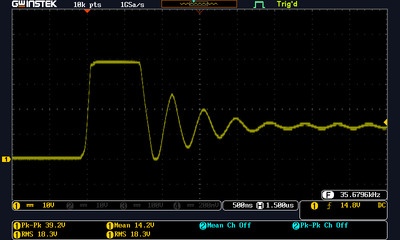

Looking at a channel output pin on a scope shows the following. The left image nicely shows the PWM waveform, but also shows a high-voltage pulse when the transistor is switched off (remember that the common pin is connected to 12V: when the transistor is on, it sinks current and pulls the channel pin to 0V, and when it is off, current stops flowing and the pin returns to 12V). The right image shows a close-up of the high-voltage spike.

The spike is about 39V, which exceeds the maximum rating of the transistor (30V), so that is problematic. While I was doing additional testing, I also let some of the magic smoke escape (I couldn't see where exactly, probably the cap or series resistor near the regulator). I'm not sure whether this was actually caused by these spikes or whether I messed something up in my testing, but fortunately the module still seems to work, so there must be some smoke left inside...

The shape of this pulse is interesting: it seems as if something is capping it at 39V. I suspect this might be the MOSFET body diode going into reverse breakdown. I'm not entirely sure whether this is a problematic condition; the datasheet does not specify any ratings for it (so I suspect it is).

Adding diodes

Normally, these inductive spikes are suppressed by adding a snubber diode. I tried using a simple 1N4001 diode, which helped somewhat, but still left part of the pulse. Using the more common 1N4148 diode helped, but it cannot handle the full current (though the specs are a bit unclear when short but repetitive current surges are involved).

I had the impression that the 1N4001 diode needed too much time to turn on, so I ordered some Schottky diodes (which should be faster). I could not find any definitive info on whether this should really be needed (some say regular diodes already have turn-on times of a few ns), but it does seem using Schottkys helped.

The dimmer module supports 8A of current per channel, so I ordered some Schottkys that could handle 8A. Since they were huge, I settled for using 1N5819 Schottkys instead. These are only rated for 1A of current, but that is continuous average current. Since these spikes are very short, they should be able to handle higher currents during the spikes (they have a surge current rating of 25A, but that is only non-repetitive, which I'm not sure applies here...).

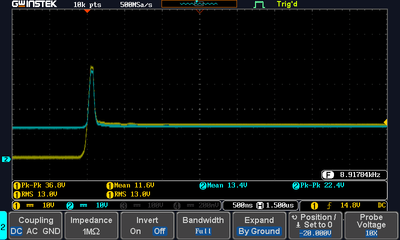

Here's what happens when adding a 1N5819:

The yellow line is the channel output, the blue line is the 12V input. As you can see, the pulse is greatly reduced in duration. However, there is still a bit of a spike left. Presumably because the diode now connects to the 12V line, the 12V line also follows this spike. To fix that, I added a capacitor between 12V and GND. I would expect any input capacitors on the regulator to already handle this, but it seems there is a 330Ω series resistor in the 12V line to the regulator (perhaps to protect the regulator from voltage spikes?).

Adding a capacitor

This is what happens when adding a 100nF ceramic capacitor (along with the 1N5819 diode already present):

This successfully reduces the pulse voltage, but introduces some ringing (probably resonance between the capacitance and the inductance?). Replacing it with a 1uF capacitor helps slightly:

Note that I forgot to attach the blue probe here. The ringing is still present, but is now much lower in frequency. In this setup, the high-pitched whining I mentioned before was continuously present, not just when changing the dim level.
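The drop in ringing frequency with the larger capacitor would be consistent with a simple LC resonance, f = 1/(2π·√(LC)). Here is a minimal sketch, assuming an ideal LC circuit; the function name is hypothetical and the stray inductance is unknown, so the 1µH used below is purely an assumption:

```cpp
#include <cmath>

// Resonant frequency of an ideal LC circuit: f = 1 / (2 * pi * sqrt(L * C)).
// Resistance and capacitor non-idealities (ESR, ESL) are ignored.
double lc_resonance_hz(double inductance_henry, double capacitance_farad) {
    const double pi = std::acos(-1.0);
    return 1.0 / (2.0 * pi * std::sqrt(inductance_henry * capacitance_farad));
}
```

With the assumed 1µH, lc_resonance_hz(1e-6, 100e-9) is roughly 503kHz and lc_resonance_hz(1e-6, 1e-6) roughly 159kHz. Whatever the actual inductance, a tenfold larger capacitor lowers the resonant frequency by a factor √10 ≈ 3.16.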

I also tried using a 1uF electrolytic capacitor, which seems to give the best results, so I stuck to that. Here's what my final setup gives:

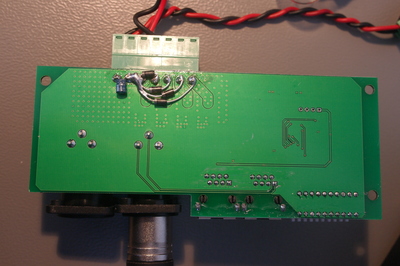

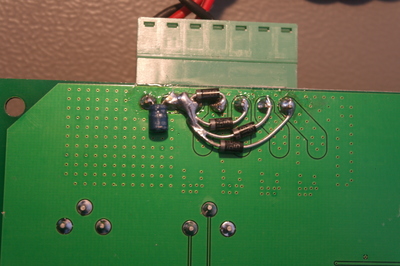

I soldered in these diodes and the cap on the bottom side of the PCB, since that's where I could access the relevant pins:

Unsolved questions

I also tested with a short LED strip, which to my surprise showed similar surges. They were a lot smaller, but the current was also a lot smaller. This might suggest that it's not the bulb itself that causes the inductive spike, but rather the wiring (even though that was only some 20-30cm) or perhaps the power supply? It also suggests that when using this with a bigger LED strip, you might also be operating the MOSFETs outside of their specifications...

I'm also a bit surprised that I needed the capacitor on the input voltage. I wonder if there might also be some inductance on the power supply side (e.g. the power supply giving a voltage spike when the current drops)?

Finally, what causes this difference between the electrolytic and ceramic capacitors? I know they are different, but I do not know off-hand how exactly.

Got any insights? Feel free to leave a comment or drop me a mail!

In some Arduino / C++ project, I was using a custom assert() macro that, if the assertion failed, would show an error message along with the current filename and line number. The filename was automatically retrieved using the __FILE__ macro. However, this macro returns a full path, while we only had little room to show it, so we wanted to show the filename only.

Until now, we've been storing the full filename, and when an assert was triggered we would use the strrchr function to chop off all but the last part of the filename (commonly called the "basename") and display only that. This works just fine, but it is a waste of flash memory, storing all these (mostly identical) paths. Additionally, when an assertion fails, you want to get a message out ASAP, since who knows what state your program is in.
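For comparison, that runtime approach boils down to something like the following minimal sketch (runtime_basename is a hypothetical name; the project's actual macro is not shown here). The full path passed in still has to be stored in flash, and the scan happens at the moment the assertion fails:

```cpp
#include <cstring>

// Runtime basename: return the part of path after the last '/', or the
// whole path if it contains no '/'. Only the displayed part is shortened;
// the complete path string still lives in the program image.
const char *runtime_basename(const char *path) {
    const char *slash = std::strrchr(path, '/');
    return slash ? slash + 1 : path;
}
```

A custom assert macro would then pass runtime_basename(__FILE__) to whatever displays the message.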

Neither of these is really a showstopper for this particular project, but I suspected there would be some way to use C++ constexpr functions and templates to force the compiler to handle this at compile time, and only store the basename instead of the full path. This week, I took up the challenge and made something that works, though it is not completely pretty yet.

Working out where the path ends and the basename starts is fairly easy using something like strrchr. Of course, that's a runtime version, but it is easy to do a constexpr version by implementing it recursively (a C++11 constexpr function may consist of only a single return statement, so loops are not available), which allows the compiler to evaluate these functions at compile time. For example, here are constexpr versions of strrchrnul(), basename() and strlen():

#include <stddef.h> // for size_t

/**

* Return the last occurence of c in the given string, or a pointer to

* the trailing '\0' if the character does not occur. This behaves like

* strrchr(), except that it never returns a null pointer.

*/

constexpr const char *static_strrchrnul(const char *s, char c) {

/* C++14 version

if (*s == '\0')

return s;

const char *rest = static_strrchrnul(s + 1, c);

if (*rest == '\0' && *s == c)

return s;

return rest;

*/

// Note that we cannot implement this while returning nullptr when the

// char is not found, since looking at (possibly offsetted) pointer

// values is not allowed in constexpr (not even to check for

// null/non-null).

return *s == '\0'

? s

: (*static_strrchrnul(s + 1, c) == '\0' && *s == c)

? s

: static_strrchrnul(s + 1, c);

}

/**

* Return one past the last separator in the given path, or the start of

* the path if it contains no separator.

* Unlike the regular basename, this does not handle trailing separators

* specially (so it returns an empty string if the path ends in a

* separator).

*/

constexpr const char *static_basename(const char *path) {

return (*static_strrchrnul(path, '/') != '\0'

? static_strrchrnul(path, '/') + 1

: path

);

}

/** Return the length of the given string */

constexpr size_t static_strlen(const char *str) {

return *str == '\0' ? 0 : static_strlen(str + 1) + 1;

}
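With C++14's relaxed constexpr rules (loops and mutable locals are allowed; GCC supports this from version 5), the recursion is no longer needed. The following sketch shows iterative equivalents of static_basename() and static_strlen(); the names with a 14 suffix are hypothetical, chosen so they do not clash with the functions above. The static_asserts demonstrate that the results are computed by the compiler:

```cpp
#include <cstddef>

// Return one past the last '/' in path, or path itself if there is none.
// Like static_basename(), a trailing '/' yields an empty string.
constexpr const char *static_basename14(const char *path) {
    const char *result = path;
    for (const char *p = path; *p != '\0'; ++p) {
        if (*p == '/')
            result = p + 1;
    }
    return result;
}

// Length of a NUL-terminated string, computed with a loop.
constexpr std::size_t static_strlen14(const char *str) {
    std::size_t len = 0;
    while (str[len] != '\0')
        ++len;
    return len;
}

// Evaluated at compile time: a wrong result is a compile error.
static_assert(*static_basename14("/src/project/main.cpp") == 'm',
              "basename starts after the last separator");
static_assert(static_strlen14(static_basename14("/src/project/main.cpp")) == 8,
              "basename of a path");
static_assert(static_strlen14(static_basename14("main.cpp")) == 8,
              "path without separator");
static_assert(static_strlen14(static_basename14("/src/dir/")) == 0,
              "trailing separator gives an empty name");
```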

So, to get the basename of the current filename, you can now write:

constexpr const char *b = static_basename(__FILE__);

However, that just gives us a pointer halfway into the full string literal. In practice, this means the full string literal will be included in the link, even though only a part of it is referenced, which voids the space savings we're hoping for (confirmed on avr-gcc 4.9.2, but I do not expect newer compiler versions to be smarter about this, since the linker is involved).

To solve that, we need to create a new char array variable that contains just the part of the string that we really need. As happens more often when I look into complex C++ problems, I came across a post by Andrzej Krzemieński, which shows a technique to concatenate two constexpr strings at compile time (his blog has a lot of great posts on similar advanced C++ topics, a recommended read!). He has a similar problem there: he needs to define a new variable that contains the concatenation of two constexpr strings.

For this, he uses some smart tricks with parameter packs (variadic template arguments), which allow declaring an array and setting its initial value using indexed pointer accesses (e.g. char foo[] = {ptr[0], ptr[1], ...}). One caveat is that the length of the resulting string is part of its type, so it must be specified using a template argument. In the concatenation case, this can easily be derived from the types of the strings to concatenate, which gives nice and clean code.
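A minimal, self-contained sketch of this pack-expansion trick, assuming C++14 for std::index_sequence. This is not the static_string library from that post; fixed_string, copy_chars and make_fixed_string are hypothetical names. Note that the length N has to be passed explicitly as a template argument:

```cpp
#include <cstddef>
#include <utility>

// Hypothetical fixed-size string: exactly N characters plus a '\0'.
template <std::size_t N>
struct fixed_string {
    char data[N + 1];
    constexpr const char *c_str() const { return data; }
};

// The pack I... is 0, 1, ..., N-1, so this expands to
// data = {ptr[0], ptr[1], ..., ptr[N-1], '\0'}.
template <std::size_t N, std::size_t... I>
constexpr fixed_string<N> copy_chars(const char *ptr, std::index_sequence<I...>) {
    return fixed_string<N>{{ptr[I]..., '\0'}};
}

// Copy the first N characters of ptr into a new array of N + 1 chars.
template <std::size_t N>
constexpr fixed_string<N> make_fixed_string(const char *ptr) {
    return copy_chars<N>(ptr, std::make_index_sequence<N>{});
}
```

For example, make_fixed_string<3>("abcdef") yields an object whose data member holds "abc". Combined with the constexpr functions above, something like make_fixed_string<static_strlen(p)>(p), with p initialized from static_basename(__FILE__), would produce an array containing just the basename.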

In my case, the length of the resulting string depends on the contents of the string itself, which is more tricky. There is no way (that I'm aware of, suggestions are welcome!) to automatically deduce a template argument from the value of a non-template argument. What you can do is use constexpr functions to calculate the length of the resulting string, and explicitly pass that length as a template argument. Since you also need to pass the contents of the new string as a normal argument (template parameters cannot be arbitrary pointers to strings, only addresses of variables with external linkage), this introduces a bit of duplication.

Applied to this example, this would look like this:

constexpr const char *basename_ptr = static_basename(__FILE__);

constexpr auto basename = array_string<static_strlen(basename_ptr)>(basename_ptr);

This uses the static_string library published along with the above blog post. For this example to work, you will need some changes to the static_string class (to make it accept regular char* as well), see