These are the ramblings of Matthijs Kooijman, concerning the software he hacks on, hobbies he has and occasionally his personal life.

Most content on this site is licensed under the WTFPL, version 2 (details).



For anyone who cares: I just replaced the GPG (GNU Privacy Guard) key that I use for signing my emails and Debian uploads.

My previous key was already 9 years old and used a 1024-bit DSA key. That seemed like a good idea at the time, but for some time these small keys and signatures using SHA-1 have been considered weak and their use is discouraged. By the end of this year, Debian will be actively removing the weak keys from their keyring, so about time I got a stronger key as well (not sure why I didn't act on this before, perhaps it got lost on a TODO list somewhere).

In any case, my new key has ID A1565658 and fingerprint E7D0 C6A7

5BEE 6D84 D638 F60A 3798 AF15 A156 5658. It can be downloaded from

the keyservers, or from my own webserver (the latter includes

my old key for transitioning).

Now, I should find some Debian Developers to meet in person and sign my key. Should have taken care of this before T-Dose last year...

While trying to track down a reset bug in the Pinoccio firmware, I

suspected something was going wrong in the dynamic memory management

(e.g., double free, or buffer overflow). For this, I wrote some code to

log all malloc, realloc and free calls, as well as a Python script

to analyze the output.

This didn't catch my bug, but perhaps it will be useful to someone else.

In addition to all function calls, it also logs the free memory after

the call and shows the return address (e.g. where the malloc is called

from) to help debugging.

It uses the linker's --wrap option, which allows replacing arbitrary

functions with wrappers at link time. To use it with Arduino, you'll

have to modify platform.txt to change the linker options (I hope to

improve this on the Arduino side at some point, but right now this seems

to be the only way to do this).
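As a rough illustration of what has to end up on the link command line (a sketch, not the code from the logging project itself): with GNU ld, --wrap=SYMBOL redirects calls to SYMBOL to __wrap_SYMBOL and keeps the original reachable as __real_SYMBOL. The helper name wrap_flags below is made up for this example; it only prints the flags as they would be passed through the gcc driver.

```shell
#!/bin/sh
# Sketch: print the gcc driver flags that make GNU ld wrap the given symbols.
# With --wrap=malloc, calls to malloc() resolve to __wrap_malloc(), and the
# wrapper can reach the original implementation as __real_malloc().
# wrap_flags is a hypothetical helper name, not part of any toolchain.
wrap_flags() {
    flags=""
    for sym in "$@"; do
        flags="${flags:+$flags }-Wl,--wrap=$sym"
    done
    printf '%s\n' "$flags"
}

wrap_flags malloc realloc free
```

The printed flags would then be added to the linker options in platform.txt. Which property holds those options depends on the Arduino core and version, so check your own platform.txt rather than trusting a name from memory.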

While setting up Tika, I stumbled upon a fairly unlikely corner case in the Linux kernel networking code, that prevented some of my packets from being delivered at the right place. After quite some digging through debug logs and kernel source code, I found the cause of this problem in the way the bridge module handles netfilter and iptables.

Just in case someone else ends up in this situation and actually manages to find this blog post, I'll detail my setup, the problem and its solution here.

Tika's network setup

Tika runs Debian wheezy, with a single network interface to the internet (which is not involved in this problem). Furthermore, Tika runs a number of lxc containers, which are isolated systems sharing the same kernel, but running a complete userspace of their own. Using kernel namespaces and cgroups, these containers obtain a fair degree of separation: each of them has its own root filesystem, a private set of mounted filesystems, separate user ids, a separate network stack, etc.

Each of these containers then connects to the outside world using a

virtual ethernet device. This is sort of a named pipe, but then for

ethernet. Each veth device has two ends, one inside the container, and

one outside, which are connected. On the inside, it just looks like each

container has a single ethernet device, which is configured normally. On

the outside, all of these veth interfaces are grouped together into a

bridge device, br-lxc, which allows the containers to talk amongst

themselves (just as if they were connected to the same ethernet switch).

The bridge device in the host is configured with an IP address as well,

to allow communication between the host and containers.

Now, I have a few port forwarding rules: when traffic comes in on my public IP address on specific ports, it gets forwarded to a specific container. There is nothing special about this, this is just like forwarding ports to LAN hosts on a NAT router.

A problem with port forwarding like this is that by default, packets

coming in from the internal side cannot be properly handled. As an

example, one of the containers is running a webserver, which serves a

custom Debian repository on the apt.stderr.nl domain. When another

container tries to connect to that, DNS resolution will give it the

external IP of tika, but connecting to that IP fails.

Usually, the DNAT rule used for portforwarding is configured to only

process packets from the external network. But even if it would process

internal packets, it would not work. The DNAT rule changes the

destination address of these packets to point to my web container so

they get sent to the web container. However, the source address is

unchanged. Since the containers have a direct connection (through the

network bridge), reply packets get sent directly to the original

container - the host does not have a chance to "undo" the DNAT on the

reply packets. For external connections, this is not a problem because

the host is the default gateway for the containers and the replies need

to go through the host to reach the external IP.

The most common solution to this is split-horizon DNS - make sure that

all these domains resolve to the internal address of the web container,

so no port forwarding is needed. For various practical reasons, this

didn't work for me, so I settled for the other solution: Apply SNAT in

addition to DNAT, which causes the source address of the forwarded

packets to be changed to the host's address, forcing replies to pass

through the host. The Vuurmuur firewall I was using even had a

special "bounce" rule for exactly this purpose (setting up a DNAT and

SNAT iptables rule).
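To make the DNAT plus SNAT combination concrete, here is a minimal sketch of such a rule pair. It is not what Vuurmuur actually generates; all addresses, the port number and the helper names are made-up examples. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch of a "bounce" rule pair. All addresses are made-up examples:
PUBLIC_IP=192.0.2.10      # external address of the host
BRIDGE_IP=10.0.3.1        # host address on the br-lxc bridge
LXC_NET=10.0.3.0/24       # container network
WEB_IP=10.0.3.20          # web container

# Print commands by default; set DRY_RUN=0 (as root) to really apply them.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi
}

bounce_rules() {
    # DNAT: connections to the public address, port 80, go to the web container.
    run iptables -t nat -A PREROUTING -d "$PUBLIC_IP" -p tcp --dport 80 \
        -j DNAT --to-destination "$WEB_IP:80"
    # SNAT: forwarded connections that came from the container network get the
    # host's bridge address as source, so replies return via the host.
    run iptables -t nat -A POSTROUTING -s "$LXC_NET" -d "$WEB_IP" -p tcp --dport 80 \
        -j SNAT --to-source "$BRIDGE_IP"
}

bounce_rules
```

The SNAT rule is deliberately limited to traffic coming from the container network, so connections from the internet keep their real source address in the web server logs.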

This setup worked perfectly - when connecting to the web container from

other containers. However, when the web container tried to connect to

itself (through the public IP address), the packets got lost. I

initially thought the packets were dropped - they went through the

PREROUTING chain as normal, but never showed up in the FORWARD

chain. I also thought the problem was caused by the packet having the

same source and destination addresses, since packets coming from other

containers worked as normal. Neither of these turned out to be true, as

I'll show below.

Simplifying the setup

Since reproducing the problem on a different and/or simpler setup is always a good approach in debugging, I tried to reproduce the problem on my laptop, using a (single) regular ethernet device and applying DNAT and SNAT rules. This worked as expected, but when I added a bridge interface, containing just the ethernet interface, it broke again. Adding a second (vlan) interface to the bridge uncovered that the problem was not traffic DNATed back to its source, but rather traffic DNATed back to the same bridge port it originated from - traffic from one bridge port DNATed to the other worked normally.

Digging down into the kernel sources for the bridge module, I uncovered this piece of code, which applies some special handling for exactly these DNATed packets on a bridge. It seems this is either a performance optimization, or a way to allow DNATing packets inside a bridge without having to enable full routing, though I find the exact effects of this code rather confusing.

I also found that setting the bridge device to promiscuous mode (e.g.

running tcpdump) makes everything work. Setting

/proc/sys/net/bridge/bridge-nf-call-iptables to 0 also makes this

work. This setting is to prevent bridged packets from passing through

iptables, but since this packet wasn't actually a bridged packet before

PREROUTING, this actually makes the packet be processed using the

normal routing code and progress through all regular chains normally.

Here's what I think happens:

- The packet comes in at br_handle_frame.

- The frame gets dumped into the NF_BR_PRE_ROUTING netfilter chain (i.e. the bridge / ebtables version, not the ip / iptables one).

- The ebtables rules get called.

- The br_nf_pre_routing hook for NF_BR_PRE_ROUTING gets called. This interrupts (returns NF_STOLEN) the handling of the NF_BR_PRE_ROUTING chain, and calls the NF_INET_PRE_ROUTING chain.

- The br_nf_pre_routing_finish finish handler gets called after completing the NF_INET_PRE_ROUTING chain.

- This handler resumes the handling of the interrupted NF_BR_PRE_ROUTING chain. However, because it detects that DNAT has happened, it sets the finish handler to br_nf_pre_routing_finish_bridge instead of the regular br_handle_frame_finish finish handler.

- br_nf_pre_routing_finish_bridge runs. This sets skb->dev to the parent bridge, sets the BRNF_BRIDGED_DNAT flag and calls neigh->output(neigh, skb); which presumably resolves to one of the neigh_*output functions, each of which again calls dev_queue_xmit, which should (eventually) call br_dev_xmit.

- br_dev_xmit sees the BRNF_BRIDGED_DNAT flag and calls br_nf_pre_routing_finish_bridge_slow instead of actually delivering the packet.

- br_nf_pre_routing_finish_bridge_slow sets up the destination MAC address, sets skb->dev back to skb->physindev and calls br_handle_frame_finish.

- br_handle_frame_finish calls br_forward. If the bridge device is set to promiscuous mode, this also delivers the packet up through br_pass_frame_up. Since enabling promiscuous mode fixes my problem, it seems likely that the packet manages to get all the way to here.

- br_forward calls should_deliver, which returns false when skb->dev == p->dev (that is, when the packet would leave through the port it arrived on) and "hairpin mode" is not enabled, causing the packet to be dropped.
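The last step is the one that matters, so here is a toy model of that check in shell. It is not kernel code, only a restatement of the condition: a frame may leave through a port if hairpin mode is on for that port, or if the port is not the one the frame came in on. The port names are made up, and the additional requirement that the port is in forwarding state is left out.

```shell
#!/bin/sh
# Toy model of the bridge forwarding check, not kernel code.
# Usage: should_deliver IN_DEV OUT_PORT_DEV HAIRPIN(0|1)
# Succeeds (exit status 0) if the frame may be sent out of OUT_PORT_DEV.
# (The real check also requires the port to be in forwarding state.)
should_deliver() {
    [ "$3" = 1 ] || [ "$1" != "$2" ]
}

for c in "veth0 veth1 0" "veth0 veth0 0" "veth0 veth0 1"; do
    set -- $c
    if should_deliver "$1" "$2" "$3"; then verdict=forward; else verdict=drop; fi
    echo "in=$1 out=$2 hairpin=$3 -> $verdict"
done
```

Only the middle case, the same port in and out without hairpin mode, is dropped. That is exactly the situation of a container connecting to itself through the public address.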

This seems like a bug, or at least an unfortunate side effect. It seems there are currently two ways to work around this problem:

- Setting /proc/sys/net/bridge/bridge-nf-call-iptables to 0, so there is no need for this DNAT + bridge stuff. The side effect of this solution is that bridged packets don't go through iptables, but that's really what I'd have expected in the first place, so this is not a problem for me.

- Setting the bridge port to "hairpin" mode, which allows sending packets back into it. The side effect here is, AFAICS, that broadcast packets are sent back into the bridge port as well, which isn't really needed (but shouldn't really hurt either).
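Both workarounds can be sketched as commands. The port name veth-web is a made-up example (use the host-side veth of the affected container), and the bridge tool from iproute2 may be missing or too old on older systems. By default the sketch only prints what it would run; pick one of the two workarounds, not both.

```shell
#!/bin/sh
# Sketch of both workarounds. BRIDGE_PORT is a made-up port name; use the
# host-side veth device of the affected container.
BRIDGE_PORT=${BRIDGE_PORT:-veth-web}

# Print commands by default; set DRY_RUN=0 (as root) to really apply them.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi; }

# Workaround 1: keep bridged traffic out of iptables altogether
# (same effect as writing 0 to /proc/sys/net/bridge/bridge-nf-call-iptables).
disable_bridge_nf() {
    run sysctl -w net.bridge.bridge-nf-call-iptables=0
}

# Workaround 2: let the bridge send frames back out of the port they came
# in on. Where "bridge" is unavailable, writing 1 to
# /sys/class/net/PORT/brport/hairpin_mode should do the same.
enable_hairpin() {
    run bridge link set dev "$1" hairpin on
}

disable_bridge_nf
enable_hairpin "$BRIDGE_PORT"
```

Neither setting survives a reboot, and hairpin mode is a property of the port, so it has to be set again whenever the container's veth device is recreated.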

Next up is reporting this to a kernel mailing list to confirm if there is an actual kernel bug, or just a bug in my expectations :-)

Update: Turns out this behaviour was previously spotted, but no consensus about a fix was reached.

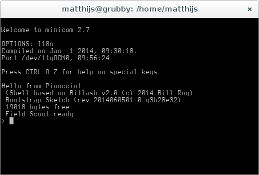

When working with an Arduino, you often want the serial console to stay open, for debugging. However, while you have the serial console open, uploading will not work (because the upload relies on the DTR pin going from high to low, which happens when opening up the serial port, but not if it's already open). The official IDE includes a serial console, which automatically closes when you start an upload (and once this pullrequest is merged, automatically reopens it again).

However, of course I'm not using the GUI serial console in the IDE, but minicom, a text-only serial console I can run inside my screen. Since the IDE (which I do use for compiling and uploading, by calling it on the commandline using a Makefile - I still use vim for editing) does not know about my running minicom, uploading breaks.

I fixed this using some clever shell scripting and signal-passing. I

have an arduinoconsole script (that you can pass the port number to

open - pass 0 for /dev/ttyACM0) that opens up the serial console, and

when the console terminates, it is restarted when you press enter, or a

proper signal is received.

The other side of this is the Makefile I'm using, which kills the serial console before uploading and sends the restart signal after uploading. This means that usually the serial console is already open again before I switch to it (or, I can switch to it while still uploading and I'll know uploading is done because my serial console opens again).
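The idea can be sketched as follows. This is not the script from the repository, just a minimal illustration under a few assumptions: SIGUSR1 is used as the "restart" signal, minicom is the console program, bash is the shell (for read -t) and standard input is an interactive terminal. All function names are made up.

```shell
#!/bin/bash
# Sketch only, not the scripts from the repository. Assumptions: SIGUSR1 is the
# "restart" signal, minicom is the console, stdin is an interactive terminal.

# Map a port number to its device node (0 -> /dev/ttyACM0).
port_path() {
    echo "/dev/ttyACM${1:-0}"
}

restart=0
trap 'restart=1' USR1

# Return once enter is pressed or SIGUSR1 has been received.
wait_for_restart() {
    while [ "$restart" -eq 0 ]; do
        # Short timeout, so the shell regularly gets to run a pending trap.
        if read -r -t 1 _; then return 0; fi
    done
}

# Run the console, and reopen it after it exits (or gets killed).
console_loop() {
    while true; do
        restart=0
        minicom -D "$(port_path "$1")"
        echo "Console closed; press enter or send SIGUSR1 to reopen."
        wait_for_restart
    done
}

# Start with: console_loop 0
```

The upload side would then kill the running minicom before uploading (for example with pkill -x minicom) and afterwards send SIGUSR1 to the process running this loop. How that process is found (a pid file, pkill -f with the script name) is left open here.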

For convenience, I pushed my scripts to a github repository, which makes it easy to keep them up-to-date too:

(This post has been lying around as a draft for a few years, thought I'd finish it up and publish it now that Tika has finally been put into production)

A few years back, I purchased a new server together with some friends,

which we've named "Tika" (daughter of "Tita Tovenaar", both wizards

from a Dutch television series from the 70's). This name combines

Daenney's "wizards and magicians" naming scheme with my "Television

shows from my youth" naming scheme quite neatly. :-)

It's a Supermicro 5015A rack server sporting an Atom D510 dual core processor, 4GB ram, 500GB of HD storage and recently added 128G of SSD storage. It is intended to replace Drsnuggles, my current HP DL360G2 (which has been very robust and loyal so far, but just draws too much power) as well as Daenney's Zeratul, an Apple Xserve. Both of our current machines draw around 180W, versus just around 20-30W for Tika. :-D You've got to love the Atom processor (and it probably outperforms our current hardware anyway, just by being over 5 years newer...).

Over the past three years, I've been working together with Daenney and Bas on setting up the software stack on Tika, which proved a bit more work than expected. We wanted to have a lot of cool things, like LXC containers, privilege separation for webapplications, a custom LDAP schema and a custom web frontend for user (self-)management, etc. Me being the perfectionist I am, it took quite some effort to get things done, also producing quite a number of bug reports, patches and custom scripts in the process.

Last week, we finally put Tika into production. My previous server, drsnuggles, had a hardware breakdown, which forced me to wrap up Tika's configuration into something usable (which still took me a week, since I seem to be unable to compromise on perfection...). So now my e-mail, websites and IRC are working as expected on Tika, with the stuff from Bas and Daenney still needing to be migrated.

I also still have some draft postings lying around about Maroesja, the custom LDAP schema / user management setup we are using. I'll try to wrap those up in case others are interested. The user management frontend we envisioned hasn't been written yet, but we'll soon tire of manual LDAP modification and get to that, I expect :-)

Last week, I got a fancy new JTAGICE3 programmer / debugger. I wanted to achieve two things in my Pinoccio work: Faster uploading of programs (Having 256k of flash space is nice, but flashing so much code through a 115200 baud serial connection is slow...) and doing in-circuit debugging (stepping through code and dumping variables should turn out easier than adding serial prints and re-uploading every time).

In any case, the JTAGICE3 device is well-supported by avrdude, the

opensource uploader for AVR boards. However, unlike devices like the

STK500 development board, the AVR dragon programmer/debugger and the

Arduino bootloader, which use an (emulated) serial port to communicate,

the JTAGICE3 uses a native USB protocol. The upside is that the data

transfer rate is higher, but the downside is that the kernel doesn't

know how to talk to the device, so it doesn't expose something like

/dev/ttyUSB0 as for the other devices.

avrdude solves this by using libusb, which can talk to USB devices

directly, through files in /dev/bus/usb/. However, by default these device

files are writable only by root, since the kernel has no idea what kind

of devices they are and whom to give permissions.

To solve this, we'll have to configure the udev daemon to create the

files in /dev/bus/usb/ with the right permissions. I created a file called

/etc/udev/rules.d/99-local-jtagice3.rules, containing just this line:

SUBSYSTEM=="usb", ATTRS{idVendor}=="03eb", ATTRS{idProduct}=="2110", GROUP="dialout"

This matches the JTAGICE3 specifically using its USB vid:pid (03eb:2110,

use lsusb to find the id of a given device) and changes the group for

the device file to dialout (which is also used for serial devices on

Debian Linux), but you might want to use another group (don't forget to

add your own user to that group and log in again, in any case).
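For other devices the same rule can be generated from the IDs that lsusb prints. The helper below is a hypothetical convenience, not part of udev; it only prints the rule line, and the commented commands show one way to install it and make udev pick it up.

```shell
#!/bin/sh
# Sketch: print a udev rule granting GROUP access to one USB device.
# udev_rule is a hypothetical helper; the IDs are the 4-digit lowercase hex
# values shown by lsusb (vendor:product).
udev_rule() {
    printf 'SUBSYSTEM=="usb", ATTRS{idVendor}=="%s", ATTRS{idProduct}=="%s", GROUP="%s"\n' \
        "$1" "$2" "$3"
}

udev_rule 03eb 2110 dialout

# To install (as root), something like:
#   udev_rule 03eb 2110 dialout > /etc/udev/rules.d/99-local-jtagice3.rules
#   udevadm control --reload-rules && udevadm trigger
# or simply replug the device after creating the file.
```

After that, the device node for the programmer should show up with the chosen group, which can be checked with ls -l on the matching file below /dev/bus/usb/.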

I recently got myself an Xprotolab Portable, which is essentially a tiny, portable 1Msps scope (in hindsight I might have been better off getting the XMinilab Portable, which is essentially the same, but slightly bigger, more expensive and with a bigger display. Given the size of the cables and carrying case, the extra size of the device itself is negligible, while the extra screen size is significant).

In any case, I wanted to update the firmware of the device, but the

instructions refer only to a Windows-only GUI utility from Atmel, called

"FLIP". I remembered seeing a flip.c file inside the avrdude sources

though, so I hoped I could also flash this device using avrdude on

Linux. And it worked! Turns out it's fairly simple.

- Activate the device's bootloader, by powering off, then press K1 and keep it pressed while turning the device back on with the menu key. The red led should light up, the screen will stay blank.

- Get the appropriate firmware hex files from the Xprotolab Portable page. You can find them at the "Hex" link in the top row of icons.

- Run avrdude, for both the application and EEPROM contents:

sudo avrdude -c flip2 -p x32a4u -U application:w:xprotolab-p.hex:i

sudo avrdude -c flip2 -p x32a4u -U eep:w:xprotolab-p.eep:i

I'm running under sudo, since this needs raw USB access to the USB device. Alternatively, you can set up udev to offer access to your regular user (like I did for the JTAGICE3), but that's probably too much effort just for a one-off firmware update.

Done!

Note that you have to use avrdude version 6.1 or above, older versions don't support the FLIP protocol.

I just returned from the Twente Mini Maker Faire in Hengelo, where I saw a lot of cool makers and things. Eye-catchers were the "strandbeest" from Theo Jansen (a big walking contraption, powered by wind and made from PVC tubing), a host of different hackerspaces and fablabs, all kinds of cool technology projects for kids from the Technology Museum Heim and all kinds of cool buttonsy projects from the enthusiastic Herman Kopinga. All this in the cool industrial atmosphere of an old electrical devices factory.

Also nice to meet some old and new familiar people. I ran into Leo Simons, with whom I played theatre sports at Pro Deo years ago. He was now working with his father and brother on the Portobello, a liquid resin based 3D printer, which looked quite promising. I also ran into Edwin Dertien (also familiar from Pro Deo as well as the Gonnagles), who seemed to be on the organising side of some lectures during the faire. I attended one lecture by Harmen Zijp and Diana Wildschut (who form the Amersfoort-based art group "de Spullenmannen") about the overlap and interaction between art and science, which was nice. I also ran into Govert Combée, a LARPer I knew from Enschede.

Overall, this was a nice place to visit. Lots of cool stuff to see and play with, lots of interesting people. There was also a nice balance of technology vs art, and electronics vs "regular" projects: Nice to see that the Maker mindset appeals to all kinds of different people!

Okay, so I'm gonna build a system to do administration tasks in our LARP club. But, what exactly are these? What should this system actually do for us? I've given this question a lot of thought and these are my notes and thoughts, hopefully structured in a useful and readable way. I've had some help of Brenda so far in writing some of these down, but I'll appreciate any comments you can think of (including "hey wouldn't it be cool if the system could do x?", or "Don't you think y is really a bad idea?"). Also, I am still open for suggestions regarding a name.

General outline

The general idea of the system is to simplify various administration tasks in a LARP club. These tasks include (but are not limited to) managing event information, player information, event subscriptions, character information, rule information (skill lists, spells, etc), etc.

This information should be manageable by different cooperating organisers and to some extent by the players themselves. We loosely divide the information into OC information (info centered around players) and IC information (info centered around characters). OC information is plainly editable by players or organisers, where appropriate. IC information is generally editable by organisers and players can propose changes (but only for their own characters). These changes have to be approved by an organiser before being applied.

The information should be exported in various (configurable and/or adaptable) formats, such as a list of subscribed players with payment info, a PDF containing character sheets to be printed or a list of spells for on the main website. Since the exact requirements of each club and/or event with regard to this output vary, there should be some kind of way to easily change this output.

Users

There will be four groups of users.

- Admins. Admins can modify pretty much everything and perform user management.

- Organisers. Organisers are the classical "power users": they can freely modify most information. The players, on the other hand, have limited options.

- Members. Members are people who have logged into the system and/or subscribed for an event.

- Visitors. Visitors are everybody else. Everyone who is not logged in is a visitor and may or may not have access to information in the system (generally only read-only).

Possibly, the first two groups can be unified into one admin group, if the userbase is small.

OC part

The OC part of the system will mainly keep data about persons, events and registrations.

Data

For every person, we keep:

- Name, address, etc.

- Medical information, allergies, etc.

- Vegetarian

- First aid

- Glasses/contacts

For every event we keep:

- Name (ie "Exodus", "Symbols", etc)

- Title (ie, "Symbols 3: Calm")

- Date

It would be nice to also keep different registration options (NPC/PC, with food/without food) with different prices and corresponding deadlines, etc. Since this probably requires a lot of overhead and probably organisation-specific code, this might be simplified to some hardcodedness in the subscription form and some manual work.

Lastly, we keep information about registrations. A registration is essentially a many-to-many relation between persons and events, with some extra information.

For every registration we keep:

- Date of registration

- Type (NPC/PC/etc)

- Other options (food/no food, etc)

- Character(s?) to be played during the event

Lastly, the system should have some knowledge about payments. The ideal way to do this would be to keep a list of payments, together with the amount, date and registration each payment is for. This allows for multiple payments for one registration and allows you to keep a direct mapping between the system and account statements.

The simple way would be just storing the payment date for every registration. This means that partial payments cannot be saved by the system, but it is significantly easier to implement. This solution can also be extended to the more complicated solution above later on.

IC part

Okay, now for the interesting part. Since the system will be used for multiple events, it should be general and customizable. I will start out here with the general part and save the exact requirements for different events for later.

The main piece of data is a character. It should have a name, date of birth, physical description, that sort of thing (probably different for different events). This information is all simple and textual. Furthermore, a character should have a larger description and/or background. This is still mainly textual, but should be somewhat formattable (ie, wiki-ish).

Also, a character has an owner. This is the player or NPC that plays the character. Characters could have no owner, in which case the character is not bound to a particular NPC since it has not been played yet, has been played multiple times, etc. The owner is able to see most information about a character and propose changes to it.

Furthermore, the system will know which player has played which character at which event. This is partly stored in the info about registration (what character a player wants to play), but should probably also be stored separately (what character(s) were actually played). This also enables linking multiple characters to one NPC. Also, there should be room for feedback and/or remarks from the player that played the character.

Lastly, the system knows about the rule data that represent the character: different stats, abilities, skills or whatever is defined for this particular event.

Especially this last part is interesting, because it is not simply modifiable by the character owner. Some kind of moderating system should be in place here, where people can do stuff like "buying" skills. These actions should probably be grouped together and presented to the storytellers as a single "character change proposal", which needs to be approved. It is probably useful to not just use this moderation for skill changes, but for all data, since this requires a storyteller to have looked at all character changes (which is wanted).

Other considerations

- Most objects in the system should be able to store general "remarks", possibly comments (together with time and author), or simpler just a free form text field.

- Most objects should be taggable. That is, it should be possible to assign free-form textual tags to different objects, such as "Primogen" to characters or "Crew" to persons. These tags are generally not used by the system itself, but should be assigned meaning by the users themselves (though some special tags might be used by the system).

- All objects should have most of their properties defined at runtime. In other words, the actual data stored about a person, should be defined in the database and be modifiable at runtime without modifying code or database structure. This enables different organisations to define different fields with low overhead.

- There should be multiple different events in the same system (ie, Lextalionis and Exodus). They should (probably) share the same OC logic and fields, but not the IC part.

- All this data should be readily available on-site (on the event, that is). This means that just before an event, the data should be frozen (ie, made read-only) and the master copy transferred to a different machine that can be taken to the site. Also, there should be some way of making this data readable without having a webserver running (ie for offline viewing on a laptop).

Parts of the data should be versioned. This means that it should be possible to, for example, show the state of a character as it was during the first Symbols event. All character (or all IC) data should be versioned. Most OC data, such as registrations and events, is generally immutable. Only personal data will change often, but it is probably not too useful to save historic personal data.

It should be possible to timestamp all versioned objects at a specific moment, using a custom name (such as "pre-Exodus-1" or something). Since changes to characters should be approved by organisers before being applied, individual changes should be stored by the system anyway. It is therefore not too hard to assign timestamps to these.

Use cases

This is a random list of stuff that should be possible with the system.

- Send mails to (groups of) users (perhaps automatically for payment deadlines)

- Generate a list of all (food) allergies

- Generate a list of all registrations and payment status.

- Generate a list of characters in a world (like the Dramatis Personae of Lextalionis, probably only including characters with a specific tag).

- Generate character sheets

- Keep info about which characters have already been generated and which characters have been changed since.

For members

- List the available events, together with status info (registered, paid)

- List your current registrations (possibly allow changes?)

- Change personal data (also show the current data at every registration)

- Send confirmation email on every registration (including payment info)

- Allow to create a character after registration

Authentication

To identify users, we need some kind of authentication. To prevent users from needing yet another login, we will reuse the authentication from our forums. There is no need for the organisers to explicitly link persons to forum logins or something like that, since the first time someone logs in to the system, they can fill out their personal data and register for events.

There should be some general way to perform authorization on various data. In other words, one should be able to specify what users have access rights over what data (possibly on an object level, or perhaps for individual properties or entire reports).

In my work as a Debian Maintainer for the OpenTTD and related packages, I occasionally come across platform-specific problems. That is, compiling and running OpenTTD works fine on my own x86 and amd64 systems, but when I upload my packages to Debian, it turns out there is some problem that only occurs on more obscure platforms like MIPS, S390 or GNU Hurd.

This morning, I saw that my new grfcodec package is not working on a bunch of architectures (it seems all of the failing architectures are big endian). To find out what's wrong, I'll need to have a machine running one of those architectures so I can debug.

In the past, I've requested access to Debian's "porter" machines, which are intended for these kinds of things. But that's always a hassle, which requires other people's time to set up, so I'm using QEMU to set up a virtual machine running the MIPS architecture now.

What follows is essentially an update for this excellent tutorial about running Debian Etch on QEMU/MIPS(EL) by Aurélien Jarno I found. It's probably best to read that tutorial as well, I'll only give the short version, updated for Squeeze. I've also looked at this tutorial on running Squeeze on QEMU/PowerPC by Uwe Hermann.

Finally, note that Aurélien also has pre-built images available for download, for a whole bunch of platforms, including Squeeze on MIPS. I only noticed this after writing this tutorial, might have saved me a bunch of work ;-p

Preparations

You'll need qemu. The version in Debian Squeeze is sufficient, so

just install the qemu package:

$ aptitude install qemu

You'll need a virtual disk to install Debian Squeeze on:

$ qemu-img create -f qcow2 debian_mips.qcow2 2G

You'll need a debian-installer kernel and initrd to boot from:

$ wget http://ftp.de.debian.org/debian/dists/squeeze/main/installer-mips/current/images/malta/netboot/initrd.gz

$ wget http://ftp.de.debian.org/debian/dists/squeeze/main/installer-mips/current/images/malta/netboot/vmlinux-2.6.32-5-4kc-malta

Note that in Aurélien's tutorial, he used a "qemu" flavoured

installer. It seems this is no longer available in Squeeze, just a few

others (malta, r4k-ip22, r5k-ip32, sb1-bcm91250a). I just picked

the first one and apparently that one works on QEMU.

Also, note that Uwe's PowerPC tutorial suggests downloading an ISO CD image and booting from that. I tried that, but QEMU has no BIOS available for MIPS, so this approach didn't work. Instead, you should tell QEMU about the kernel and initrd and let it load them directly.

Booting the installer

You just run QEMU, pointing it at the installer kernel and initrd and passing some extra kernel options to keep it in text mode:

$ qemu-system-mips -hda debian_mips.qcow2 -kernel vmlinux-2.6.32-5-4kc-malta -initrd initrd.gz -append "root=/dev/ram console=ttyS0" -nographic

Now, you get a Debian installer, which you should complete normally.

As Aurélien also noted, you can ignore the error about a missing boot loader, since QEMU will be directly loading the kernel anyway.

After installation is completed and the virtual system is rebooting, terminate QEMU:

$ killall qemu-system-mips

(I haven't found another way of terminating a -nographic QEMU...)

Booting the system

Booting the system is very similar to booting the installer, but we leave out the initrd and point the kernel to the real root filesystem instead.

Note that this boots using the installer kernel. If you later upgrade the kernel inside the system, you'll need to copy the kernel out from /boot in the virtual system into the host system and use that to boot. QEMU will not look inside the virtual disk for a kernel to boot automagically.

$ qemu-system-mips -hda debian_mips.qcow2 -kernel vmlinux-2.6.32-5-4kc-malta -append "root=/dev/sda1 console=ttyS0" -nographic
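Since the installer and the installed system are started with nearly the same command, the two invocations can be wrapped in one small launcher. This is only a sketch: the file names are the ones used above, while the function name mips_vm and the DRY_RUN switch are made up. By default it prints the command instead of starting QEMU.

```shell
#!/bin/sh
# Sketch of a launcher for the two invocations above. File names match the
# ones used in this post; the function name and DRY_RUN switch are made up.
DISK=debian_mips.qcow2
KERNEL=vmlinux-2.6.32-5-4kc-malta

# Print the command by default (note that the printed form loses the quotes
# around the -append argument); set DRY_RUN=0 to really start QEMU.
run() { if [ "${DRY_RUN:-1}" = 1 ]; then echo "$*"; else "$@"; fi; }

mips_vm() {
    case "$1" in
        install)
            run qemu-system-mips -hda "$DISK" -kernel "$KERNEL" -initrd initrd.gz \
                -append "root=/dev/ram console=ttyS0" -nographic ;;
        boot)
            run qemu-system-mips -hda "$DISK" -kernel "$KERNEL" \
                -append "root=/dev/sda1 console=ttyS0" -nographic ;;
        *)
            echo "usage: mips_vm install|boot" >&2
            return 1 ;;
    esac
}

mips_vm boot
```

When running with -nographic, QEMU also listens for escape sequences on the console: Ctrl-a followed by x terminates the emulator, and Ctrl-a followed by h lists the other sequences.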

More features

Be sure to check Aurélien's tutorial for some more features, options and details.
