These are the ramblings of Matthijs Kooijman, concerning the software he hacks on, hobbies he has and occasionally his personal life.

Most content on this site is licensed under the WTFPL, version 2 (details).

Questions? Praise? Blame? Feel free to contact me.

My old blog (pre-2006) is also still available.

See also my Mastodon page.



As I previously mentioned, Gnome3 is migrating away from the gconf settings storage to the GSettings settings API (along with the default dconf settings storage backend).

So where you previously used the gconf-editor program to browse and edit Gnome settings, you can now use dconf-editor to browse and edit settings.

I do wonder if the name actually implies that dconf-editor is editing the dconf storage directly, instead of using the fancy new GSettings API? :-S

Since 2010, I've been working as a Freelancer (ZZP'er). Of course I registered a domain name right away, but until now, I didn't get around to actually putting a website on there (just a single page with boring markup and some contact information).

Last week, I created an actual website. It's still not spectacular, but at least it has some actual content and a few pages etc. So, with a modest amount of pride, I present: the website of Matthijs Kooijman IT.

For anyone wondering: I didn't put up some bloated CMS running on a database. Instead, I store my content in a handful of text files (using Markdown markup) and use poole to translate those to (static) HTML files. I keep the files in git and set up a git post-update hook to generate the HTML, to make things extra convenient.

To be able to debug a bug in OpenTTD that only occurred on SPARC machines, I needed an old SPARC machine so I could reproduce and hopefully fix the bug. After some inquiring at our local hackerspace, Bitlair (which had a few SPARC32 machines lying around, but I needed SPARC64), I got in touch with The_Niz from NURDSpace, the hackerspace in Wageningen. Surprisingly, it turned out I actually knew The_Niz already through work :-)

In any case, The_Niz was kind enough to lend me a SPARC Ultra1 workstation and Sun keyboard, and r3boot gave me a Sun monitor I could use (of course Sun hardware doesn't use a regular VGA connector...).

There was one caveat, though: The NVRAM battery in the Ultra1 was dead. The NVRAM chip stores the boot settings (like the BIOS settings in a regular PC), but also the serial number and MAC address of the machine (called the IDPROM info). Without those settings, you'll have to manually select the boot device on every boot (by typing commands at a prompt) and netbooting does not work, for lack of a MAC address (I presume regular networking, e.g., from within Linux, does not work either, but I haven't tested that yet).

Sun has taken an interesting approach to their NVRAM chip. Where most machines just use a piece of EEPROM (Flash) memory, which does not need power to remember its contents, Sun has used a piece of RAM memory (which needs power to remember its contents) combined with a small rechargeable battery.

This means that when the machine is not used for a long time or is very old, the battery will eventually die, causing the machine to spit out messages like "The IDPROM contents are invalid" and "Internal loopback test -- Did not receive expected loopback packet".

I needed to install Debian on this Ultra1, so I needed some way to boot the installer. Since netbooting did not work, my first attempt was to ignore the NVRAM problem and just get the machine to boot off a Debian boot cd. This did not quite work out: the cdrom player in the Ultra1 (a 4x burner connected through SCSI) didn't like any of the CD-Rs and CD-RWs I threw at it. It spat out errors like "Illegal Instruction", "Program Terminated" or "SProgram Terminated" (where the first "S" is the start of "SILO", the SPARC bootloader).

As we all remember from a long time ago, the early generation CD-ROM players were quite picky with burnt CDs, and so is this one. I found some advice online to burn my CDs at a lower speed (apparently the drive was rumoured to break on discs burned at 4x or higher), but my drive or CD-Rs didn't support writing slower than 8x... I could write my CD-RW at 4x and on one occasion I managed to boot the installer from a CD-RW and successfully (but very, very slowly) scan the CD-RW contents, but then it broke with read errors when trying to actually load files from the CD-RW.

So, having no usable CD-ROM drive to boot from, I really had to get netboot going. Apparently it is possible (and even easy and not so expensive) to replace the NVRAM chip, but I didn't feel like waiting for one to be shipped. There is a FAQ available online with extensive documentation about the NVRAM chip and instructions on how to reprogram the IDPROM part with valid contents.

So, I reckoned that the battery was only needed when the machine was powered down, so I should be able to reprogram the IDPROM info and then just not power off the system, right?

Turns out that works perfectly. I reprogrammed the IDPROM using the MAC address I read off the sticker on the NVRAM chip and made up a dummy serial number. For some reason, the mkpl command did not work, so I had to use the more verbose mkp command. Afterwards, I gave the reset command and the "Internal loopback test" error was gone and the machine started netbooting, using RARP and TFTP.
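When entering the IDPROM bytes one at a time with mkp, the last byte is a checksum that has to match the rest. The sketch below computes it under the assumption (taken from the usual description of the IDPROM layout, not verified on this machine) that byte 15 is simply the XOR of bytes 0 through 14. The function name idprom_checksum and all byte values are made up for illustration.

```shell
#!/bin/sh
# Sketch: XOR the given IDPROM bytes (two hex digits each, no 0x prefix)
# into a single checksum byte.
# Assumption: IDPROM byte 15 is the XOR of bytes 0-14.
idprom_checksum() {
    sum=0
    for byte in "$@"; do
        sum=$(( $sum ^ 0x$byte ))
    done
    printf '%02x\n' "$sum"
}

# Placeholder bytes only: a format/type pair, a six-byte MAC address,
# a zeroed date field and a dummy three-byte serial number.
idprom_checksum 01 80 08 00 20 c0 ff ee 00 00 00 00 c0 ff ee   # prints a9
```

The printed value would be the byte to store at the last IDPROM position, after the other fifteen have been entered.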

By now, I've managed to install Debian, get Xorg working and reproduce the bug in OpenTTD, so time for fixing it :-D

For some time, I've been looking for a decent backup solution. Such a solution should:

- be completely unattended,

- do off-site backups (and possibly onsite as well)

- be affordable (say, €5 per month max)

- run on Linux (both desktops and headless servers)

- offer plenty of space (couple of hundred gigabytes)

Up until now I haven't found anything that met my demands. Most backup solutions don't run on (headless) Linux and most generic cloud storage providers are way too expensive (because they offer high-availability, high-performance storage, which I don't really need).

Backblaze seemed interesting when they launched a few years ago. They just took enormous piles of COTS hard disks and crammed a couple dozen of them in a custom designed case, to get a lot of cheap storage. They offered an unlimited backup plan, for only a few euros per month. Ideal, but it only works with their own backup client (no normal FTP/DAV/whatever supported), which (still) does not run on Linux.

CrashPlan

Recently, I had another look around and found CrashPlan, which offers an unlimited backup plan for only $5 per month. They advertise with $3 per month, but that only applies when you pay four years of subscription in advance, which is a bit much. Since you still get a refund for any remaining months if you cancel early, paying up front might still be a good idea, though. They also offer a family pack, which allows you to run CrashPlan on up to 10 computers for just over twice the price of a single license. I'll probably get one of these, to back up my laptop, Brenda's laptop and my colocated server.

The best part is that the CrashPlan software runs on Linux, and even on a headless Linux server (which is not officially supported, but CrashPlan does document the setup needed). The headless setup is possible because CrashPlan runs a daemon (as root) that takes care of all the actual work, while the GUI connects to the daemon through a TCP port. I still need to double-check what this means for security though (especially on a multi-user system, I don't want every user with localhost TCP access to be able to administer my backups), but it seems that CrashPlan can be configured to require the account password when the GUI connects to the daemon.

The CrashPlan software itself is free and allows you to do local backups and backups to other computers running CrashPlan (either running under your own account, or computers of friends running on separate accounts). Another cool feature is that it keeps multiple snapshots of each file in the backup, so you can even get back a previous version of a file you messed up. This part is entirely configurable, but by default it keeps up to one snapshot every 15 minutes for recent changes, and reduces that to one snapshot for every month for snapshots over a year old.

When you pay for a subscription, the software transforms into CrashPlan+ (no reinstall required) and you get extra features such as multiple backup sets, automatic software upgrades and most notably, access to the CrashPlan Central cloud storage.

I've been running the CrashPlan software for a few days now (it comes with a 30-day free trial of the unlimited subscription) and so far, I'm quite content with it. It's been backing up my homedir to a local USB disk and into the cloud automatically, I don't need to check up on it every time.

CrashPlan runs on Java, which doesn't usually make me particularly enthusiastic. However, the software seems to run fast and reliably so far, so I'm not complaining. Regarding the software itself, it does seem to me that it's not intended for micromanaging. For example, when my external USB disk is not mounted, the interface shows "Destination unavailable". When I then power on and mount the external disk, it takes some time for CrashPlan to find out about this and in the meantime, there's no button in the interface to convince CrashPlan to recheck the disk. Also, I can add a list of filenames/path patterns to ignore, but there's not really any way to test these regexes.

Having said that, the software seems to do its job nicely if you just let it do its job in the background. One piece of micromanagement which I do like is that you can manually pause and resume the backups. If you pause the backups, they'll be automatically resumed after 24 hours, which is useful if the backups are somehow bothering you, without the risk that you forget to turn the backups back on.

Backing up only when docked

Of course, sending away backups is nice when I am at home and have 50Mbit fiber available, but when I'm on the road, running on some wifi or even 3G connection, I really don't want to load my connection with the sending of backup data.

Of course I can manually pause the backups, but I don't want to be doing that every time when I pick up my laptop and get moving. Since I'm using a docking station, it makes sense to simply pause backups whenever I undock and resume them when I dock again.

The obvious way to implement this would be to simply stop the CrashPlan daemon when undocking, but when I do that, the CrashPlanDesktop GUI becomes unresponsive (and does not recover when the daemon is started again).

So, I had a look at the "admin console", which offers "command line" commands, such as pause and resume. However, this command line seems to be available only inside the GUI, which is a bit hard to script (also note that not all of the commands seem to work for me: sleep and help seem to be unknown commands, which cause the console to close without an error message, just like when I just type something random).

It seems that these console commands are really just sent verbatim to the CrashPlan daemon. Googling around a bit more, I found a small script for CrashPlan PRO (the business version of their software), which allows sending commands to the daemon through a shell script. I made some modifications to this script to make it useful for me:

- don't depend on the current working dir, hardcode /usr/local/crashplan in the script instead

- fixed a bashism (== vs =)

- removed -XstartOnFirstThread argument from java (MacOS only?)

- don't store the commands to send in a separate $command but instead pass "$@" to java directly. The latter prevents the shell from splitting arguments with spaces in them into multiple arguments, which causes the command "pause 9999" to be interpreted as two commands instead of one with an argument.
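The difference between the two approaches in the last point can be seen in isolation with a small sketch. The helper names count_args, via_variable and via_positional are made up for this illustration and are not part of the CrashPlan script.

```shell
#!/bin/sh
# Print how many arguments were received.
count_args() { echo "$#"; }

# Old approach: collect the commands in a variable and expand it unquoted.
# The shell then splits the value on whitespace.
via_variable() {
    command="$*"
    count_args $command
}

# New approach: forward the positional parameters untouched.
via_positional() {
    count_args "$@"
}

via_variable 'pause 9999'     # prints 2
via_positional 'pause 9999'   # prints 1
```

With "$@", each original argument arrives as exactly one argument, whatever spaces it contains.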

I have this script under /usr/local/bin/CrashPlanCommand:

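    #!/bin/sh
    # Send one or more console commands to the running CrashPlan daemon.

    BASE_DIR=/usr/local/crashplan

    # Show usage and bail out when no command was given.
    if [ $# -eq 0 ] ; then
        echo "Usage: $0 <command> [<command>...]"
        exit 1
    fi

    hostPort=localhost:4243
    echo "Connecting to $hostPort"
    echo "Executing $*"

    # Build the classpath from all jars shipped with CrashPlan.
    CP=.
    for f in "$BASE_DIR"/lib/*.jar; do
        CP="${CP}:$f"
    done

    # "$@" keeps every command (including its arguments) as a single argument.
    java -classpath "$CP" com.backup42.service.ui.client.ConsoleApp "$hostPort" "$@"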

Now I can run CrashPlanCommand 'pause 9999' and CrashPlanCommand resume to pause and resume the backups (9999 is the number of minutes to pause, which is about a week, since I might be undocked for more than 24 hours, which is the default pause time).
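Since the pause command takes its duration in minutes, a quick bit of shell arithmetic helps to pick a value. This is only a convenience sketch; the helper name days_to_minutes is made up.

```shell
#!/bin/sh
# Convert a number of whole days into minutes.
days_to_minutes() {
    echo $(( $1 * 24 * 60 ))
}

days_to_minutes 7             # prints 10080
echo $(( 9999 / 60 / 24 ))    # whole days covered by 9999 minutes: prints 6
```

So 9999 minutes is just short of a full week, and something like CrashPlanCommand "pause $(days_to_minutes 7)" would presumably pause for exactly seven days.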

To make this run automatically on undock, I created a simple udev rules file as /etc/udev/rules.d/10-local-crashplan-dock.rules:

ACTION=="change", ATTR{docked}=="0", ATTR{type}=="dock_station", RUN+="/usr/local/bin/CrashPlanCommand 'pause 9999'"

ACTION=="change", ATTR{docked}=="1", ATTR{type}=="dock_station", RUN+="/usr/local/bin/CrashPlanCommand resume"
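These rules only act on change events, so they do nothing for the state the laptop is in when it boots. A minimal sketch of a helper that derives the matching console command from the dock's current state could look like this. It assumes the dock shows up as a sysfs directory containing the same type and docked attributes the rules match on (commonly /sys/devices/platform/dock.0, but that path is an assumption), and the name dock_command is made up.

```shell
#!/bin/sh
# Print the CrashPlan console command matching a dock's current state.
# $1: sysfs directory of the dock device (assumed, e.g.
#     /sys/devices/platform/dock.0).
# Returns non-zero when the directory is not a dock station or the
# state cannot be read.
dock_command() {
    dir=$1
    [ "$(cat "$dir/type" 2>/dev/null)" = "dock_station" ] || return 1
    case "$(cat "$dir/docked" 2>/dev/null)" in
        1) echo "resume" ;;
        0) echo "pause 9999" ;;
        *) return 1 ;;
    esac
}

# Possible use, for example from a boot script:
#   cmd=$(dock_command /sys/devices/platform/dock.0) &&
#       /usr/local/bin/CrashPlanCommand "$cmd"
```

Passing the directory as an argument keeps the helper independent of the exact device name, which may differ between machines.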

And voilà! Automatic pausing and resuming on undocking/docking of my laptop!

Last weekend, I was at the winter Efteling. The Efteling opens up for a few weekends during the cold season, with an icy theme. Last weekend was the first. Brenda had been buying a lot of Madras Chicken from Knorr, which got us six tickets at a discount. We offered two to Dennis and Alice and gave the other two to Suus and Gijs for their respective birthdays.

We also knew about a few other friends (LARPers) who would go in the same weekend, but a whole throng of LARPers turned up last Saturday (about 25 to 30 I think). Apart from that, it wasn't too busy at the park, so there were hardly any lines during the day. I have a nice souvenir picture from the Pandadroom (Panda dream) attraction (since everyone knows the Pandadroom isn't so much fun because of the fancy 3D animation, but because of the playground with video games that comes after!).


Since we did about every attraction in the park, my brains were upside down in my skull and I was pretty exhausted when we finally got on the train home. Yet, I was not headed home, since my aunt held her birthday in Eindhoven, which is pretty close to the Efteling. I joined in on the party and was not in bed before 0530.

I went home the next day after breakfast (halfway through the afternoon), back to Brenda. As you might imagine, the exhaustion had hardly lessened, so I decided not to get up together with Brenda Monday morning at 0650. Good thing, since I managed to sleep until 1300 for a whopping 13.5 hours of sleep. Didn't know I was that tired...

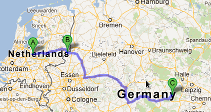

Last weekend, I travelled to Mattstedt, a small village near the center of Germany, to attend a folk festival there. The Gonnagles, a Dutch folk band, were performing at the festival and asked me to help out as an audio technician (since not all of the Gonnagles could attend). Liking the opportunity to do some more sound engineering, do some dancing and hang out with the Gonnagles, I agreed to come along.

After I said yes, I realized that the festival was quite far into Germany (5 hours from Enschede, though on the way there, we got caught in a traffic jam making it 7.5 hours, plus 1.5 hours from Amersfoort to Enschede). Still, I shared the car with nice people (Erik and Moes on the way there, just Erik on the way back) and the festival was nice, so in the end it was worth every minute of driving time. Moes, Erik and I also travelled on Friday instead of Saturday, leaving an extra Friday night for dancing and Saturday afternoon for relaxing.

During the festival, I ended up helping out with the audio engineering of the rest of the festival, instead of just the Gonnagles' performance. There were two audio technicians there, but neither of them was very experienced in setting up a live performance and getting to the bottom of any problems that (always) show up. Also, the rented equipment wasn't quite top-notch quality, which didn't really help either.

Fortunately, I was able to help out a bit and debug some problems with the PA system and monitor speakers. On Saturday, I also did most of the mixing for Cassis, one of the other bands. Apparently people thought I did a good job, since I got a lot of thanks from people who apparently thought I single-handedly saved the festival from horrible sound quality (which would be underappreciating the other technicians, who also worked hard to get everything running).

The festival itself was nice as well. I always enjoy the performance of Parasol, who are very talented, but I also enjoyed the other bands. The weather was good, so I had some nice relaxed moments lying in the sun, I listened to good music, shared some nice dances with a pretty German girl, and just had fun.

So, again next year?

For the occasion of my fortieth blood donation at the Sanquin blood bank, I got a nice plush pelican (which is their mascot / logo). It got a nice spot on top of one of the speakers on my desk, where it has a nice view of my room.

Are you a blood donor yet? More donors are still needed in the Netherlands, so sign up now!